End-to-end printed Mongolian recognition and translation method based on a spatial transformation network

The method combines a spatial transformation network with Mongolian language processing and is applied in character recognition, natural language translation, and neural learning methods. It addresses the limited research on minority languages, the poor results of existing recognition and translation approaches, and the lack of databases, with the aim of improving recognition accuracy.


Embodiment Construction

[0035] The implementation of the present invention will be described in detail below in conjunction with the drawings and examples.

[0036] The invention provides an end-to-end printed Mongolian recognition and translation method based on a spatial transformation network. The method comprises two steps: text recognition and text translation. Before text recognition, the data can be preprocessed so that the deep neural network extracts features more effectively. The preprocessing mainly consists of analyzing and segmenting the printed Mongolian text.
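The segmentation step can be illustrated with a minimal sketch. The text above does not say how the segmentation is done, so the projection-profile approach below is an assumption, as are the function name `split_columns` and the parameter `min_gap`. It relies only on the fact that traditional Mongolian script is written in vertical columns, and it splits a binarized page wherever blank pixel columns separate runs of ink.

```python
from typing import List, Tuple


def split_columns(binary: List[List[int]], min_gap: int = 1) -> List[Tuple[int, int]]:
    """Return (start, end) x-ranges of ink runs, end exclusive.

    `binary` is a row-major image where 1 marks ink and 0 marks background.
    A run is closed once `min_gap` consecutive blank pixel columns follow it.
    Hypothetical helper: the source does not specify this procedure.
    """
    width = len(binary[0])
    # Vertical projection profile: amount of ink in each pixel column.
    profile = [sum(row[x] for row in binary) for x in range(width)]

    spans: List[Tuple[int, int]] = []
    start = None  # x where the current ink run began, or None
    gap = 0       # blank columns seen since the last ink column
    for x, ink in enumerate(profile):
        if ink > 0:
            if start is None:
                start = x
            gap = 0
        elif start is not None:
            gap += 1
            if gap >= min_gap:
                # Close the run at the first blank column of the gap.
                spans.append((start, x - gap + 1))
                start = None
                gap = 0
    if start is not None:
        # The run reaches the right edge; trim any trailing blank columns.
        spans.append((start, width - gap))
    return spans
```

Each returned range can then be cropped from the page and passed to the recognition network as a separate text column. A larger `min_gap` keeps narrow gaps inside one column from splitting it.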

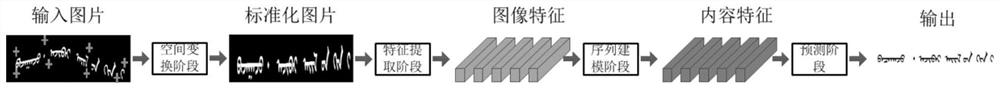

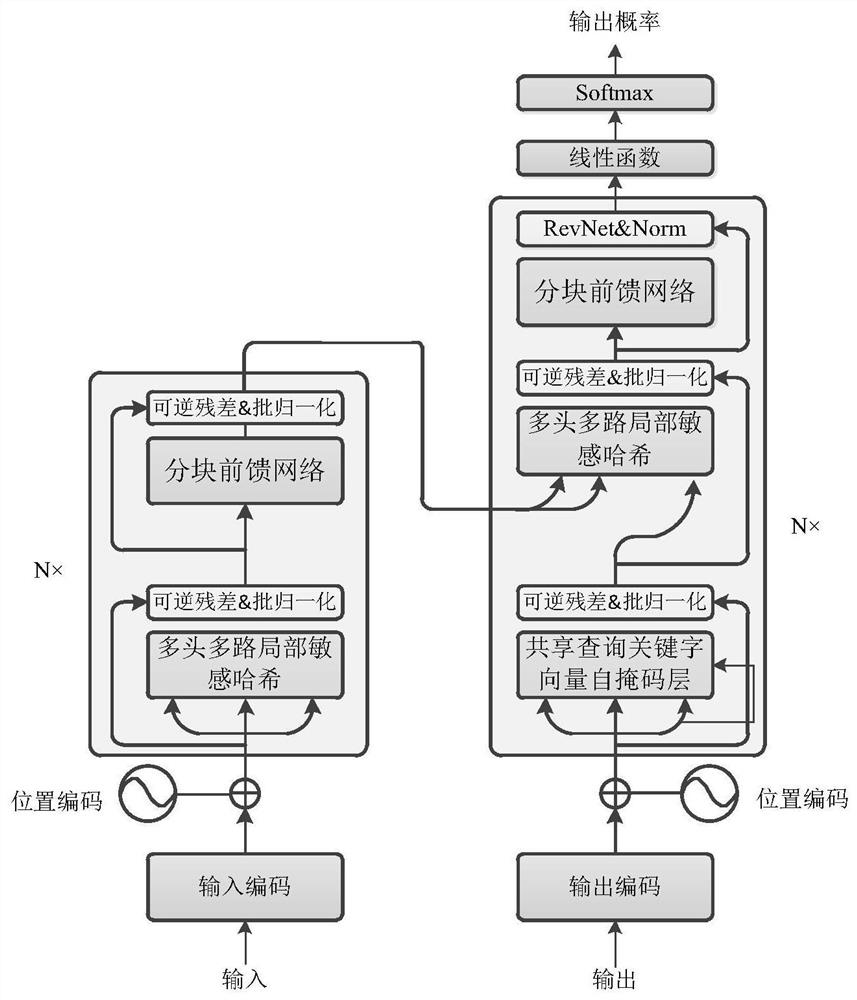

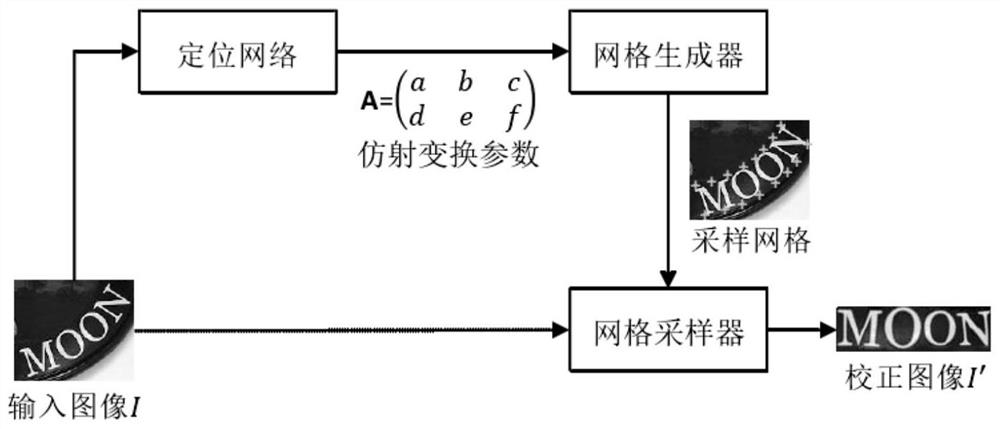

[0037] Text recognition is performed by an end-to-end printed Mongolian recognition network based on the spatial transformation network. Referring to Figure 1, the present invention starts from the characteristics of Mongolian characters and performs recognition in four stages: spatial transformation (Trans.), feature extraction (Feat.), sequence modeling (Seq.), and prediction (Pred.), wherein the space transformat...
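The first of the four stages, spatial transformation, can be sketched as follows. The text is cut off before it specifies the transformation, so this example assumes the simplest case: an affine map applied through a sampling grid with bilinear interpolation, in normalized coordinates from -1 to 1. The names `affine_sample`, `bilinear`, and `theta` are illustrative. In a trained network the parameters `theta` would be predicted from the input image; here they are supplied by hand.

```python
import math
from typing import List, Sequence

Image = List[List[float]]


def bilinear(image: Image, px: float, py: float) -> float:
    """Bilinearly interpolate `image` at pixel position (px, py).

    Neighbours that fall outside the image contribute zero.
    """
    in_h, in_w = len(image), len(image[0])
    x0, y0 = math.floor(px), math.floor(py)
    fx, fy = px - x0, py - y0
    value = 0.0
    for yy, wy in ((y0, 1.0 - fy), (y0 + 1, fy)):
        for xx, wx in ((x0, 1.0 - fx), (x0 + 1, fx)):
            if 0 <= yy < in_h and 0 <= xx < in_w:
                value += wy * wx * image[yy][xx]
    return value


def affine_sample(image: Image, theta: Sequence[Sequence[float]],
                  out_h: int, out_w: int) -> Image:
    """Resample `image` through a 2x3 affine matrix `theta`.

    Each output pixel (xt, yt), in normalized [-1, 1] coordinates, reads
    the input at (xs, ys) = theta @ (xt, yt, 1). This is an assumed
    affine variant, not necessarily the transformation in the source.
    """
    in_h, in_w = len(image), len(image[0])
    out = [[0.0] * out_w for _ in range(out_h)]
    for i in range(out_h):
        yt = 2.0 * i / (out_h - 1) - 1.0 if out_h > 1 else 0.0
        for j in range(out_w):
            xt = 2.0 * j / (out_w - 1) - 1.0 if out_w > 1 else 0.0
            # Map the output location back to a source location.
            xs = theta[0][0] * xt + theta[0][1] * yt + theta[0][2]
            ys = theta[1][0] * xt + theta[1][1] * yt + theta[1][2]
            # Convert normalized coordinates to pixel coordinates.
            px = (xs + 1.0) * (in_w - 1) / 2.0
            py = (ys + 1.0) * (in_h - 1) / 2.0
            out[i][j] = bilinear(image, px, py)
    return out
```

With `theta = [[1, 0, 0], [0, 1, 0]]` the image passes through unchanged, and negating `theta[0][0]` mirrors it horizontally. Because the output is a weighted sum of input pixels, the operation is differentiable with respect to `theta`, which is what would let such a stage be trained jointly with the feature extraction, sequence modeling, and prediction stages.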

