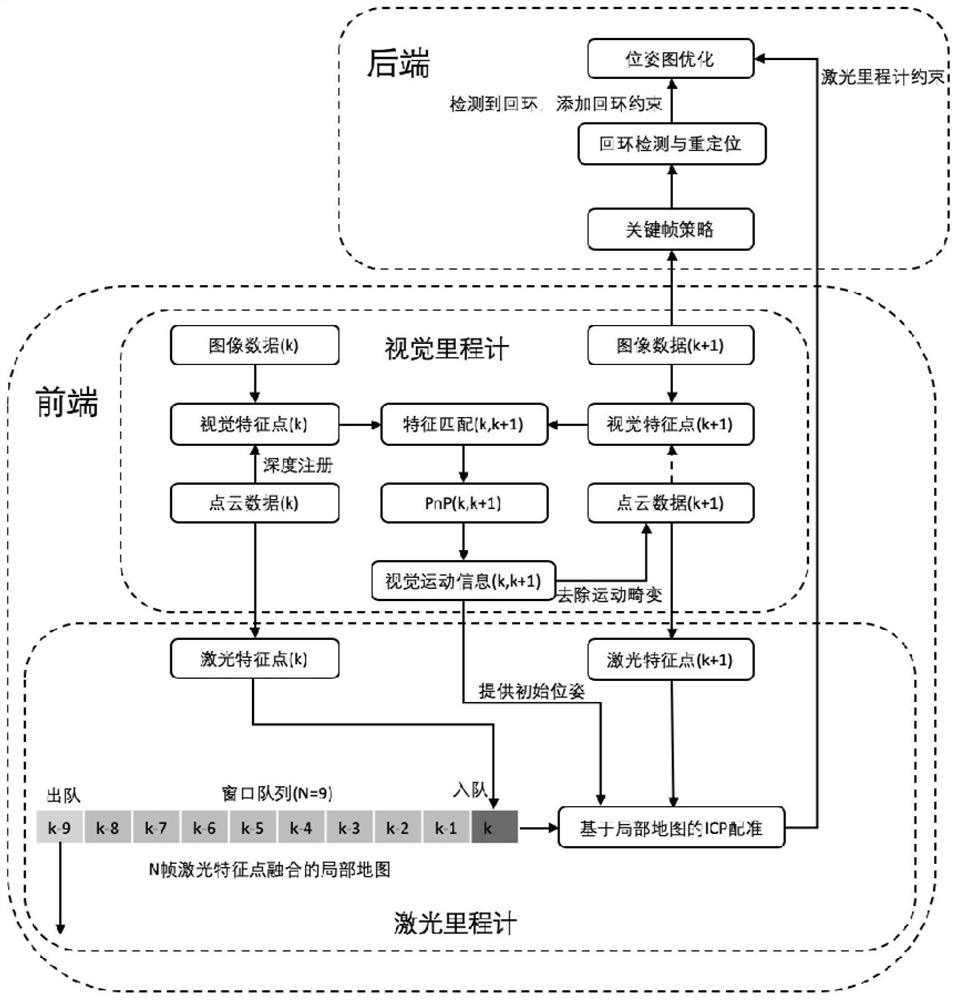

Simultaneous localization and mapping method based on vision and laser radar

A lidar and map construction technology, applied in image enhancement, image analysis, image data processing, and related fields. It addresses problems such as motion distortion, susceptibility to degradation, and lack of generality, and achieves the effect of eliminating accumulated errors.



Embodiment

[0174] To further demonstrate the effect of the present invention, this embodiment uses the partially undistorted raw data from KITTI's rawdata data set and selects 10 of the first 11 sequences (00-10) in KITTI as experimental data (sequence 03 is excluded because its corresponding raw point cloud data was not found in rawdata).

[0175] Evaluation metrics:

[0176] To reflect different positioning requirements, this embodiment evaluates positioning accuracy with two metrics: relative error and absolute error.

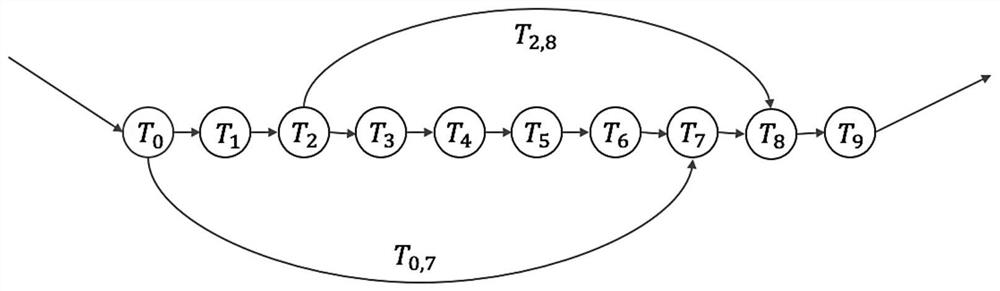

[0177] The relative error evaluation takes each pose as a starting frame and selects the 8 poses that lie 100 meters, 200 meters, 300 meters, ..., 800 meters further along the trajectory. The estimated motion over each segment is compared with the ground-truth motion calculated by the same method, the error is divided by the actual length of the segment, and the average rotation error R (unit: degrees / 100 meters) and average translation error t (unit: %) are calculated. The relative error evaluation avoids the cumu...
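The segment-based relative error described in [0177] can be sketched in Python as follows. This is a minimal illustration, not the embodiment's actual evaluation code. It assumes poses are given as 4x4 homogeneous matrices (nested lists) in a common world frame, and that the per-segment error is formed as in the public KITTI odometry evaluation convention (estimated relative motion inverted and composed with the ground-truth relative motion). All function names here (`relative_errors`, `rigid_inverse`, and so on) are hypothetical.

```python
import bisect
import math

SEGMENT_LENGTHS = [100, 200, 300, 400, 500, 600, 700, 800]  # meters


def mat_mul(a, b):
    """Product of two 4x4 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def rigid_inverse(t):
    """Inverse of a rigid transform [R | p], which is [R^T | -R^T p]."""
    r_t = [[t[j][i] for j in range(3)] for i in range(3)]
    p = [-sum(r_t[i][k] * t[k][3] for k in range(3)) for i in range(3)]
    return [r_t[i] + [p[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def path_distances(poses):
    """Cumulative travelled distance (meters) at every pose."""
    dist = [0.0]
    for prev, cur in zip(poses, poses[1:]):
        step = math.sqrt(sum((cur[i][3] - prev[i][3]) ** 2 for i in range(3)))
        dist.append(dist[-1] + step)
    return dist


def rotation_angle(t):
    """Rotation angle (radians) of the 3x3 rotation part of t."""
    trace = t[0][0] + t[1][1] + t[2][2]
    return math.acos(max(-1.0, min(1.0, (trace - 1.0) / 2.0)))


def translation_norm(t):
    """Euclidean norm of the translation part of t."""
    return math.sqrt(sum(t[i][3] ** 2 for i in range(3)))


def relative_errors(gt, est):
    """Return (R in degrees per 100 m, t in percent), or None if the
    trajectory is shorter than the smallest segment length."""
    dist = path_distances(gt)
    rot_errs, trans_errs = [], []
    for first in range(len(gt)):  # every pose is a starting frame
        for length in SEGMENT_LENGTHS:
            # First pose at least `length` meters further along the path.
            last = bisect.bisect_left(dist, dist[first] + length, first)
            if last == len(gt):
                continue  # trajectory ends before this segment does
            gt_rel = mat_mul(rigid_inverse(gt[first]), gt[last])
            est_rel = mat_mul(rigid_inverse(est[first]), est[last])
            err = mat_mul(rigid_inverse(est_rel), gt_rel)
            actual = dist[last] - dist[first]  # actual segment length
            rot_errs.append(rotation_angle(err) / actual)
            trans_errs.append(translation_norm(err) / actual)
    if not trans_errs:
        return None
    r = math.degrees(sum(rot_errs) / len(rot_errs)) * 100.0
    t = sum(trans_errs) / len(trans_errs) * 100.0
    return r, t
```

Because every segment error is normalized by that segment's own length, a drift that grows in proportion to distance travelled yields the same t for short and long segments. Segment lengths are measured along the ground-truth trajectory, which is an assumption of this sketch.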
