Method and system for generating attention remote sensing image description based on high-low layer feature fusion

A remote sensing image description technology based on feature fusion, applied in biological neural network models, instruments, electrical digital data processing, etc. It addresses problems such as the inability to describe input remote sensing images well, and achieves an accurate understanding of the image.

Embodiment 1

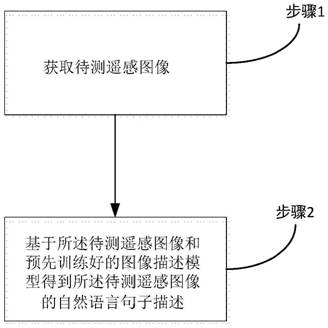

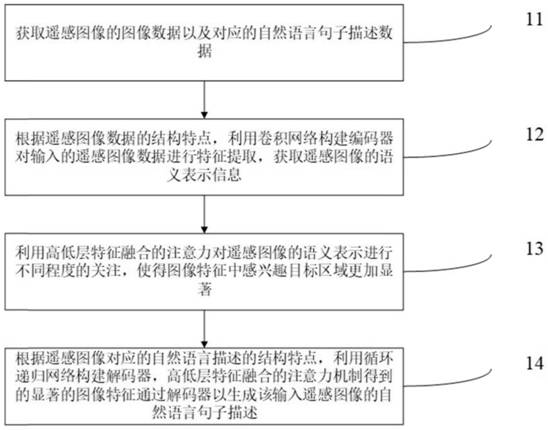

[0054] Embodiment 1: An attention-based remote sensing image description generation method with fusion of high-level and low-level features, as shown in Figure 1, includes:

[0055] Step 1: Obtain the remote sensing image to be tested;

[0056] Step 2: Obtain a natural language sentence description of the remote sensing image to be tested based on the remote sensing image to be tested and a pre-trained image description model;

[0057] Here, the image description model is built from an encoder constructed from a convolutional network, an attention mechanism that fuses high-level and low-level features, and a decoder constructed from a recurrent network.
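The attention step in this model can be illustrated with a minimal sketch. It assumes the encoder yields one low-level and one high-level feature vector per image region, that fusion is plain concatenation, and that attention is a dot product against the decoder's hidden state. None of these choices is stated in the excerpt above; the names `fuse_features`, `attend`, and the toy values are hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def fuse_features(low_level, high_level):
    """Fuse per-region features by concatenation (an assumed fusion rule)."""
    return [lo + hi for lo, hi in zip(low_level, high_level)]

def attend(fused, hidden):
    """Dot-product attention: score each fused region vector against the
    decoder hidden state, normalize, and return the weighted sum."""
    scores = [sum(f * h for f, h in zip(region, hidden)) for region in fused]
    weights = softmax(scores)
    dim = len(fused[0])
    context = [
        sum(w * region[d] for w, region in zip(weights, fused))
        for d in range(dim)
    ]
    return weights, context

# Toy input: 3 image regions with 2-d low-level and 2-d high-level features.
low = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
high = [[0.2, 0.1], [0.9, 0.3], [0.4, 0.4]]
hidden = [0.0, 1.0, 1.0, 0.0]  # hypothetical decoder state, same size as a fused vector

fused = fuse_features(low, high)
weights, context = attend(fused, hidden)
```

In a recurrent decoder, a context vector of this kind would typically be recomputed at every time step and fed in alongside the previous word, so that each generated word can focus on different image regions.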

[0058] Step 2, obtaining a natural language sentence description of the remote sensing image to be tested based on the remote sensing image to be tested and a pre-trained image description model, proceeds as follows:

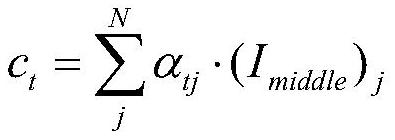

[0059] An embodiment of the present invention provides an attention remote sensing image description generation method...

Embodiment 2

[0101] Based on the same inventive concept, the present invention also provides a system for generating attention-based remote sensing image descriptions with fusion of high-level and low-level features, including:

[0102] A data acquisition module, configured to acquire the remote sensing image to be tested;

[0103] A language generation module, configured to obtain a natural language sentence description of the remote sensing image to be tested based on the remote sensing image to be tested and a pre-trained image description model;

[0104] Wherein, the training of the image description model includes: training the encoder and the decoder based on the remote sensing image and the natural language sentence description information corresponding to the remote sensing image.
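The two-module structure described above can be sketched as follows. This is only an illustration of how the modules hand data to each other: the class names, the `Image` type alias, and `stub_model` are hypothetical, and the stub stands in for the pre-trained encoder, attention, and decoder, which the excerpt does not specify in enough detail to implement.

```python
from dataclasses import dataclass
from typing import Callable, List

Image = List[List[float]]  # hypothetical stand-in for a remote sensing image

@dataclass
class DataAcquisitionModule:
    """Acquires the remote sensing image to be tested."""
    source: Callable[[], Image]

    def acquire(self) -> Image:
        return self.source()

@dataclass
class LanguageGenerationModule:
    """Maps an image to a sentence using a pre-trained description model."""
    model: Callable[[Image], str]

    def describe(self, image: Image) -> str:
        return self.model(image)

def stub_model(image: Image) -> str:
    """Placeholder for the pre-trained image description model (assumption)."""
    pixels = [p for row in image for p in row]
    mean = sum(pixels) / len(pixels)
    return "a bright scene" if mean > 0.5 else "a dark scene"

# Wire the modules together: acquire an image, then generate its description.
acquisition = DataAcquisitionModule(source=lambda: [[0.9, 0.8], [0.7, 0.6]])
generation = LanguageGenerationModule(model=stub_model)
caption = generation.describe(acquisition.acquire())
```

Passing the model in as a callable keeps the language generation module independent of how the encoder and decoder were trained, which mirrors the separation between training and inference in the description.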

[0105] Preferably, the language generation module includes:

[0106] The feature extraction sub-module extracts features of the remote sensing image to be tested based on a pre-trained encoder, obtains the global semantic f...
