Road edge detection method based on fusion of camera and three-dimensional laser radar data

A road edge detection method that fuses camera and three-dimensional laser radar data, applied in the field of intelligent traffic road environment perception. It addresses the problems of limited information and the inability to provide three-dimensional information, obtains accurate and reliable road information, meets real-time requirements, and improves detection accuracy.

Embodiment Construction

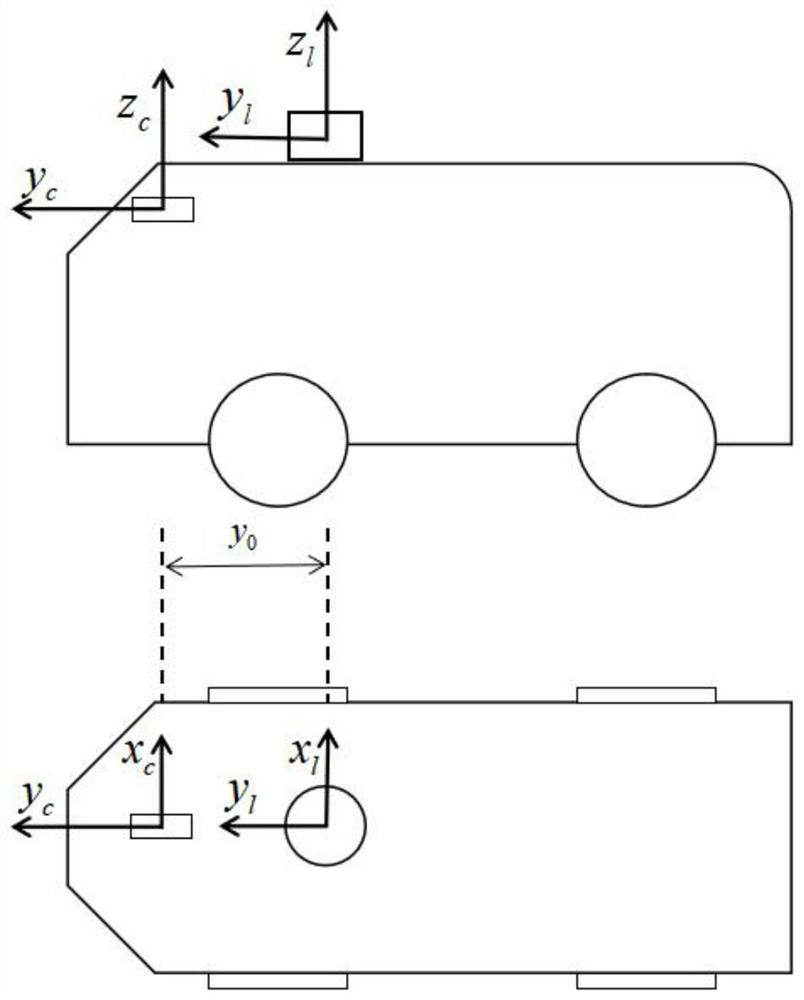

[0040] The specific technical solutions of the embodiments of the present invention will be further described below in conjunction with the accompanying drawings.

[0041] In this embodiment of the present invention, a Velodyne LiDAR Puck 16-line laser radar collects point cloud data and a grayscale camera collects image data. A road edge detection method fusing camera and three-dimensional laser radar data is proposed which, as shown in Figure 1, includes the following steps:

[0042] Step 1: Establish a lidar coordinate system with the 3D lidar as the origin, use the 3D lidar to collect a road point cloud data set, and convert each point in that data set from 3D space onto a 2D grid map;
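Step 1 does not state the grid resolution, the mapped area, or what is stored in each cell, so the following Python sketch assumes a square cell size, fixed x and y limits in the lidar frame, and a per-cell list of point heights. The function names `points_to_grid` and `height_difference_map` are hypothetical, and the max-minus-min height per cell is shown only as one common cue for raised road edges, not as the criterion used in this method.

```python
from collections import defaultdict


def points_to_grid(points, cell_size=0.2, x_range=(-20.0, 20.0), y_range=(-20.0, 20.0)):
    """Project 3D lidar points (x, y, z) onto a 2D grid in the x-y plane.

    Assumptions (not from the source): square cells of `cell_size` metres and
    a rectangular area bounded by `x_range` and `y_range` in the lidar frame.
    Returns a dict mapping (row, col) -> list of z values falling in that cell.
    """
    grid = defaultdict(list)
    x_min, x_max = x_range
    y_min, y_max = y_range
    for x, y, z in points:
        # Discard points that fall outside the mapped area.
        if not (x_min <= x < x_max and y_min <= y < y_max):
            continue
        col = int((x - x_min) // cell_size)  # column index along x
        row = int((y - y_min) // cell_size)  # row index along y
        grid[(row, col)].append(z)
    return grid


def height_difference_map(grid):
    """Per-cell height spread (max z minus min z), a hypothetical curb cue."""
    return {cell: max(zs) - min(zs) for cell, zs in grid.items()}


if __name__ == "__main__":
    cloud = [(0.5, 0.5, 0.0), (0.6, 0.4, 0.15), (5.0, -3.0, -1.6), (100.0, 0.0, 0.0)]
    g = points_to_grid(cloud, cell_size=1.0, x_range=(-10.0, 10.0), y_range=(-10.0, 10.0))
    print(dict(g))
    print(height_difference_map(g))
```

Keeping every point's height per cell (rather than a single statistic) leaves later steps free to choose their own per-cell feature.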

[0043] Specifically, the method for converting point cloud data from 3D space to a 2D grid map includes:

[0044] The Cartesian coordinate system of the three-dimensional laser radar takes the center of the radar's internal rotating mirror as its origin, and the ang...
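The angle definitions are not given in this excerpt, so the following Python sketch assumes the Velodyne-style convention in which each return is reported as a range r, a vertical (elevation) angle ω and an azimuth α measured from the +y axis, giving x = r·cos ω·sin α, y = r·cos ω·cos α, z = r·sin ω. The function name `polar_to_cartesian` and the constant `VLP16_VERTICAL_ANGLES` are hypothetical; the sixteen elevation angles from -15° to +15° in 2° steps match the Puck's nominal laser layout, listed here in sorted order rather than firing order.

```python
import math

# Nominal elevation angles (degrees) of the 16 lasers, sorted ascending.
VLP16_VERTICAL_ANGLES = list(range(-15, 16, 2))


def polar_to_cartesian(r, vertical_deg, azimuth_deg):
    """Convert one lidar return (range, elevation, azimuth) to (x, y, z).

    Assumed convention (not confirmed by the source): the origin is the center
    of the rotating mirror, elevation is measured up from the horizontal plane,
    and azimuth is measured from the +y axis towards +x.
    """
    omega = math.radians(vertical_deg)
    alpha = math.radians(azimuth_deg)
    x = r * math.cos(omega) * math.sin(alpha)
    y = r * math.cos(omega) * math.cos(alpha)
    z = r * math.sin(omega)
    return x, y, z


if __name__ == "__main__":
    # A 10 m return from the lowest laser, pointing straight ahead.
    print(polar_to_cartesian(10.0, VLP16_VERTICAL_ANGLES[0], 0.0))
```

Points converted this way can then be fed to the grid projection of Step 1.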
