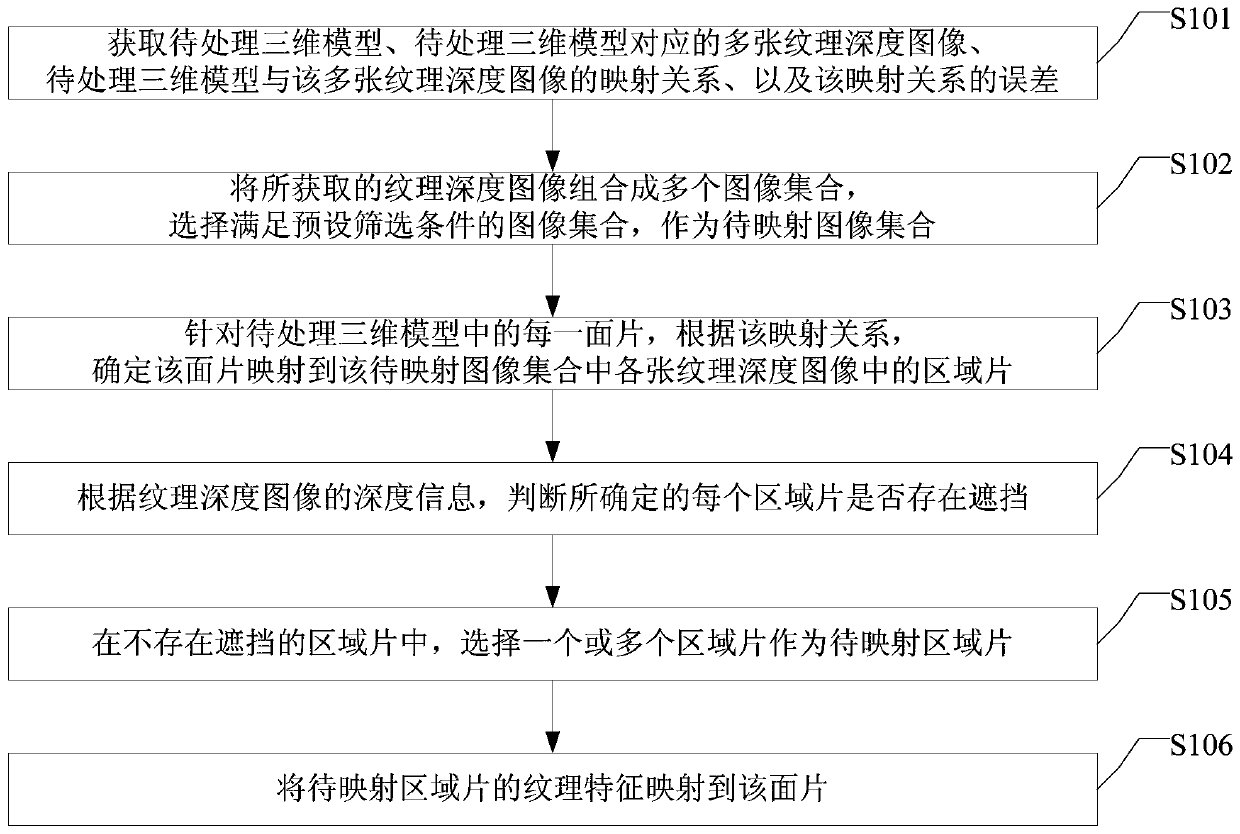

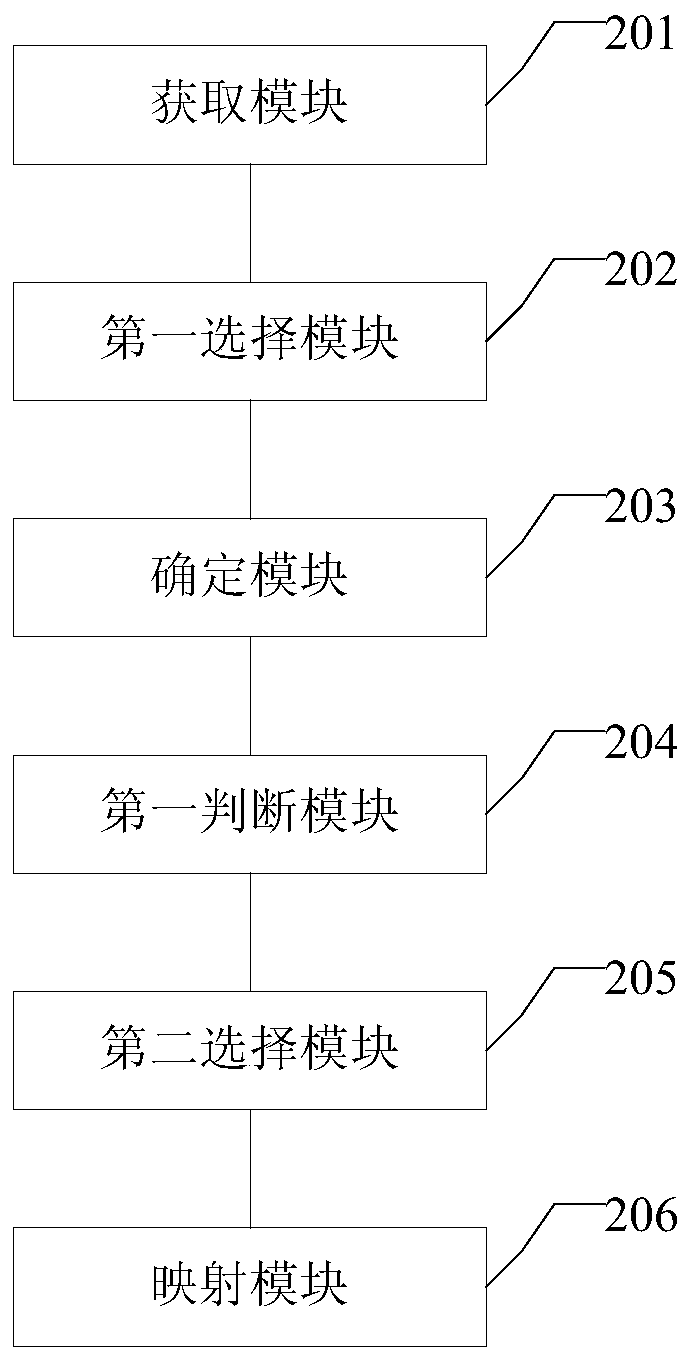

Texture mapping method, device and equipment based on three-dimensional model

A texture mapping technique based on a three-dimensional model, applied in the field of computer vision, that addresses problems such as poor results when mapping texture features onto a three-dimensional model.

Embodiment approach

[0092] In one implementation, the preset screening conditions include:

[0093] The sum of the mapping-relationship errors of the texture depth images in the image set, together with the number of texture depth images in the image set, is the smallest;

[0094] The sum of the pose rotation angles of the texture depth images in the image set is not less than 360 degrees, where the pose rotation angle of a texture depth image is the pose rotation angle between that texture depth image and the texture depth image of the adjacent viewpoint in the preset direction.

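[0095] In this embodiment, the screening conditions include all three of the above conditions at the same time: the smallest total error, the smallest number of images, and a total pose rotation angle of not less than 360 degrees. The screening condition can be expressed as: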

[0096]

[0097]

[0098] Here, $I$ denotes the image set, $I_i$ denotes a texture depth image in the image set, and $e_{I_i}$ denotes the error of the mapping relationship corresponding to the texture depth image $I_i$...
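The screening conditions in [0093] and [0094] can be illustrated with a minimal sketch. It is not the patent's own formula, which is missing from this excerpt. The sketch assumes that the quantity to minimize is the sum of the mapping errors plus the number of selected images, and that each candidate image carries a fixed pose rotation angle to its adjacent viewpoint. The names `Candidate`, `select_image_set`, and `min_total_rotation` are hypothetical, and the exhaustive search is only practical for a small number of candidates.

```python
from itertools import combinations
from typing import NamedTuple, Optional, Sequence, Tuple


class Candidate(NamedTuple):
    """Hypothetical record for one texture depth image."""
    name: str
    error: float          # mapping-relationship error of this image
    rotation_deg: float   # pose rotation angle to the adjacent viewpoint


def select_image_set(
    candidates: Sequence[Candidate],
    min_total_rotation: float = 360.0,
) -> Optional[Tuple[Candidate, ...]]:
    """Return the subset with the smallest (error sum + image count)
    whose rotation angles sum to at least min_total_rotation.

    Assumption: the two minimized quantities are combined by simple
    addition. Returns None when no subset satisfies the constraint.
    """
    best: Optional[Tuple[Candidate, ...]] = None
    best_cost = float("inf")
    for size in range(1, len(candidates) + 1):
        for subset in combinations(candidates, size):
            total_rotation = sum(c.rotation_deg for c in subset)
            if total_rotation < min_total_rotation:
                continue  # does not cover a full turn around the model
            cost = sum(c.error for c in subset) + len(subset)
            if cost < best_cost:
                best_cost = cost
                best = subset
    return best


if __name__ == "__main__":
    images = [
        Candidate("a", 0.1, 120.0),
        Candidate("b", 0.2, 120.0),
        Candidate("c", 0.9, 120.0),
        Candidate("d", 0.1, 60.0),
        Candidate("e", 0.1, 180.0),
        Candidate("f", 0.3, 180.0),
    ]
    chosen = select_image_set(images)
    print([c.name for c in chosen] if chosen else None)
```

Under these assumptions, counting each image as a cost of one favors small image sets, while the rotation constraint keeps the selected viewpoints covering a full turn around the model. A different weighting between error and image count would change which set is selected.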
