Character translation and display method and device based on augmented reality and electronic equipment

An augmented-reality text translation and display method, applied in the field of computer vision. It addresses the low convenience and complex operation of the photo-translation process, and the difficulty of replacing the original text with its translation accurately and intuitively in one-to-one correspondence, thereby improving user-friendliness and convenience and producing a more realistic display effect.

Examples

Embodiment 1

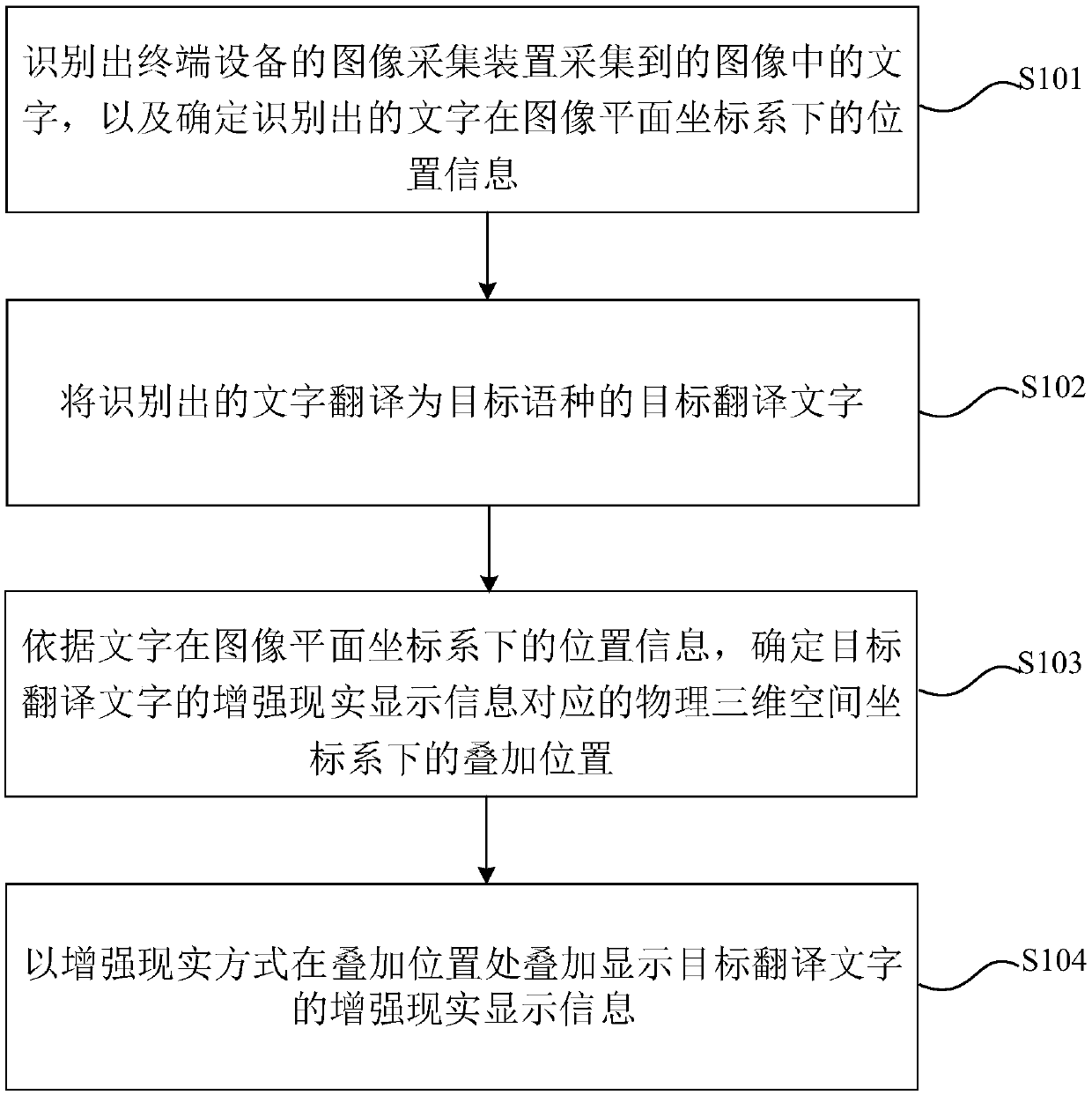

[0058] This embodiment of the present application provides a text translation and display method based on augmented reality. As shown in Figure 1, the method includes:

[0059] Step S101: recognizing the text in the image captured by the image acquisition device of the terminal device, and determining the position information of the recognized text in the image plane coordinate system.

[0060] The image acquisition device can capture images in real time, and the text in them is recognized as they are captured. In a practical scenario, the user starts the camera of the mobile device and aims the viewfinder at the text; no separate shooting operation is needed. The text in the image is recognized automatically, and its position information in the image plane coordinate system is determined at the same time, which meets users' need for instant capture-and-translate in different scenarios.
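The output of step S101 can be pictured as a list of text items, each carrying its position in the image plane. The following is a minimal sketch under stated assumptions: the `RecognizedText` class, its pixel-based bounding-box fields, and the `recognize_frame` helper are hypothetical names chosen for illustration, and the OCR routine is supplied by the caller rather than taken from the application.

```python
from dataclasses import dataclass
from typing import Any, Callable, Iterable, List, Tuple


@dataclass(frozen=True)
class RecognizedText:
    """One recognized piece of text and its place in the image plane.

    Assumption: position is stored as an axis-aligned box in pixels,
    with the origin at the top-left corner of the image.
    """
    text: str
    x: float       # left edge
    y: float       # top edge
    width: float
    height: float

    def center(self) -> Tuple[float, float]:
        """Center of the bounding box in image plane coordinates."""
        return (self.x + self.width / 2, self.y + self.height / 2)


def recognize_frame(
    frame: Any,
    ocr: Callable[[Any], Iterable[Tuple[str, float, float, float, float]]],
) -> List[RecognizedText]:
    """Run a caller-supplied OCR function on one camera frame.

    `ocr` is a placeholder: it must yield (text, x, y, width, height) tuples.
    """
    return [RecognizedText(*item) for item in ocr(frame)]


# Usage with a stand-in OCR function that "finds" one word.
def fake_ocr(frame: Any):
    return [("EXIT", 10, 20, 40, 10)]


items = recognize_frame(None, fake_ocr)
```

Keeping the position next to the text in one record means later steps can work from the same item without re-running recognition.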

[0061] Step ...

Embodiment 2

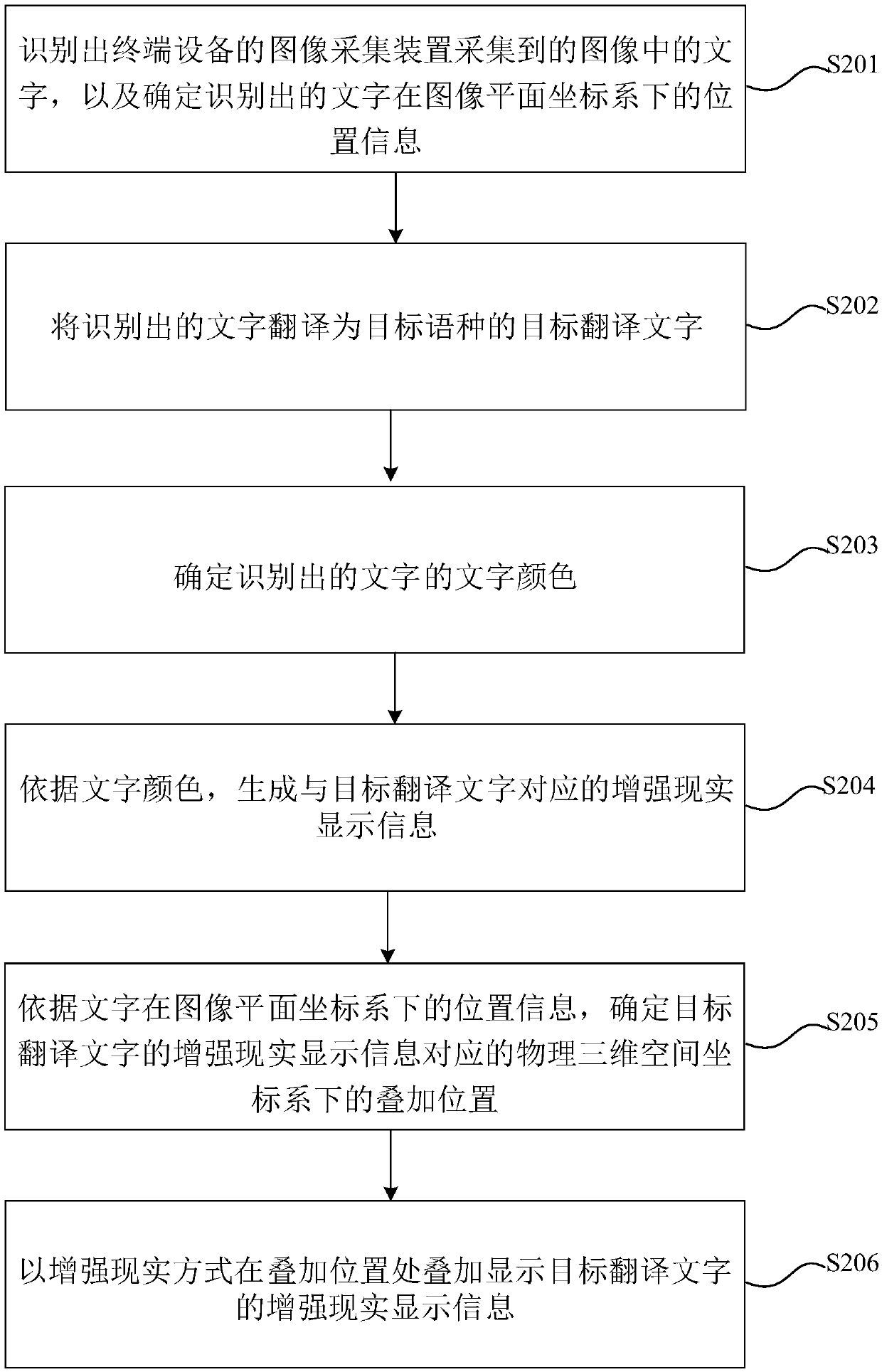

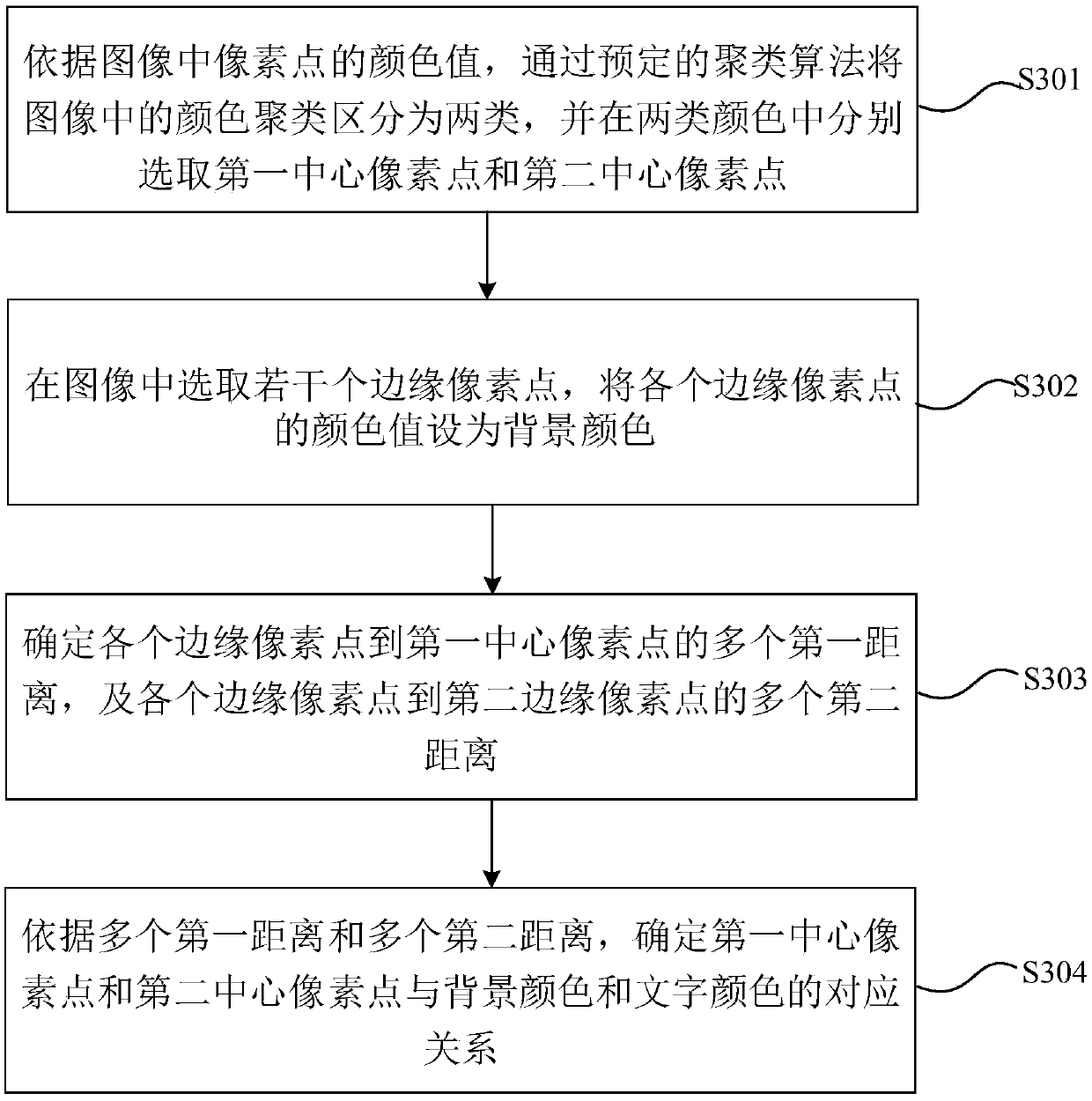

[0069] This embodiment of the present application provides another possible implementation. On the basis of Embodiment 1, it further includes the method shown in Embodiment 2, wherein recognizing, in step S101, the text in the image captured by the image acquisition device of the terminal device includes:

[0070] Recognizing the text in the image captured by the image acquisition device of the terminal device by means of an optical character recognition (OCR) algorithm.

[0071] Further, recognizing the text in the image captured by the image acquisition device of the terminal device by means of the OCR algorithm specifically includes:

[0072] binarizing the image;

[0073] dividing the binarized image into multiple text blocks;

[0074] extracting feature information of each text block, matching the extracted feature information against a feature database, and taking the matching result as the recognition result of that text block;
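The three operations above (binarize, divide into blocks, extract and match features) can be sketched in a few lines. This is an illustrative toy, not the application's algorithm: the fixed threshold, the split on empty pixel columns, the use of raw block pixels as the feature vector, and the Hamming-distance match are all assumptions chosen to keep the example small, and every function name is hypothetical.

```python
def binarize(gray, threshold=128):
    """Map a grayscale image (rows of 0-255 ints) to 0/1, where 1 means dark ink."""
    return [[1 if px < threshold else 0 for px in row] for row in gray]


def split_into_blocks(binary):
    """Split on ink-free columns; returns (start, end) column ranges, end exclusive."""
    width = len(binary[0])
    has_ink = [any(row[c] for row in binary) for c in range(width)]
    blocks, start = [], None
    for c, ink in enumerate(has_ink):
        if ink and start is None:
            start = c                      # a block begins
        elif not ink and start is not None:
            blocks.append((start, c))      # a block ends at the first empty column
            start = None
    if start is not None:
        blocks.append((start, width))
    return blocks


def extract_features(binary, block):
    """Assumed feature vector: the block's pixels flattened row by row."""
    start, end = block
    return tuple(px for row in binary for px in row[start:end])


def match(features, feature_db):
    """Return the label whose template differs from `features` in the fewest positions."""
    best_label, best_dist = None, None
    for label, template in feature_db.items():
        if len(template) != len(features):
            continue                       # different block size, not comparable here
        dist = sum(a != b for a, b in zip(features, template))
        if best_dist is None or dist < best_dist:
            best_label, best_dist = label, dist
    return best_label


def recognize(gray, feature_db):
    """Binarize, split into blocks, then match each block against the database."""
    binary = binarize(gray)
    return [match(extract_features(binary, b), feature_db)
            for b in split_into_blocks(binary)]


# Toy 3-row image: a 1-pixel-wide "I" and a 2-pixel-wide "L" (0 = dark, 255 = white).
IMAGE = [
    [255, 0, 255, 0, 255, 255],
    [255, 0, 255, 0, 255, 255],
    [255, 0, 255, 0, 0, 255],
]
FEATURE_DB = {"I": (1, 1, 1), "L": (1, 0, 1, 0, 1, 1)}
result = recognize(IMAGE, FEATURE_DB)
```

The column ranges returned by `split_into_blocks` also give each block's horizontal extent in the image plane, which is the kind of position information step S101 calls for.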

[0075] ...

Embodiment 3

[0120] The present application provides a text translation and display device 40 based on augmented reality. As shown in Figure 4, the device may include an image recognition module 401, a text translation module 402, an overlay position determination module 403, and a display module 404, wherein:

[0121] The image recognition module 401 is configured to recognize the text in the image captured by the image acquisition device of the terminal device, and to determine the position information of the recognized text in the image plane coordinate system.

[0122] The text translation module 402 is configured to translate the recognized text into a target translated text in the target language.

[0123] The overlay position determination module 403 is configured to determine the overlay position, in the physical three-dimensional space coordinate system, corresponding to the augmented reality display information of the target translated text according to the posi...
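As a rough picture of how the four modules of device 40 might be wired together, the sketch below treats each module as a caller-supplied function. It assumes a simple per-frame flow (recognize, translate, locate, display); the class name `ARTranslationDevice`, the method `process_frame`, and all function signatures are hypothetical. How module 403 maps an image-plane position to a three-dimensional overlay position is not specified in the text above, so it is left as an injected placeholder rather than implemented.

```python
from dataclasses import dataclass
from typing import Any, Callable, List, Tuple

ImagePos = Tuple[float, float]           # position in the image plane
WorldPos = Tuple[float, float, float]    # position in physical 3D space


@dataclass
class ARTranslationDevice:
    """Hypothetical wiring of modules 401 to 404 as plain callables."""
    recognize: Callable[[Any], List[Tuple[str, ImagePos]]]   # module 401
    translate: Callable[[str], str]                          # module 402
    locate_overlay: Callable[[ImagePos], WorldPos]           # module 403 (placeholder)
    display: Callable[[str, WorldPos], None]                 # module 404 (assumed role)

    def process_frame(self, frame: Any) -> List[Tuple[str, WorldPos]]:
        """Run one frame through the modules and return what was displayed."""
        shown = []
        for text, image_pos in self.recognize(frame):
            translated = self.translate(text)
            world_pos = self.locate_overlay(image_pos)
            self.display(translated, world_pos)
            shown.append((translated, world_pos))
        return shown


# Usage with stand-in modules; none of these stubs reflect a real implementation.
calls: List[Tuple[str, WorldPos]] = []
device = ARTranslationDevice(
    recognize=lambda frame: [("出口", (10.0, 20.0))],
    translate=lambda text: {"出口": "Exit"}[text],
    locate_overlay=lambda pos: (pos[0], pos[1], 1.0),   # dummy mapping, not a camera model
    display=lambda text, pos: calls.append((text, pos)),
)
shown = device.process_frame(frame=None)
```

Passing the modules in as functions keeps the sketch independent of any particular OCR engine, translation service, or AR framework.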
