A distribution network overcurrent protection method based on deep reinforcement learning

The technology combines reinforcement learning with overcurrent protection and is applied in neural learning methods, automatic-disconnection emergency protection devices, emergency protection circuit devices, and related fields. Its stated effects include reducing the correlation between samples.

Embodiment

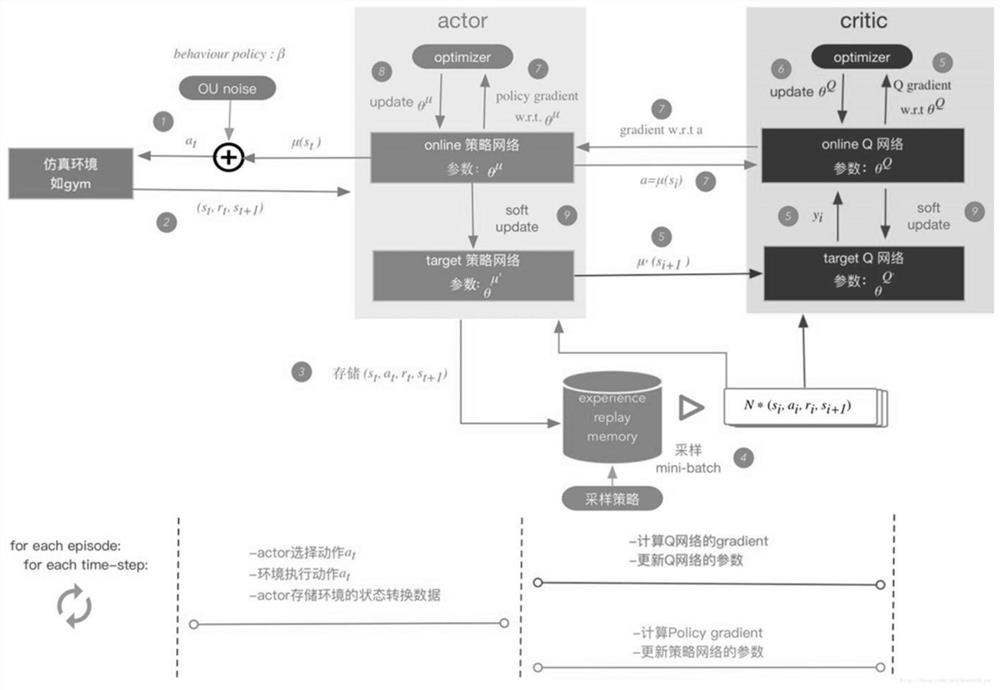

[0071] The invention addresses the maloperation and failure to operate of line overcurrent protection caused by the overly complex distribution of distributed power sources. It formulates this problem as a Markov decision process (MDP), introduces a deep reinforcement learning mechanism, and has intelligent agents interact continuously with the grid environment to obtain the optimal dynamic threshold setting strategy.
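To make the MDP framing concrete, the following is a minimal sketch under stated assumptions, not the formulation used in the invention. The state features, the reward values, and the names `State`, `reward`, and `best_threshold` are all hypothetical, and an exhaustive search over candidate thresholds stands in for the learned policy.

```python
from typing import Iterable, NamedTuple, Sequence


class State(NamedTuple):
    """Hypothetical observation for one simulated operating condition."""
    measured_current: float  # per-unit line current seen by the relay (assumed feature)
    is_fault: bool           # ground truth, available only in simulation (assumed)


def reward(state: State, threshold: float) -> float:
    """Assumed reward: penalize both failure modes named in the text."""
    trips = state.measured_current > threshold
    if state.is_fault and not trips:
        return -1.0  # failure to operate during a fault
    if trips and not state.is_fault:
        return -1.0  # maloperation under normal load
    return 1.0       # correct decision


def best_threshold(states: Sequence[State], candidates: Iterable[float]) -> float:
    """Exhaustive stand-in for a learned policy: pick the candidate
    threshold with the highest total reward over the sampled states."""
    return max(candidates, key=lambda th: sum(reward(s, th) for s in states))
```

With two load samples at 1.0 and 1.4 per unit and two fault samples at 3.0 and 5.0 per unit, a candidate threshold of 2.0 separates the two groups and is the one selected from `[0.5, 2.0, 6.0]`. A reinforcement learning agent would learn such a choice from interaction rather than by enumeration.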

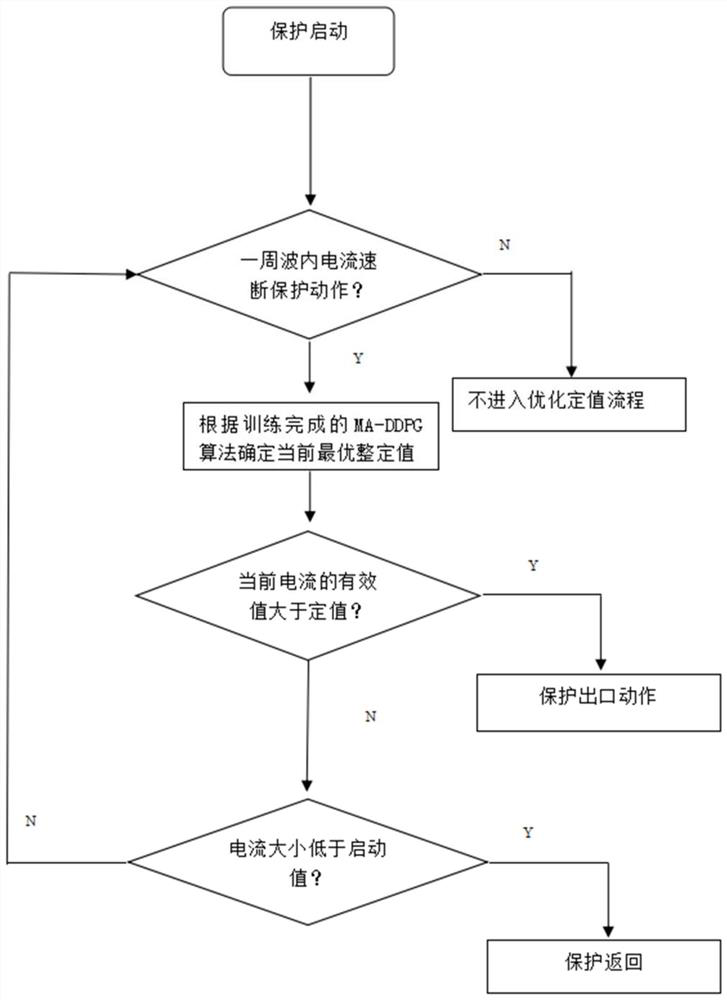

[0072] Figure 1 shows a flow chart of the distribution network overcurrent protection method based on deep reinforcement learning. The method includes the following steps:

[0073] (1) Start the protection and judge whether the current quick-break protection (instantaneous overcurrent protection) operates within one cycle:

[0074] If the current quick-break protection does not operate, the setting does not need to be optimized;

[0075] If the current quick-break protection operates, optimize the setting value;

[0076] (2) Determine the optimal setting value according to the MA-DDPG algorithm...
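The branching in step (1) can be sketched as a small gate in front of the optimizer. This is an illustrative sketch only: `update_setting` and its `optimizer` argument are hypothetical names, and the callable merely stands in for the MA-DDPG procedure of step (2), whose details are not given here.

```python
from typing import Callable


def update_setting(
    current_setting: float,
    protection_operated: bool,
    optimizer: Callable[[float], float],
) -> float:
    """Return the setting value to use after one protection cycle.

    protection_operated: whether the current quick-break protection
    operated within the cycle (step 1).
    optimizer: hypothetical stand-in for the step (2) setting search.
    """
    if not protection_operated:
        # No operation within the cycle: keep the existing setting.
        return current_setting
    # Operation detected: hand the setting over to the optimizer.
    return optimizer(current_setting)
```

Passing the optimizer as a callable keeps the gate independent of how the new setting is computed, so the same check can wrap any setting-search routine.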
