Target classification and positioning method based on network supervision

A target classification and positioning method, applied in the field of network-supervised target classification and positioning, that addresses the problems of weakly supervised learning and matching performance. It avoids network over-fitting, gives good positioning performance, and improves fine-grained classification performance.


Examples

Embodiment 1

[0102] 1. Database and sample classification

[0103] When the present invention is used for network-supervised target classification and positioning, no data set is needed in the application stage. However, after the classification and positioning networks have been trained, a stable test set is required to verify the classification accuracy of the classification network and the positioning accuracy of the positioning network; the training set is in turn limited by the test set. Among the existing data sets for weakly supervised classification and positioning tasks, the CUB_200_2011 data set meets the needs of an experimental test set well.
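The verification step described above (classification accuracy of the classification network, positioning accuracy of the positioning network) can be sketched as follows. The source does not define either metric, so this is a minimal sketch assuming common weakly supervised localization conventions: top-1 classification accuracy, a predicted box counted as correctly positioned when its intersection-over-union (IoU) with the ground-truth box is at least 0.5, and a combined "top-1 localization" score that requires both. The `(x1, y1, x2, y2)` box format and the names `iou` and `evaluate` are hypothetical.

```python
from typing import Dict, Sequence, Tuple

# Hypothetical box convention: (x1, y1, x2, y2), with x2 > x1 and y2 > y1.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def evaluate(
    pred_labels: Sequence[int],
    true_labels: Sequence[int],
    pred_boxes: Sequence[Box],
    true_boxes: Sequence[Box],
    iou_thresh: float = 0.5,  # assumed threshold, not stated in the source
) -> Dict[str, float]:
    """Return classification, positioning and combined accuracy over a test set."""
    n = len(true_labels)
    if n == 0 or not (len(pred_labels) == len(pred_boxes) == len(true_boxes) == n):
        raise ValueError("inputs must be non-empty and of equal length")
    cls_ok = [p == t for p, t in zip(pred_labels, true_labels)]
    loc_ok = [iou(pb, tb) >= iou_thresh for pb, tb in zip(pred_boxes, true_boxes)]
    return {
        "cls_acc": sum(cls_ok) / n,  # classification network accuracy
        "loc_acc": sum(loc_ok) / n,  # positioning accuracy (box only)
        "top1_loc": sum(c and l for c, l in zip(cls_ok, loc_ok)) / n,  # both correct
    }
```

Separating `loc_acc` from `top1_loc` keeps the positioning network's accuracy measurable even when the classifier is wrong, which matches the text's distinction between the two networks.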

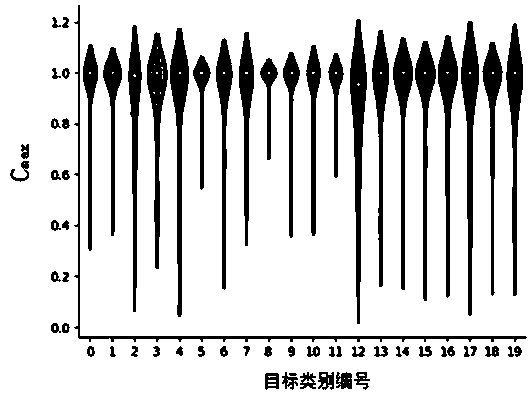

[0104] As shown in Figure 8, the CUB_200_2011 data set is an improved version of the CUB_200 data set. It contains images of 200 bird species, 11,788 images in total, of which 5,794 form the test set, and it can be used to evaluate fine-grained classification tasks; each image in the test set has ...
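With 11,788 images in total and 5,794 in the test set, 11,788 - 5,794 = 5,994 images remain for training. A minimal sketch of checking this split, assuming the standard CUB_200_2011 release layout in which `train_test_split.txt` lists one `<image_id> <is_training_image>` pair per line (1 = training, 0 = test); the function name `count_split` is hypothetical.

```python
from typing import Iterable, Tuple


def count_split(lines: Iterable[str]) -> Tuple[int, int]:
    """Count training and test images from '<image_id> <is_training_image>' lines."""
    n_train = n_test = 0
    for line in lines:
        line = line.strip()
        if not line:
            continue  # tolerate blank lines, e.g. a trailing newline
        _image_id, flag = line.split()
        if flag == "1":
            n_train += 1
        elif flag == "0":
            n_test += 1
        else:
            raise ValueError(f"unexpected split flag: {flag!r}")
    return n_train, n_test
```

Usage, assuming the data set is unpacked locally: `with open("CUB_200_2011/train_test_split.txt") as f: n_train, n_test = count_split(f)`. If the file matches the figures above, `n_test` should be 5,794 and `n_train + n_test` should be 11,788.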

