Unmanned aerial vehicle path planning method based on transfer learning strategy deep Q-network

A transfer learning and path planning technology, applied in two-dimensional position/channel control, vehicle position/route/altitude control, non-electric variable control, etc., with the effect of improving the speed of convergence and shortening the time spent


Embodiment Construction

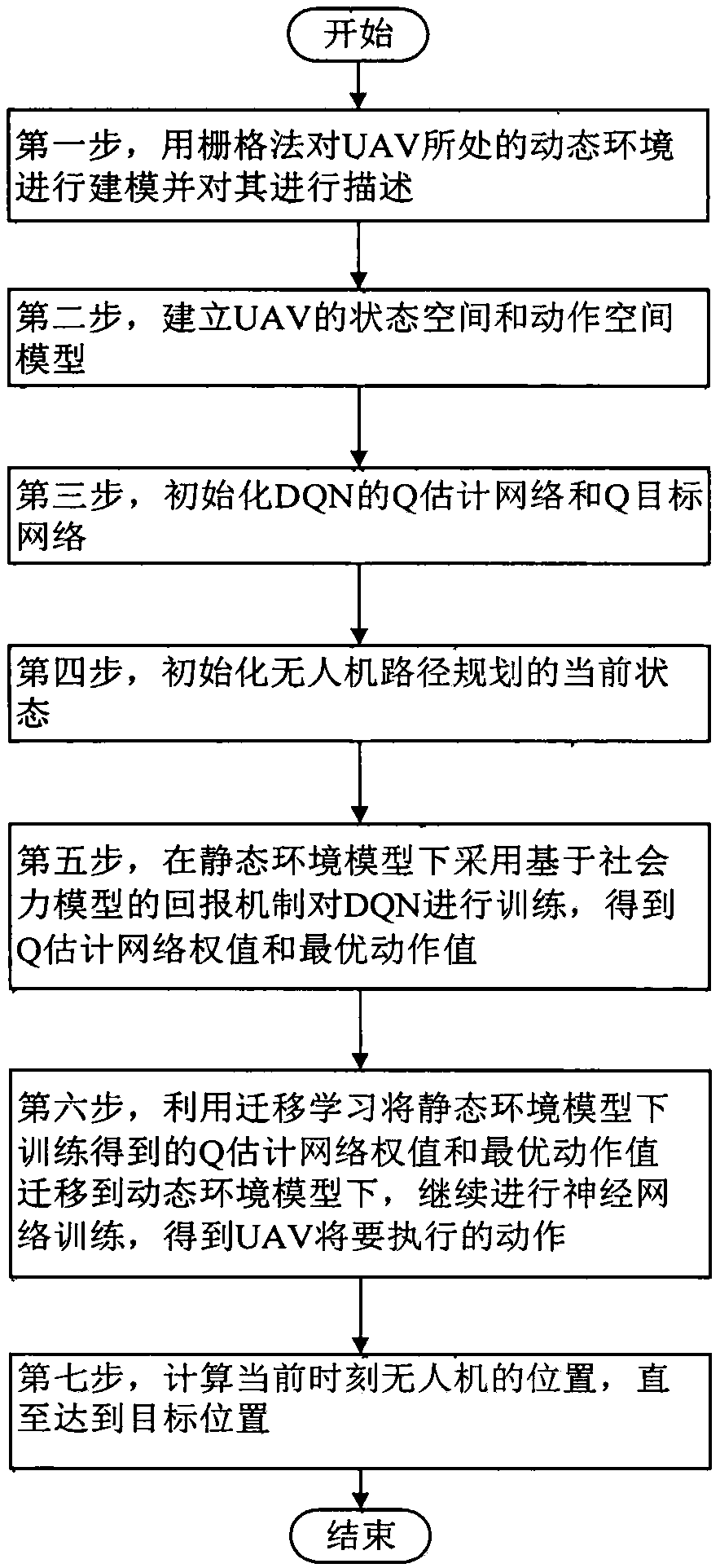

[0026] The technical solutions of the present invention are described in detail with reference to the accompanying drawings.

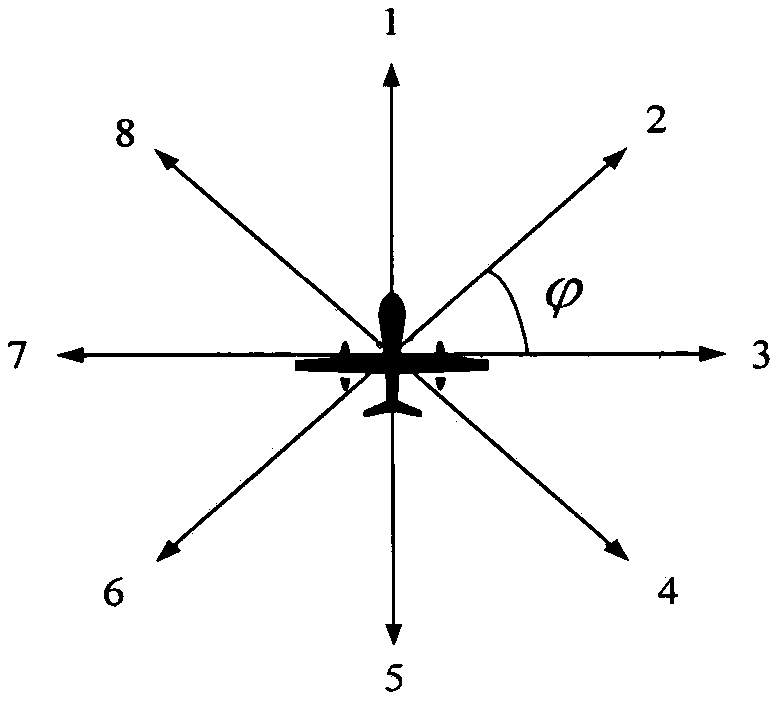

[0027] The UAV path planning method of the present invention, based on a transfer learning strategy deep Q-network, specifically includes the following steps:

[0028] Step 1, use the grid method to model and describe the dynamic environment in which the UAV is located.

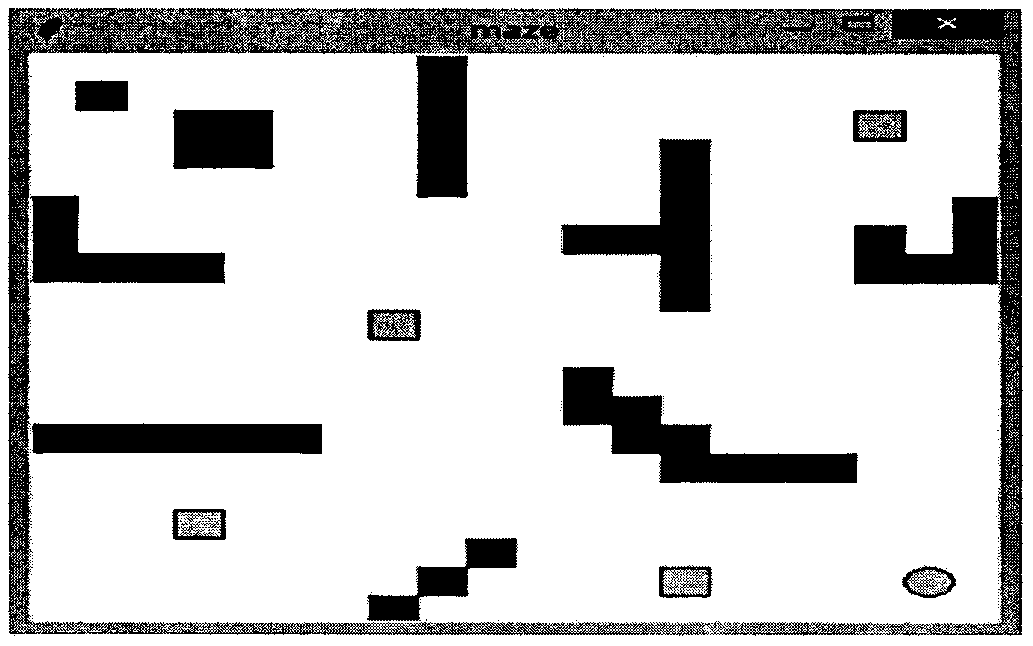

[0029] (1.1) The dynamic environment in which the UAV is located is a 20x20 grid map, as shown in Figure 2. The light pink squares are movable obstacles. The black cells are immovable obstacles, namely an L-shaped wall, a horizontal wall, a vertical wall, a T-shaped wall, an inclined wall, a square wall and an irregular wall, which are used to test the obstacle avoidance performance of the agent. The yellow circle is the target position and the red square is the starting position of the agent; both the target position and the starting position can be randomly generated. When the agent moves t...
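The grid model described in (1.1) can be illustrated with a minimal Python sketch. It is not the patent's implementation: the cell codes, the `make_grid` helper, and the example wall and obstacle coordinates are all hypothetical, chosen only to show a 20x20 map holding immovable obstacles, movable obstacles, and a randomly generated start and target.

```python
import random

GRID_SIZE = 20  # the description specifies a 20x20 grid map

# Cell codes are hypothetical labels chosen for this sketch.
FREE, STATIC_OBSTACLE, MOVABLE_OBSTACLE, START, TARGET = 0, 1, 2, 3, 4


def make_grid(static_cells, movable_cells, rng):
    """Build the grid and place a random start and target on free cells."""
    grid = [[FREE] * GRID_SIZE for _ in range(GRID_SIZE)]
    for r, c in static_cells:
        grid[r][c] = STATIC_OBSTACLE
    for r, c in movable_cells:
        grid[r][c] = MOVABLE_OBSTACLE
    # Collect every cell that is still free after placing obstacles.
    free = [(r, c) for r in range(GRID_SIZE) for c in range(GRID_SIZE)
            if grid[r][c] == FREE]
    start, target = rng.sample(free, 2)  # two distinct free cells
    grid[start[0]][start[1]] = START
    grid[target[0]][target[1]] = TARGET
    return grid, start, target


# Illustrative layout only: one L-shaped wall and two movable obstacles.
l_wall = [(5, c) for c in range(3, 8)] + [(r, 3) for r in range(6, 10)]
movable = [(12, 12), (15, 4)]
grid, start, target = make_grid(l_wall, movable, random.Random(0))
```

Passing a seeded `random.Random` keeps the start and target reproducible between runs; an unseeded generator would give a fresh random pair each time, which is closer to the random generation the description mentions.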
