Many-to-many speaker conversion method based on Perceptual STARGAN

A speaker conversion method, applicable to speech analysis, speech recognition, instruments, and related fields, that addresses problems such as network degradation.


AI Technical Summary

Problems solved by technology

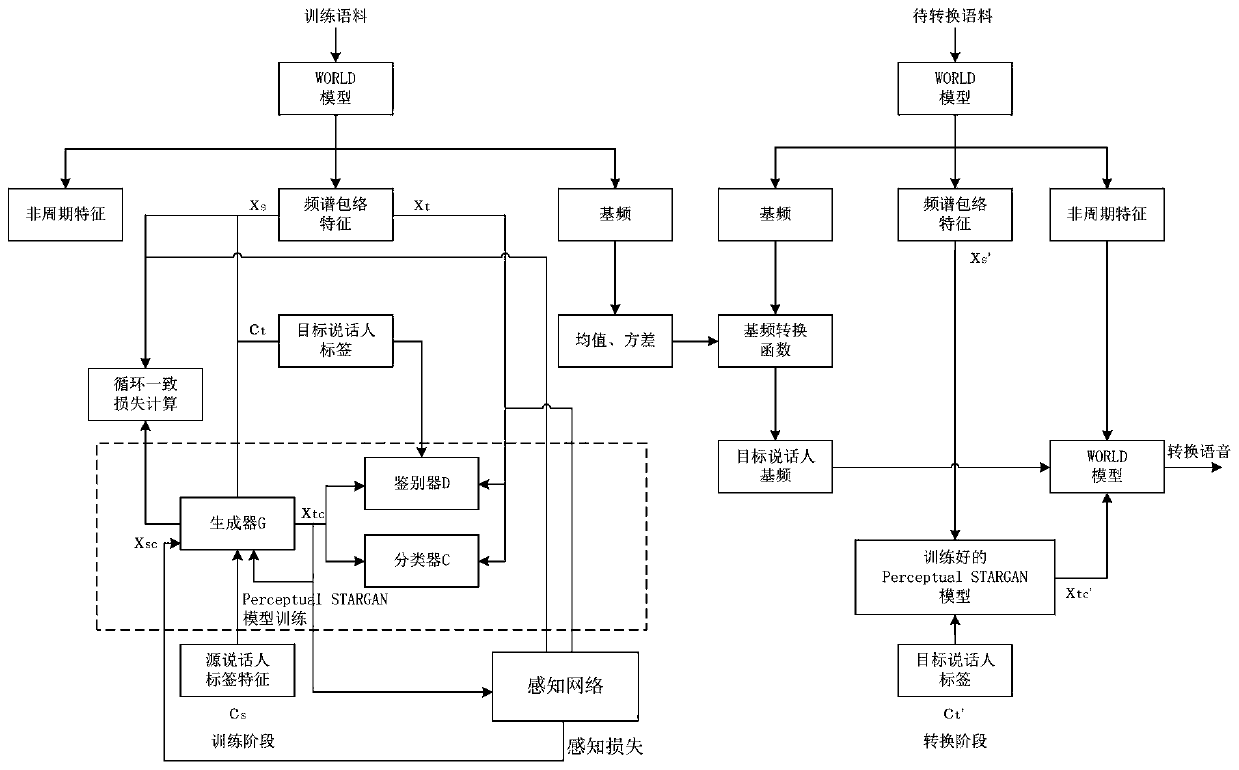

Method used

Image

Examples

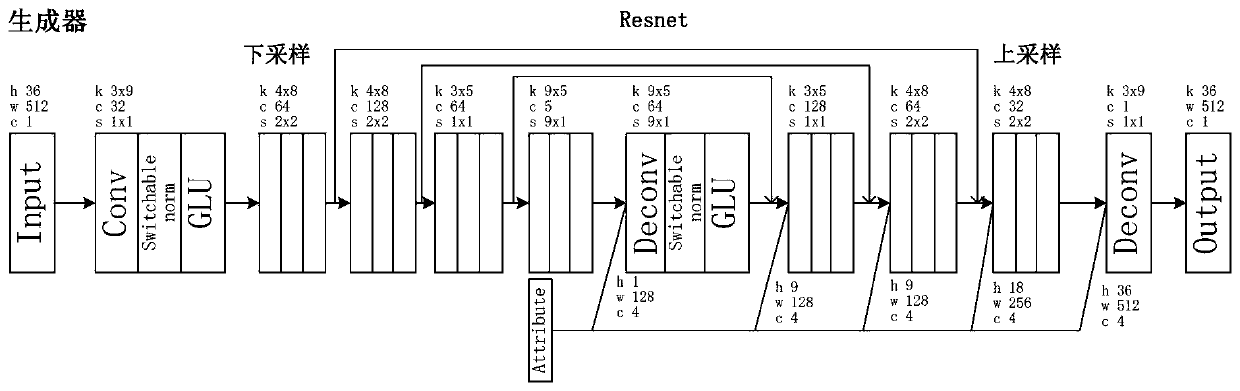

Embodiment Construction

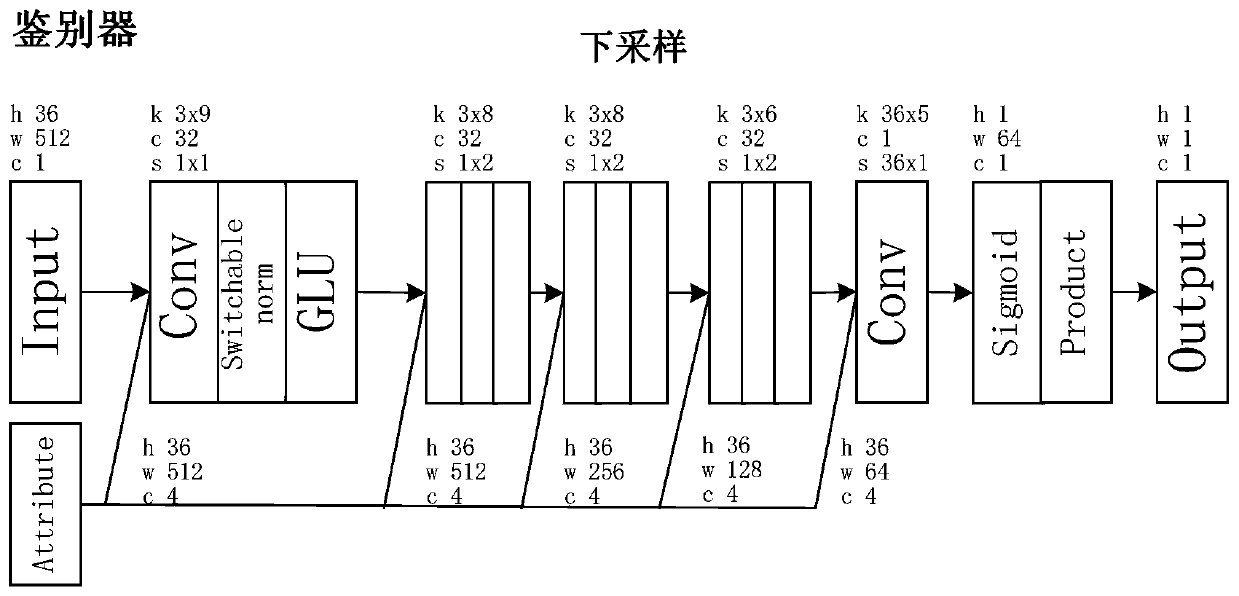

[0070] In the image field, a perceptual network is used to calculate a perceptual loss, which helps the converted image retain finer details and edge features. The present invention applies this idea to the field of speech conversion. In the image field, however, a pre-trained network extracts the high-dimensional image information, and no general-purpose pre-trained network exists in the speech field. The present invention therefore uses the discriminator itself as the perceptual network for calculating the perceptual loss. Doing so extracts high-dimensional information from the spectrum, which improves the model's ability to capture the semantic features and speaker-individuality features of the spectrum and improves the quality of the generated speech. The present invention regards part of the network structure of the discriminator D as a perceptual network. Using this perceptual network to calculate the perceptual loss of the deep sem...
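The idea of reusing part of the discriminator as a perceptual network can be illustrated with a minimal sketch. Everything below is an assumption made for illustration: the source paragraph is truncated, so the layers that form the perceptual network, the distance measure, and the inputs being compared are not stated. `ToyDiscriminator`, `features`, and `perceptual_loss` are hypothetical names, the weights are random rather than trained, and the mean absolute (L1) difference is one common choice, not necessarily the one used in the patent.

```python
import random


def leaky_relu(x, slope=0.2):
    return x if x > 0 else slope * x


def dense(vec, weights, bias):
    # One fully connected layer followed by a leaky ReLU activation.
    return [leaky_relu(sum(w * v for w, v in zip(row, vec)) + b)
            for row, b in zip(weights, bias)]


class ToyDiscriminator:
    """Hypothetical stand-in for D: two hidden layers plus a real/fake score."""

    def __init__(self, n_in, n_hidden, seed=0):
        rng = random.Random(seed)

        def init(rows, cols):
            return [[rng.uniform(-0.5, 0.5) for _ in range(cols)]
                    for _ in range(rows)]

        self.w1, self.b1 = init(n_hidden, n_in), [0.0] * n_hidden
        self.w2, self.b2 = init(n_hidden, n_hidden), [0.0] * n_hidden
        self.w_out = init(1, n_hidden)

    def features(self, frame):
        # The "perceptual network": only D's hidden layers, without the output head.
        h1 = dense(frame, self.w1, self.b1)
        return dense(h1, self.w2, self.b2)

    def score(self, frame):
        # Ordinary adversarial output, computed on top of the shared features.
        h = self.features(frame)
        return sum(w * v for w, v in zip(self.w_out[0], h))


def perceptual_loss(disc, reference_frame, generated_frame):
    # Mean absolute difference between deep features (L1 is an assumption).
    f_ref = disc.features(reference_frame)
    f_gen = disc.features(generated_frame)
    return sum(abs(a - b) for a, b in zip(f_ref, f_gen)) / len(f_ref)


disc = ToyDiscriminator(n_in=4, n_hidden=3)
reference = [0.1, 0.4, -0.2, 0.7]   # toy spectral frame
generated = [0.0, 0.5, -0.1, 0.6]   # toy generator output
loss_same = perceptual_loss(disc, reference, reference)
loss_diff = perceptual_loss(disc, reference, generated)
```

Because the features come from the discriminator's own hidden layers, this sketch needs no separately pre-trained speech network, which is the motivation the paragraph gives. In a real model the frames would be spectral feature sequences and the loss would be added to the generator objective with some weighting, neither of which is specified in the visible text.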
