Automatic text classification method based on BERT and feature fusion

An automatic classification and feature fusion technology, applied in the field of supervised text classification and deep learning, that addresses problems such as static word vectors that do not change with context and narrow information coverage, and that improves classification accuracy and encoding ability.

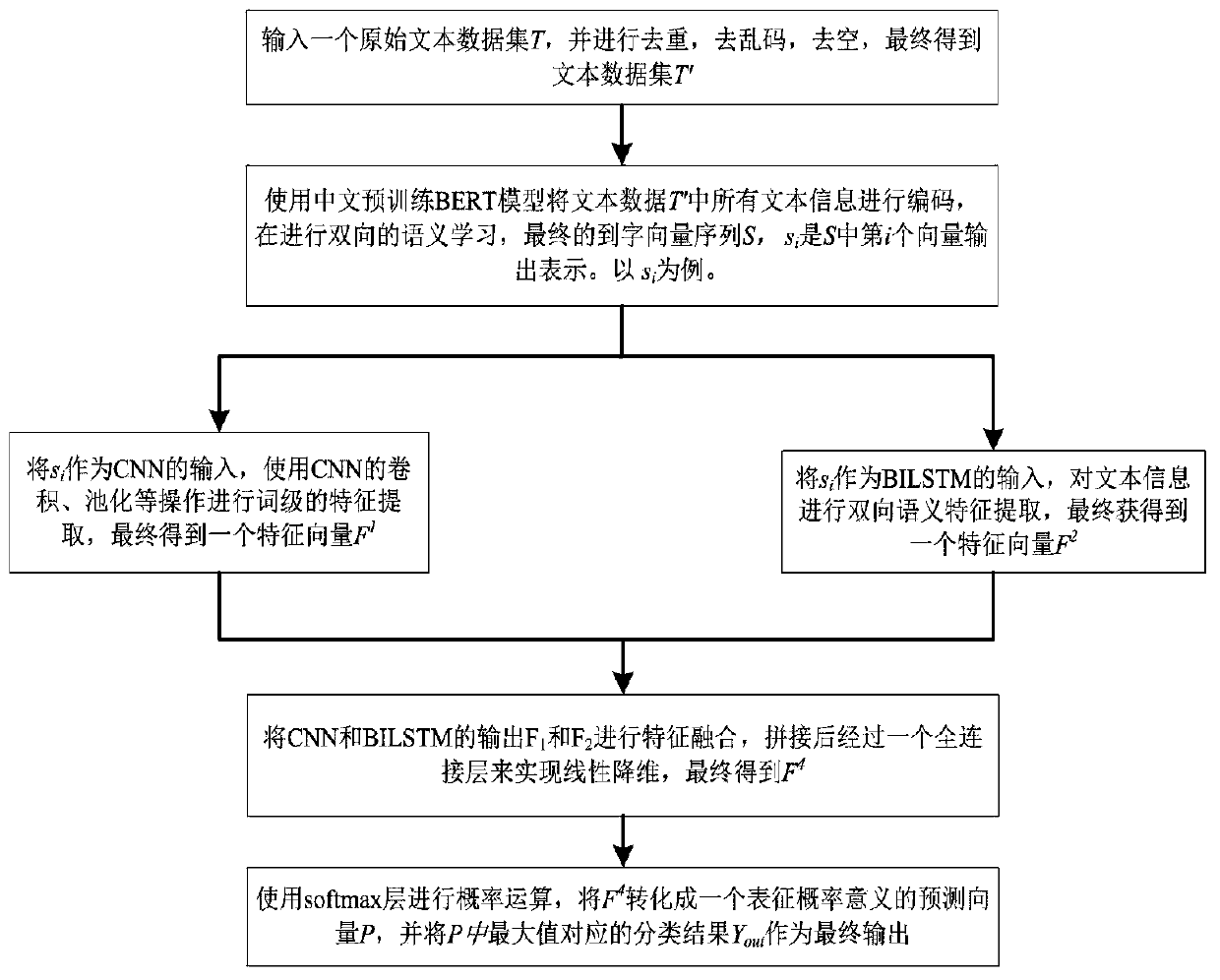

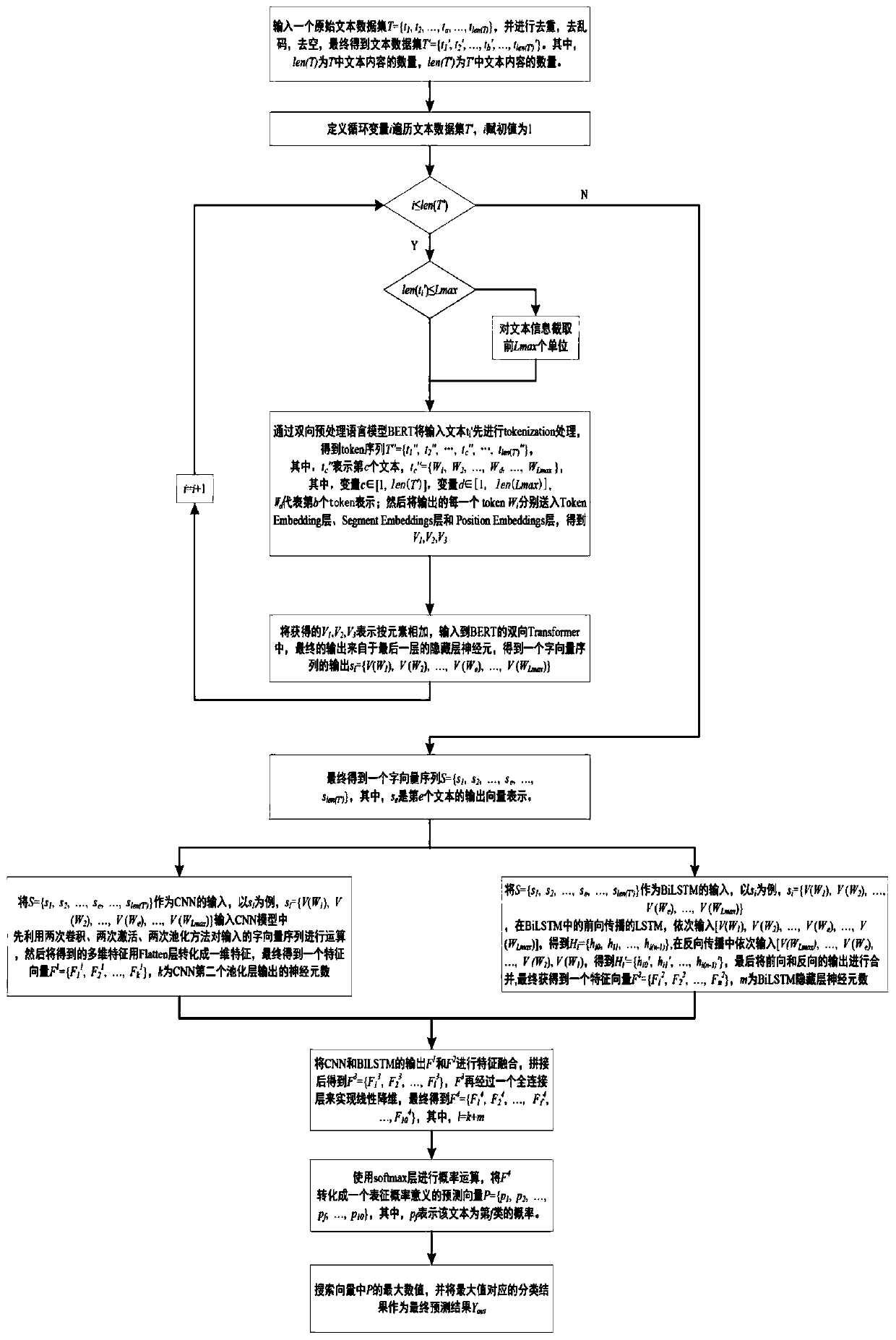

Embodiment Construction

[0031] BERT (Bidirectional Encoder Representations from Transformers) language model: BERT uses a masked language model objective to make the language model bidirectional, which demonstrates the importance of bidirectionality for language-representation pre-training. BERT is a truly bidirectional language model: the representation of each word can draw on both its left and right context at the same time. BERT was the first fine-tuning-based model to achieve the best results on both sentence-level and token-level natural language tasks, showing that pre-trained representations reduce the need to design task-specific model architectures. BERT achieved the best results on 11 natural language processing tasks, and its extensive ablation studies showed that bidirectionality is an important innovation. The BERT language model converts text into dynamic word vectors and enhances the semantic inf...
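The masked language model objective mentioned in this paragraph can be illustrated with a small sketch. It is not taken from the patent: the 15% selection rate and the 80/10/10 replacement split follow the published BERT paper, while the function name `mask_tokens`, its parameters, and the per-token independent sampling are simplifying assumptions made here for illustration. The sketch only prepares training inputs and labels; it does not train or call a BERT model.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, vocab, mask_prob=0.15, seed=None):
    """Prepare one masked-language-model training example (illustrative only).

    Returns (masked_tokens, labels). labels[i] is the original token at each
    position selected for prediction and None everywhere else.
    """
    rng = random.Random(seed)
    masked = list(tokens)
    labels = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        # Assumption: each position is selected independently with mask_prob.
        if rng.random() >= mask_prob:
            continue
        labels[i] = tok  # the model must recover this token from both sides
        roll = rng.random()
        if roll < 0.8:
            masked[i] = MASK               # 80%: replace with the mask symbol
        elif roll < 0.9:
            masked[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: leave the original token in place
    return masked, labels


if __name__ == "__main__":
    sentence = "the model reads context on both sides of each word".split()
    vocab = sorted(set(sentence))  # hypothetical toy vocabulary
    masked, labels = mask_tokens(sentence, vocab, mask_prob=0.3, seed=1)
    print(masked)
    print(labels)
```

Because a selected token must be predicted from the unmasked tokens on both its left and its right, this objective is what lets the encoder use context from both directions at once.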
