A multi-modal customer service automatic reply method and system

A multi-modal automatic reply technology, applied in fields such as character and pattern recognition, instruments, and computing, that addresses problems such as ignoring attribute information and produces replies that better match what users want.

Examples

Embodiment 1

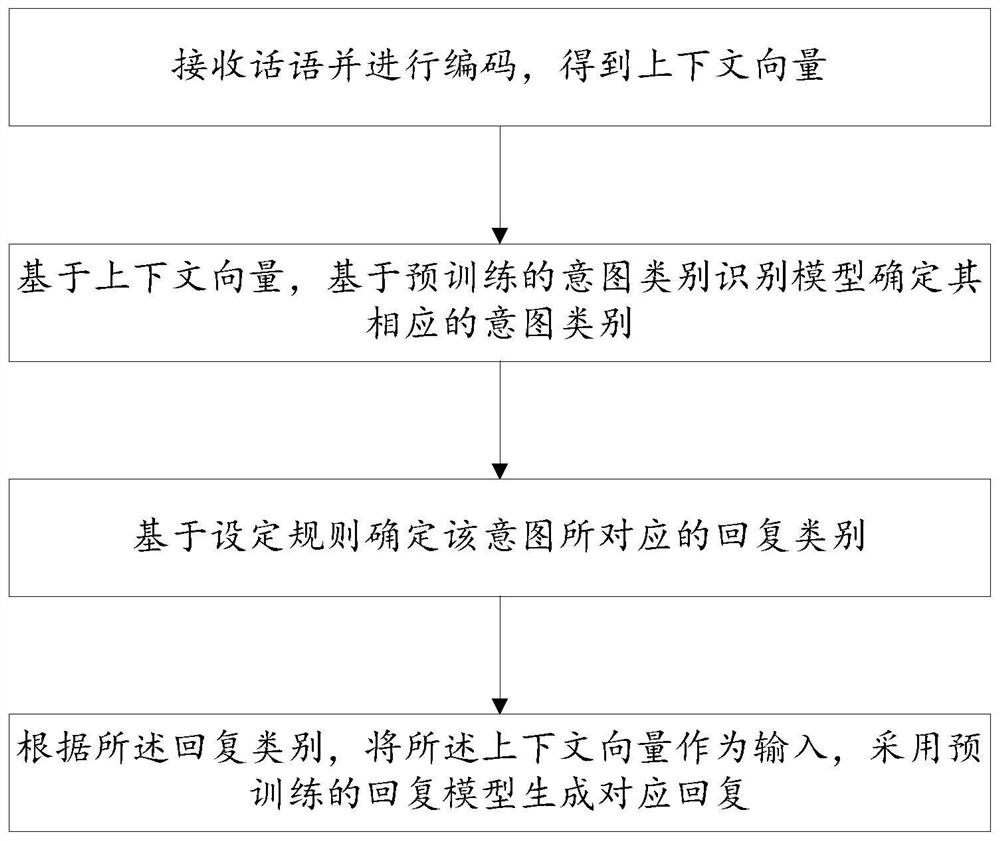

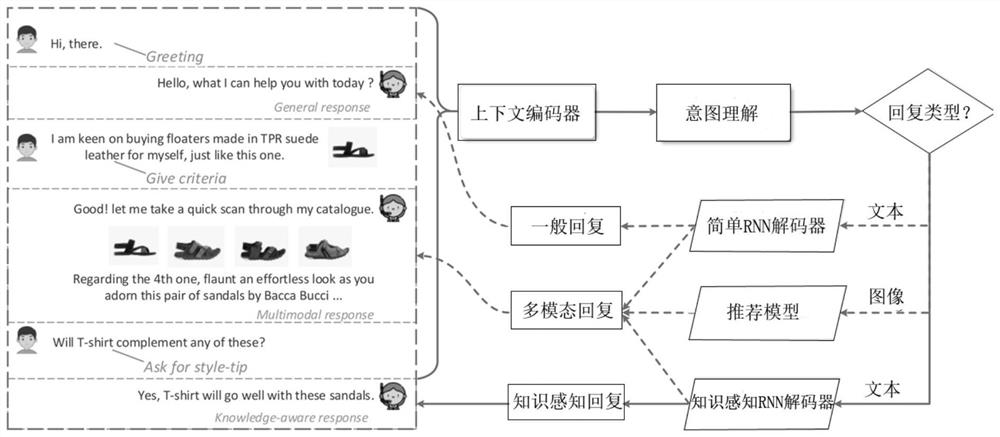

[0035] This embodiment discloses a multi-modal dialogue system with an adaptive decoder. It includes the following steps:

[0036] Step 1: Receive the dialogue and encode it to get the context vector;

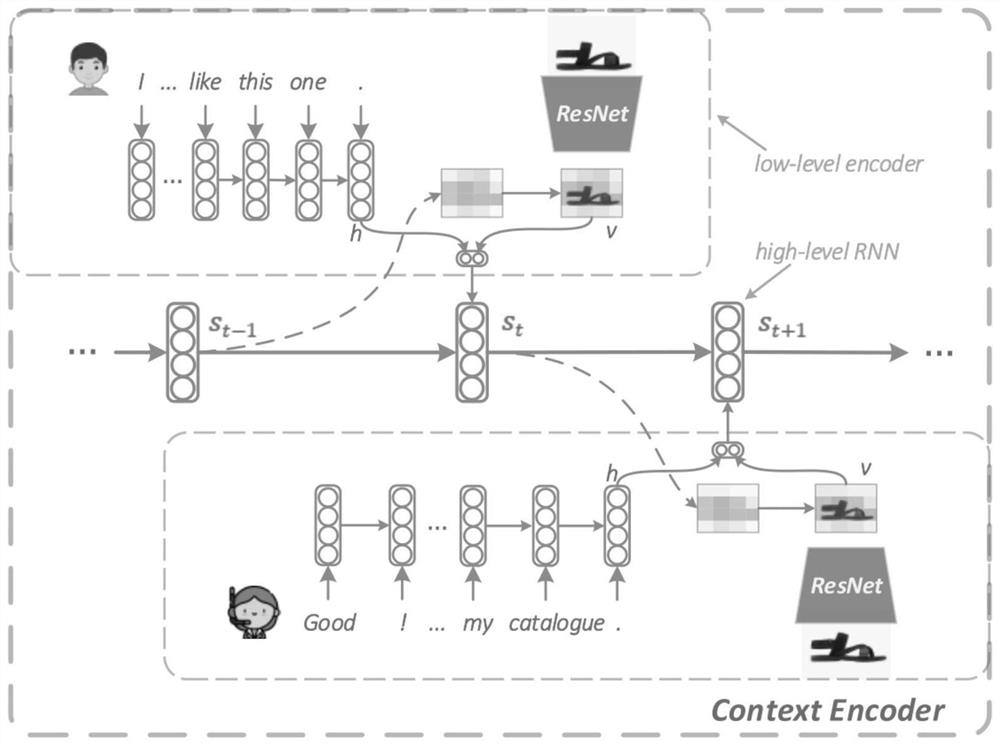

[0037] The encoding is performed by a context encoder. At the low level, the context encoder comprises a word-level recurrent neural network and a residual network enhanced with soft visual attention; at the high level, it comprises a sentence-level recurrent neural network.
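The two-level structure described above can be illustrated with a minimal sketch of the textual path only. It assumes a plain Elman-style recurrent cell, random untrained weights, and a hypothetical `toy_embed` function standing in for learned word embeddings; none of these choices are specified in the source, and the image branch is omitted.

```python
import math
import random


def make_matrix(rows, cols, rng):
    """Small random weight matrix (illustrative, untrained)."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


class SimpleRNN:
    """Assumed cell: h_t = tanh(W_x x_t + W_h h_{t-1})."""

    def __init__(self, input_dim, hidden_dim, rng):
        self.hidden_dim = hidden_dim
        self.w_x = make_matrix(hidden_dim, input_dim, rng)
        self.w_h = make_matrix(hidden_dim, hidden_dim, rng)

    def final_state(self, inputs):
        """Run over a sequence of vectors and return the last hidden state."""
        h = [0.0] * self.hidden_dim
        for x in inputs:
            from_input = matvec(self.w_x, x)
            from_hidden = matvec(self.w_h, h)
            h = [math.tanh(a + b) for a, b in zip(from_input, from_hidden)]
        return h


def toy_embed(token, dim=8):
    """Hypothetical deterministic embedding: seeds a generator with the token."""
    rng = random.Random(token)
    return [rng.uniform(-1.0, 1.0) for _ in range(dim)]


def encode_dialogue(dialogue, embed, word_rnn, sentence_rnn):
    """Low level: one vector per utterance. High level: one context vector."""
    utterance_vectors = []
    for utterance in dialogue:
        word_vectors = [embed(token) for token in utterance.split()]
        utterance_vectors.append(word_rnn.final_state(word_vectors))
    return sentence_rnn.final_state(utterance_vectors)


rng = random.Random(0)
word_rnn = SimpleRNN(input_dim=8, hidden_dim=6, rng=rng)
sentence_rnn = SimpleRNN(input_dim=6, hidden_dim=4, rng=rng)
context = encode_dialogue(
    ["hello there", "show me red shoes"], toy_embed, word_rnn, sentence_rnn
)
```

In this sketch the final hidden state of the word-level network serves as the utterance representation, and the final hidden state of the sentence-level network serves as the context vector.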

[0038] Specifically, at the low level, an input text utterance is encoded word by word by the word-level recurrent neural network, and the final hidden state, which embeds the information of the entire utterance, is taken as the representation of that utterance. Note that utterances can be textual or multi-modal. As for the extraction of visual features, because users attend to different image regions to different degrees, image utterances of commodities use soft visual attention-enhanced residual Differential networ...
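Soft visual attention in general can be sketched as a softmax-weighted sum over region features. The sketch below assumes dot-product scoring against a hypothetical `query` vector and assumes the region features have already been extracted (for example by a residual network); the source text is truncated before it specifies how the attention is actually computed.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def soft_visual_attention(region_features, query):
    """Weight each image region by its relevance to the query.

    region_features: one feature vector per image region (assumed given).
    query: hypothetical vector expressing what the user is attending to.
    Returns the attended image vector and the per-region weights.
    """
    # Assumed scoring function: dot product between region and query.
    scores = [sum(f * q for f, q in zip(region, query)) for region in region_features]
    weights = softmax(scores)
    dim = len(region_features[0])
    attended = [
        sum(w * region[i] for w, region in zip(weights, region_features))
        for i in range(dim)
    ]
    return attended, weights


regions = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attended, weights = soft_visual_attention(regions, query=[2.0, 0.0])
```

Because the weights are a softmax, they are positive and sum to one, so every region contributes to the image representation, with regions that score higher against the query contributing more.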

Embodiment 2

[0058] The purpose of this embodiment is to provide a multi-modal customer service automatic reply system.

[0059] In order to achieve the above purpose, this embodiment provides a multi-modal customer service automatic reply system, including:

[0060] A context encoder, which receives the utterance and encodes it to obtain a context vector;

[0061] An intent category identification module, which determines the intent category corresponding to the context vector using a pre-trained intent category identification model;

[0062] A reply category determining module, which determines the reply category corresponding to the intent based on set rules;

[0063] A reply generation module, which, according to the reply category, takes the context vector as input and generates the corresponding reply using a pre-trained reply model.
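The four modules above can be wired together roughly as follows. This is a sketch under stated assumptions: the intent names, reply category names, and rule table are hypothetical placeholders, the stub functions stand in for the pre-trained models, and dispatching to one generator per reply category is only one possible reading of "according to the reply category".

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

ContextVector = List[float]

# Hypothetical rule table mapping intent category -> reply category.
# The actual categories and rules are not given in this text.
REPLY_RULES: Dict[str, str] = {
    "greeting": "general",
    "ask_attribute": "knowledge_aware",
    "show_similar": "recommendation",
}


@dataclass
class ReplyPipeline:
    encode: Callable[[List[str]], ContextVector]       # context encoder
    classify_intent: Callable[[ContextVector], str]    # intent identification
    rules: Dict[str, str]                              # reply category rules
    reply_models: Dict[str, Callable[[ContextVector], str]]  # reply generation

    def reply(self, dialogue: List[str]) -> str:
        context = self.encode(dialogue)           # encode utterances
        intent = self.classify_intent(context)    # determine intent category
        category = self.rules[intent]             # apply the set rules
        return self.reply_models[category](context)  # generate the reply


# Stub components standing in for the pre-trained models (assumptions).
pipeline = ReplyPipeline(
    encode=lambda dialogue: [float(len(dialogue))],
    classify_intent=lambda ctx: "ask_attribute" if ctx[0] > 1 else "greeting",
    rules=REPLY_RULES,
    reply_models={
        "general": lambda ctx: "Hello, how can I help?",
        "knowledge_aware": lambda ctx: "Here are the product attributes.",
        "recommendation": lambda ctx: "Here are some similar items.",
    },
)

answer = pipeline.reply(["hi", "what material is this jacket?"])
```

Keeping the intent-to-category mapping in a plain table reflects that this step is rule-based in the source, while the encoder, intent classifier, and reply model are the trained components.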

Embodiment 3

[0065] The purpose of this embodiment is to provide an electronic device.

[0066] To achieve the above purpose, this embodiment provides an electronic device comprising a memory, a processor, and a computer program stored in the memory and executable on the processor. When the processor executes the program, it performs the following:

[0067] Receive the utterance and encode it to get the context vector;

[0068] Based on the context vector, determine the corresponding intent category using the pre-trained intent category recognition model;

[0069] Determine the reply category corresponding to the intent based on the set rules;

[0070] According to the reply category, take the context vector as input and generate the corresponding reply using a pre-trained reply model.
