Virtual dress-up method based on 2D images

An image and clothing technology in the field of virtual dress-up based on 2D images. It addresses problems such as incorrectly generated results and high computational cost, and achieves good synthesis quality.


Embodiment Construction

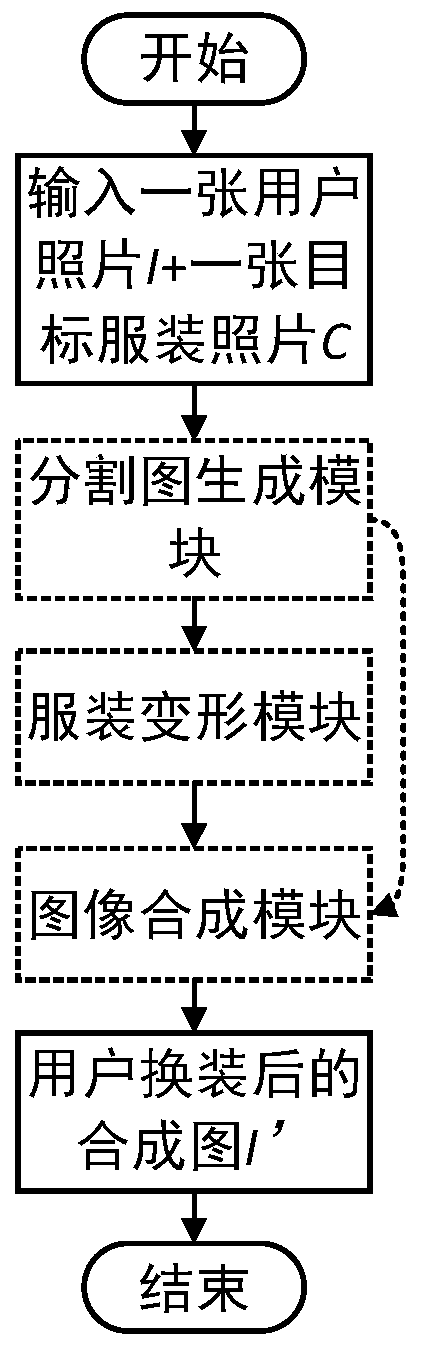

[0029] The specific training and testing steps of the present invention will be described in detail below in conjunction with the accompanying drawings.

[0030] In this embodiment, the software environment is Ubuntu 16.04.

[0031] For the training phase, the overall process of the method is shown in Figure 1.

[0032] Step 1: Input an arbitrary user photo I and a target clothing photo C. Resize both images to 256×192×3, where 3 denotes the three channels of an RGB color image.
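Step 1 can be illustrated with a minimal, dependency-free sketch. It assumes images are stored as nested lists indexed `[row][col]` holding `(r, g, b)` tuples, and it uses nearest-neighbour sampling; the source does not state which interpolation method is used, and a real pipeline would more likely call an imaging library with bilinear or bicubic resampling. The names `resize_nearest` and `prepare_inputs` are hypothetical.

```python
# Sketch of Step 1: bring user photo I and clothing photo C to 256 x 192 x 3
# (height x width x RGB). Assumption: nearest-neighbour sampling on nested
# lists; the source does not specify the resizing method.

TARGET_H, TARGET_W = 256, 192


def resize_nearest(img, out_h=TARGET_H, out_w=TARGET_W):
    """Resize an H x W image of RGB tuples with nearest-neighbour sampling."""
    in_h, in_w = len(img), len(img[0])
    out = []
    for y in range(out_h):
        # Map each output row back to a source row (integer arithmetic).
        src_y = min(in_h - 1, y * in_h // out_h)
        row = []
        for x in range(out_w):
            # Map each output column back to a source column.
            src_x = min(in_w - 1, x * in_w // out_w)
            row.append(tuple(img[src_y][src_x]))
        out.append(row)
    return out


def prepare_inputs(user_photo, clothing_photo):
    """Hypothetical helper: return (I, C), both resized to 256 x 192 x 3."""
    return resize_nearest(user_photo), resize_nearest(clothing_photo)


if __name__ == "__main__":
    # Toy 4 x 3 inputs, each filled with a single colour.
    I_raw = [[(255, 0, 0)] * 3 for _ in range(4)]
    C_raw = [[(0, 0, 255)] * 3 for _ in range(4)]
    I, C = prepare_inputs(I_raw, C_raw)
    print(len(I), len(I[0]), len(I[0][0]))
```

Fixing both inputs to the same height and width lets later steps stack the person representation and the clothing image pixel for pixel.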

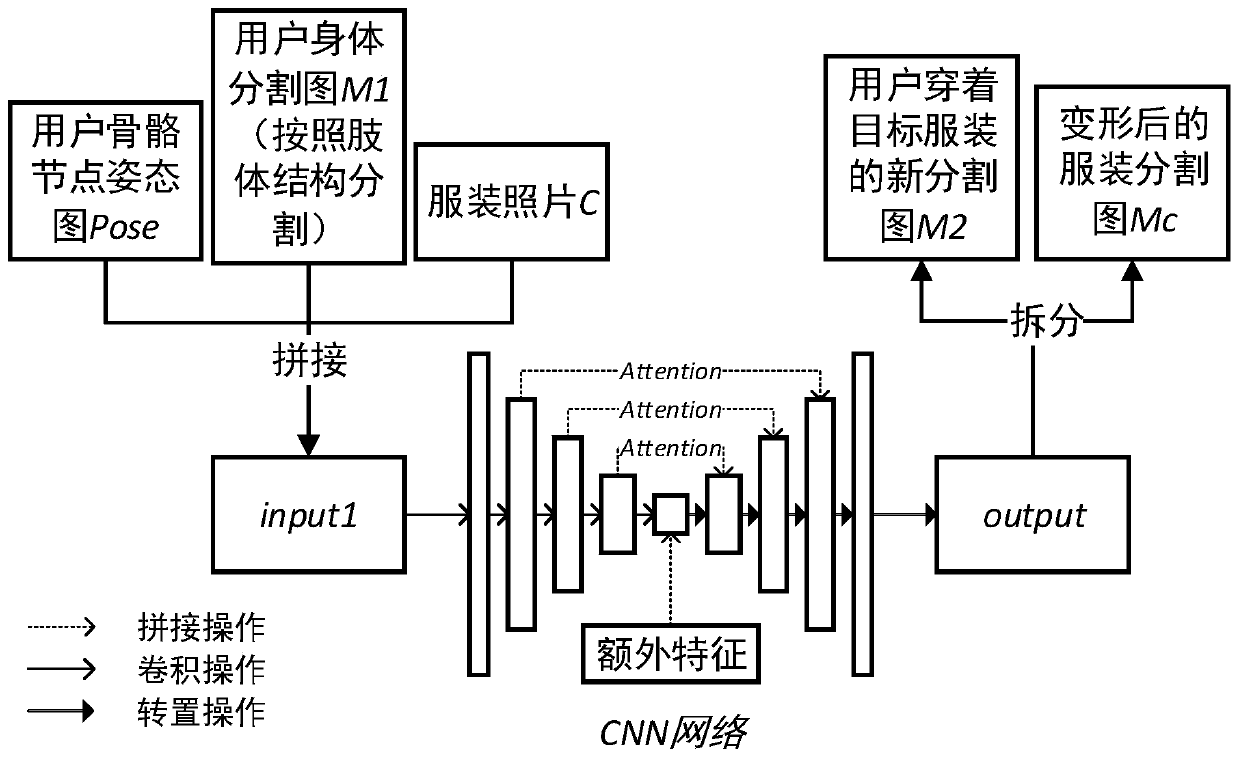

[0033] Step 2: From the user in photo I, extract the user's skeletal keypoint pose map Pose and the user's body segmentation map M1 (here, the body is segmented according to its limb structure).
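The segmentation map M1 can be pictured as a per-pixel label map. The sketch below assumes a hypothetical label set (`PART_LABELS`), since the source does not list which limb-structure classes M1 contains; it only shows how such a map splits into one binary mask per part and into an overall body mask. The function names `part_masks` and `body_mask` are also hypothetical.

```python
# Hypothetical label ids for a limb-structure segmentation M1.
# The source does not specify the label set; these are placeholders.
PART_LABELS = {
    "background": 0,
    "head": 1,
    "torso": 2,
    "left_arm": 3,
    "right_arm": 4,
    "legs": 5,
}


def part_masks(label_map, part_labels=PART_LABELS):
    """Split an H x W label map into one binary mask per named part."""
    return {
        name: [[1 if v == label else 0 for v in row] for row in label_map]
        for name, label in part_labels.items()
    }


def body_mask(label_map, background=PART_LABELS["background"]):
    """Binary foreground mask: 1 wherever any body part is labelled."""
    return [[0 if v == background else 1 for v in row] for row in label_map]


if __name__ == "__main__":
    toy = [[0, 1, 1], [3, 2, 4]]  # 2 x 3 toy label map
    print(part_masks(toy)["head"])
    print(body_mask(toy))
```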

[0034] Step 2.1: Feed image I into a network model that identifies body pose joint points, obtaining 18 skeletal joint points (including the left eye, right eye, nose, left ear, right ear, neck, left hand, right hand, left elbow joint, right elbow joint, left shoulder, right shoulder, left hip ...
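One way to turn the 18 detected joint points into the pose map Pose is to give each joint its own channel and mark a small square around the joint's pixel. This encoding, the square radius, and the function name `pose_map` are assumptions for illustration; the source only states that 18 joint points are obtained. Undetected joints are represented as `None` and leave their channel empty, and the joint order is whatever order the pose network outputs.

```python
# Sketch: encode 18 joint points as an 18-channel binary pose map on the
# 256 x 192 image grid. Assumption: one channel per joint, with a square
# of side 2*radius+1 centred on the joint; the source does not specify this.

NUM_JOINTS = 18
H, W = 256, 192


def pose_map(keypoints, radius=3, height=H, width=W):
    """keypoints: 18 entries, each (x, y) in pixels or None if undetected."""
    if len(keypoints) != NUM_JOINTS:
        raise ValueError("expected 18 keypoints")
    channels = []
    for kp in keypoints:
        ch = [[0] * width for _ in range(height)]
        if kp is not None:
            x, y = int(round(kp[0])), int(round(kp[1]))
            # Mark the square around the joint, clipped to the image bounds.
            for yy in range(max(0, y - radius), min(height, y + radius + 1)):
                for xx in range(max(0, x - radius), min(width, x + radius + 1)):
                    ch[yy][xx] = 1
        channels.append(ch)
    return channels


if __name__ == "__main__":
    kps = [None] * NUM_JOINTS
    kps[0] = (96, 40)  # toy position for the first joint
    pm = pose_map(kps)
    print(len(pm), len(pm[0]), len(pm[0][0]))
```

Keeping one channel per joint preserves which body part each point belongs to, which a single combined channel would lose.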
