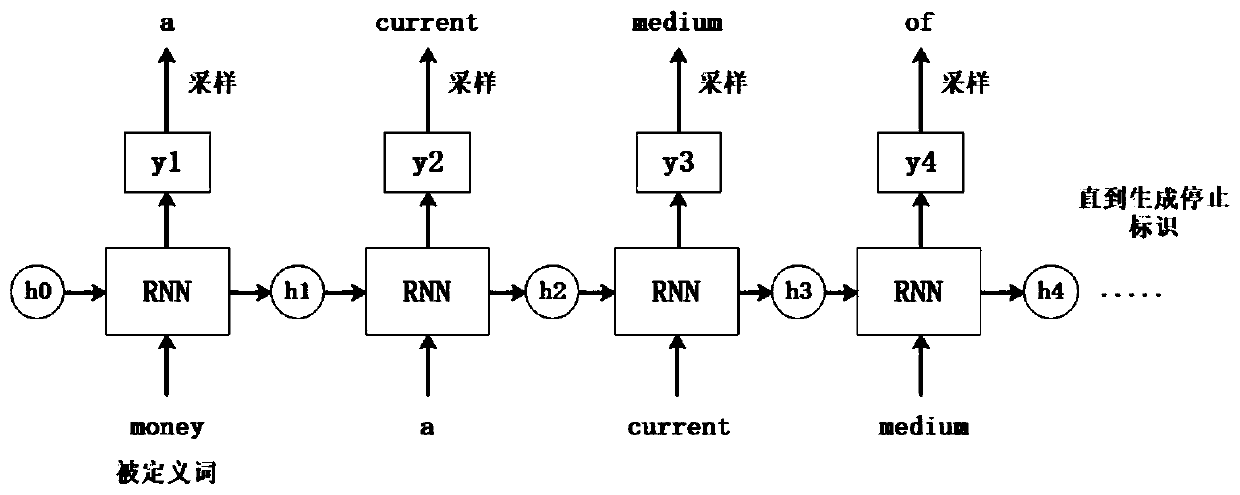

Word definition generation method based on recurrent neural network and latent variable structure

A technique combining a recurrent neural network with latent variables, applied in the field of natural language processing. It addresses problems such as polysemy (a single word having several meanings) and aims to generate high-quality, easy-to-understand definitions.

Embodiment Construction

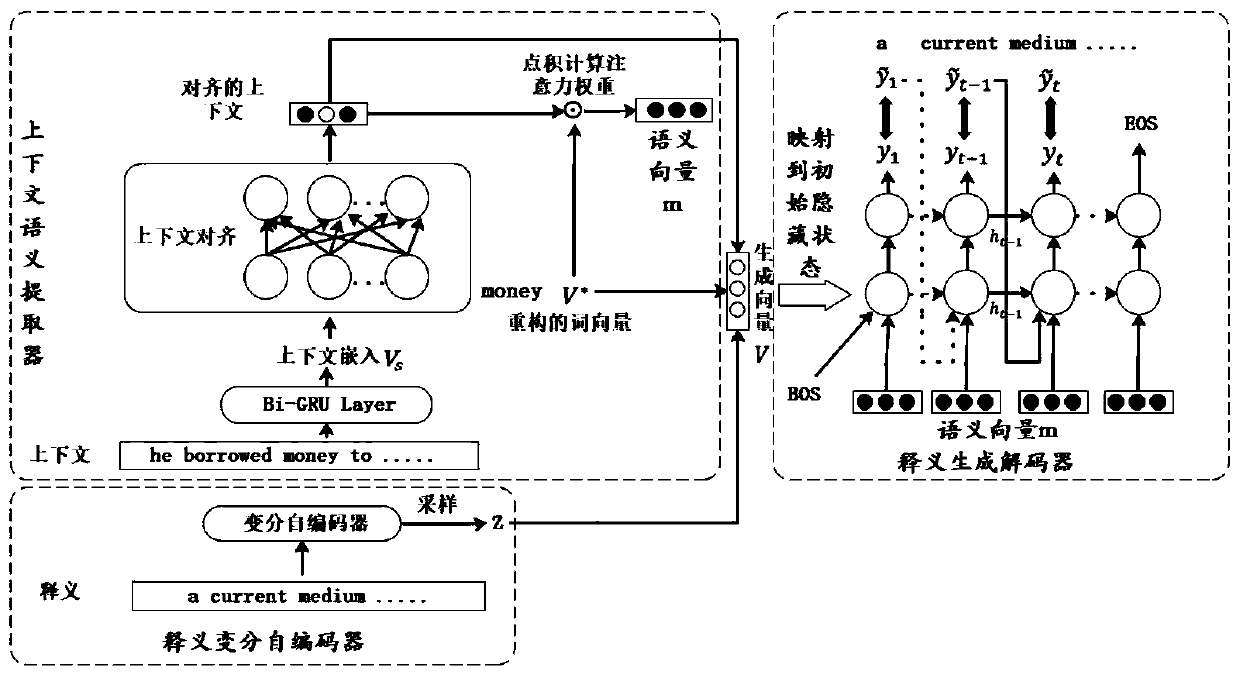

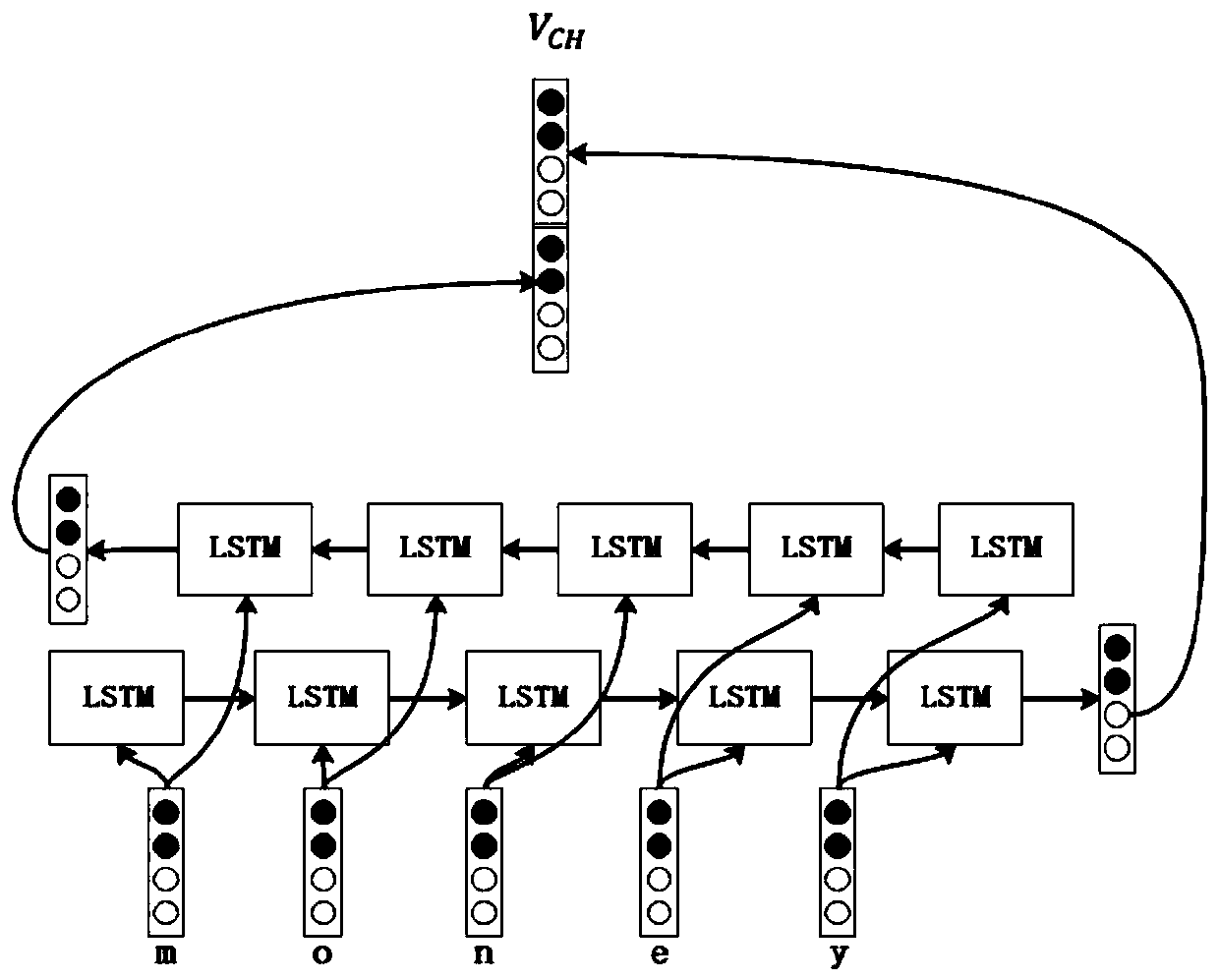

[0070] Figure 2 is a schematic diagram of the model structure of the word definition generation method of the present invention, which is based on a recurrent neural network and a latent variable structure. This implementation includes a context semantic extractor, a paraphrase variational autoencoder, and a paraphrase generation decoder.

[0071] Some basic concepts involved in the present invention, and the relationships between them:

[0072] 1. Vocabulary: all the words included in the dictionary, that is, all the words that have definitions;

[0073] 2. Initial vocabulary: take the 70,000 most frequent tokens in the WikiText-103 dataset, remove special symbols, and keep only English words as the initial vocabulary;

[0074] 3. Basic corpus: a corpus is a collection of language material that has actually occurred in use, gathered so that explanations of words can draw examples or data evidence from it. The corpus described in the pres...
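The initial-vocabulary step in item 2 can be sketched as follows. This is a minimal illustration, not the patent's implementation: the function name `build_initial_vocabulary`, the `ENGLISH_WORD` pattern (ASCII letters only), and the assumption that the dataset has already been split into tokens are all hypothetical choices made for the example.

```python
import re
from collections import Counter

# Assumption: an "English word" is a token made only of ASCII letters.
# The source does not say how special symbols are detected.
ENGLISH_WORD = re.compile(r"^[A-Za-z]+$")


def build_initial_vocabulary(tokens, top_k=70_000):
    """Keep the top_k most frequent tokens, then drop non-word tokens.

    `tokens` is any iterable of already-tokenized strings, for example
    the whitespace-split text of WikiText-103 (hypothetical input).
    """
    counts = Counter(tokens)
    # Step 1: select the top_k tokens by frequency.
    most_frequent = [tok for tok, _ in counts.most_common(top_k)]
    # Step 2: remove special symbols, keeping only English words.
    return [tok for tok in most_frequent if ENGLISH_WORD.match(tok)]


# Toy usage with a tiny token stream instead of the real dataset.
sample = "the cat sat on the mat , the cat <unk> @-@ sat".split()
vocab = build_initial_vocabulary(sample, top_k=6)
print(vocab)
```

Because filtering happens after the frequency cut, the resulting vocabulary can contain fewer than `top_k` entries, which matches the order of operations given in item 2.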
