Gesture error correction method, system and device in augmented reality environment

An augmented reality gesture error correction method, applied in the field of information processing, that addresses insufficient timeliness and accuracy and improves the gesture recognition rate and error correction efficiency.

Examples

Embodiment 1

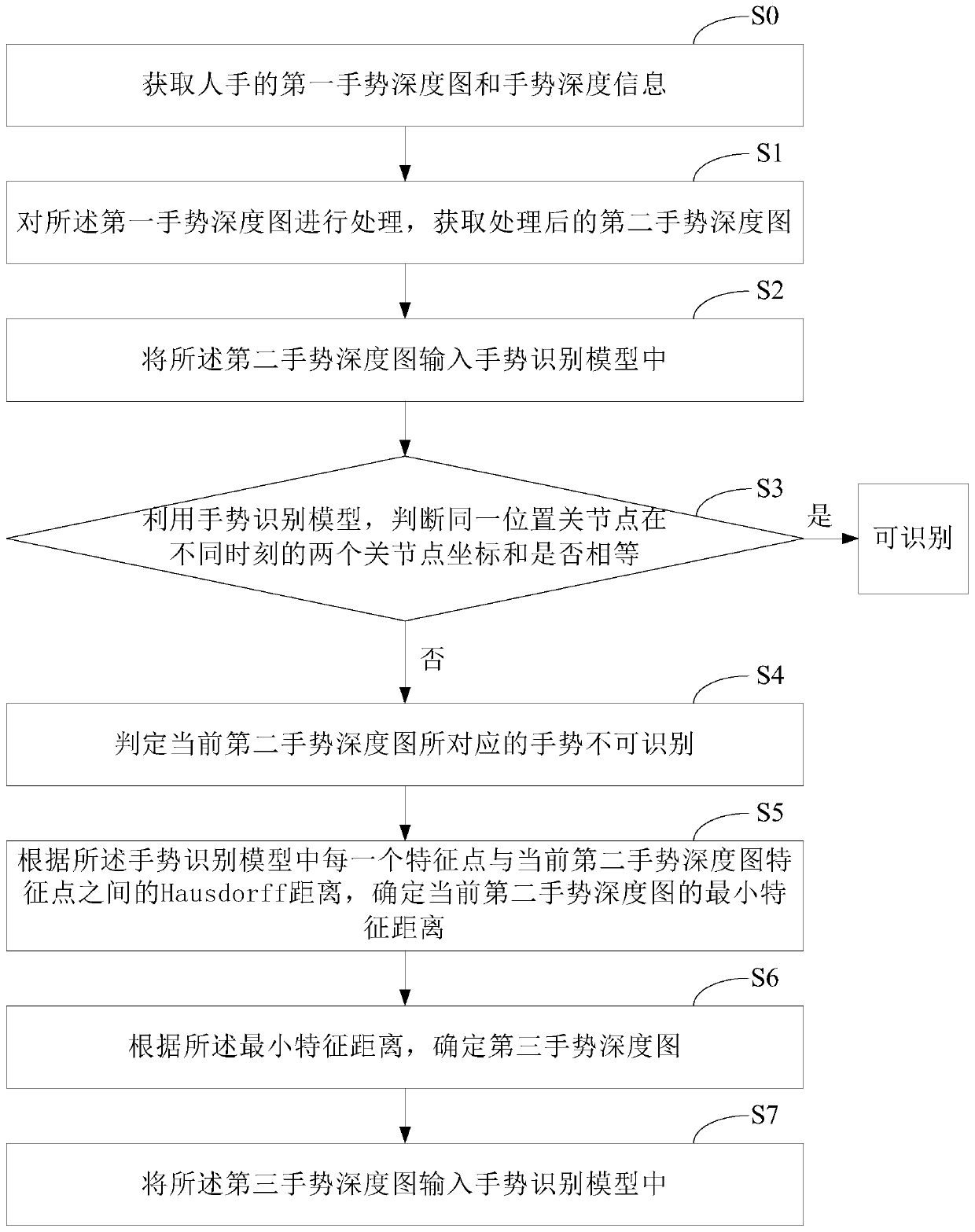

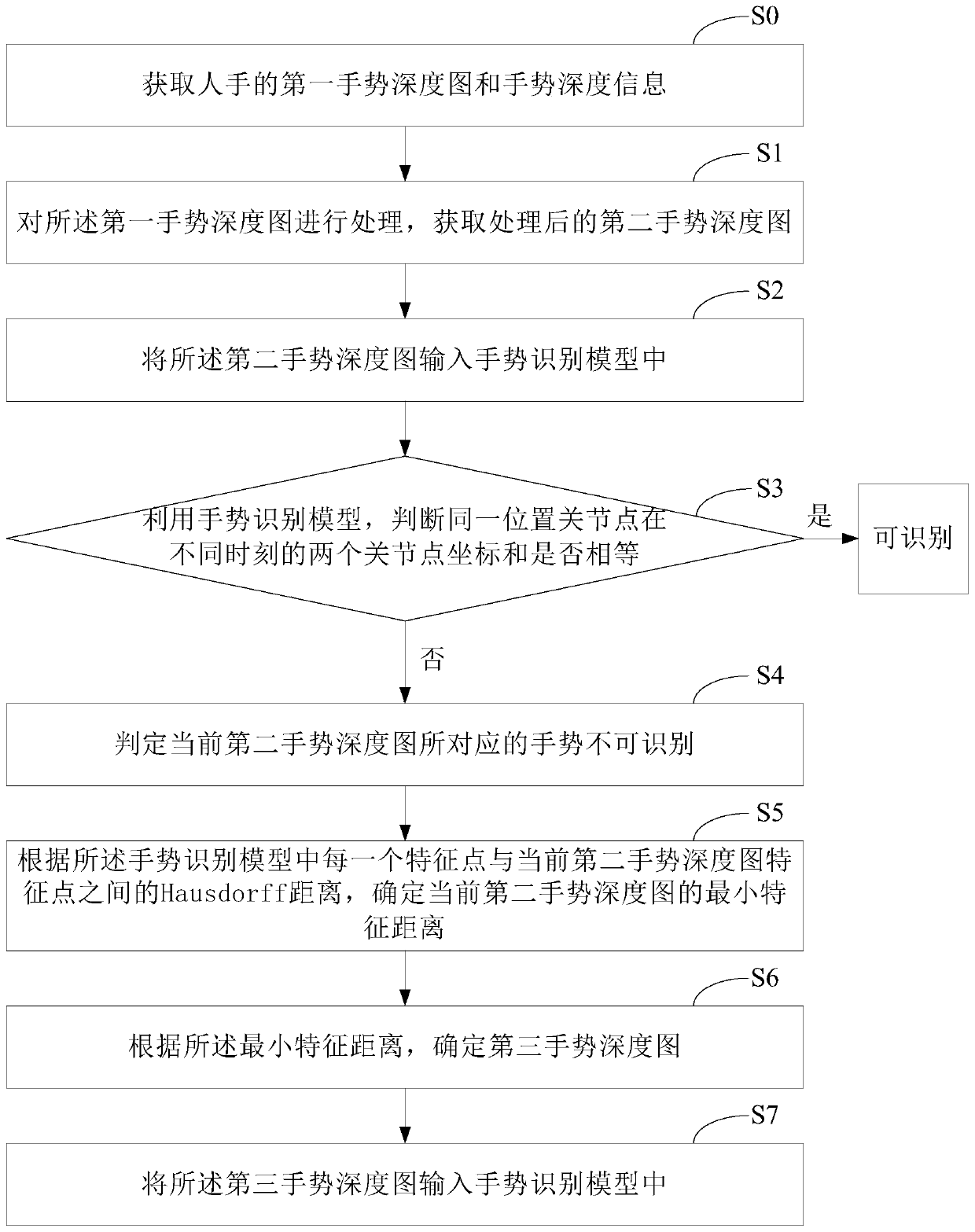

[0059] See Figure 1, which is a schematic flowchart of a gesture error correction method in an augmented reality environment provided by an embodiment of the present application. As Figure 1 shows, the gesture error correction method in this embodiment mainly includes the following steps:

[0060] S0: Obtain the first gesture depth map and gesture depth information of the human hand.

[0061] Here, the first gesture depth map is the original gesture depth map and includes a static gesture depth map and a dynamic gesture depth map; the gesture depth information includes joint point coordinates.

[0062] In this embodiment, a Kinect device can be used to obtain the first gesture depth map and the gesture depth information of the human hand; the depth coordinates of the hand's skeletal joint points can be obtained from the Kinect's skeleton information.
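The output of step S0 can be pictured as a small record that pairs the original depth map with the joint point coordinates. The following is a minimal sketch only: `GestureSample` and `acquire_sample` are hypothetical names introduced for illustration, the depth map is assumed to be a rectangular grid of per-pixel depth values, and reading frames from the Kinect itself is deliberately left out.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Assumption: each joint point is an (x, y, depth) triple.
Joint = Tuple[float, float, float]


@dataclass
class GestureSample:
    """Hypothetical container for what step S0 produces."""
    depth_map: List[List[int]]  # first (original) gesture depth map
    joints: List[Joint]         # gesture depth information: joint coordinates
    is_dynamic: bool = False    # False = static gesture, True = dynamic gesture


def acquire_sample(depth_frame: List[List[int]],
                   skeleton_joints: List[Joint],
                   is_dynamic: bool = False) -> GestureSample:
    """Package an already-captured depth frame and joint list.

    Sensor access is out of scope; the caller supplies the raw data.
    """
    if not depth_frame or not depth_frame[0]:
        raise ValueError("depth frame is empty")
    width = len(depth_frame[0])
    if any(len(row) != width for row in depth_frame):
        raise ValueError("depth frame rows have unequal length")
    return GestureSample(depth_map=depth_frame,
                         joints=list(skeleton_joints),
                         is_dynamic=is_dynamic)


# Usage with made-up values: a 2x2 depth frame and one joint.
sample = acquire_sample([[0, 800], [810, 0]], [(1.0, 0.0, 800.0)])
```

Keeping the depth map and the joint coordinates in one record means later steps can be handed a single object regardless of whether the gesture is static or dynamic.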

[0063] S1: Pr...

Embodiment 2

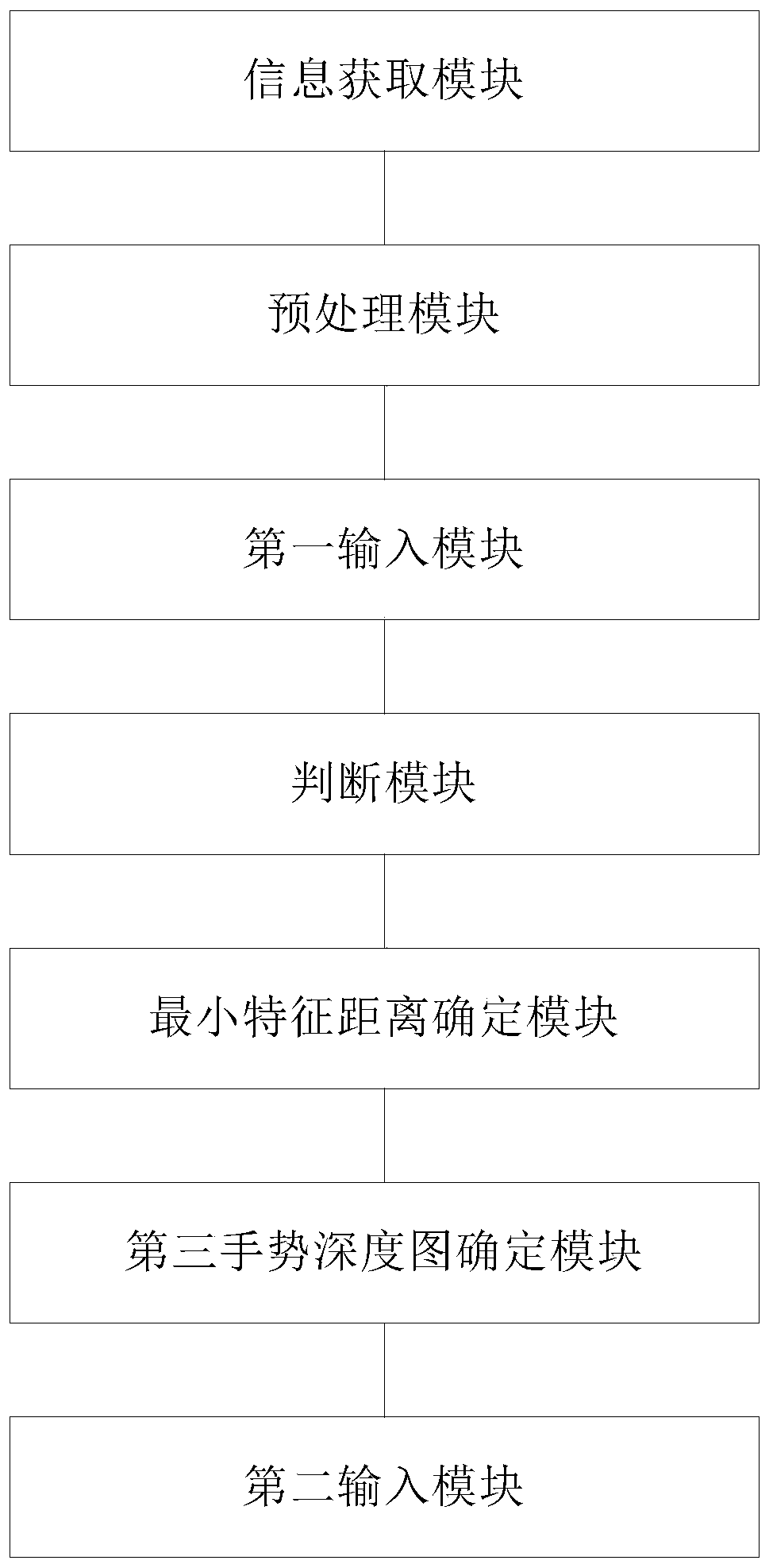

[0105] Building on the embodiment shown in Figure 1, see Figure 2, which is a schematic structural diagram of a gesture error correction system in an augmented reality environment provided by an embodiment of the present application. As Figure 2 shows, the gesture error correction system in this embodiment mainly includes: an information acquisition module, a preprocessing module, a first input module, a judgment module, a minimum characteristic distance determination module, a third gesture depth map determination module and a second input module.
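As a rough illustration of what a minimum characteristic distance determination module might compute, the sketch below picks the reference gesture whose feature vector lies closest to an observed one. This is an assumption-laden example, not the patented computation: the fixed-length numeric feature vectors, the Euclidean metric, and the names `min_feature_distance` and `templates` are all hypothetical.

```python
import math
from typing import Dict, Sequence, Tuple


def min_feature_distance(features: Sequence[float],
                         templates: Dict[str, Sequence[float]]
                         ) -> Tuple[str, float]:
    """Return (label, distance) of the template nearest to `features`.

    Assumes every template has the same length as `features` and that
    Euclidean distance is an acceptable measure of feature distance.
    """
    if not templates:
        raise ValueError("no reference templates supplied")
    best_label, best_dist = "", math.inf
    for label, reference in templates.items():
        if len(reference) != len(features):
            raise ValueError(f"template {label!r} has a different length")
        dist = math.dist(features, reference)  # Euclidean distance
        if dist < best_dist:
            best_label, best_dist = label, dist
    return best_label, best_dist


# Usage with made-up two-dimensional features.
label, dist = min_feature_distance([3.0, 3.0],
                                   {"fist": [0.0, 0.0], "palm": [3.0, 4.0]})
```

A linear scan like this is adequate for a small set of reference gestures; a larger set would likely call for an indexed nearest-neighbour search.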

[0106] The information acquisition module is used to acquire the first gesture depth map and the gesture depth information of the human hand. The first gesture depth map is the original gesture depth map and includes a static gesture depth map and a dynamic gesture depth map; the gesture depth information includes joint point coordinates. The preproces...
