Image-text retrieval system and method based on multi-angle self-attention mechanism

A retrieval system and attention technology applied to the field of cross-modal retrieval. It addresses problems such as insufficiently informative features and improves retrieval performance.

AI Technical Summary

Problems solved by technology

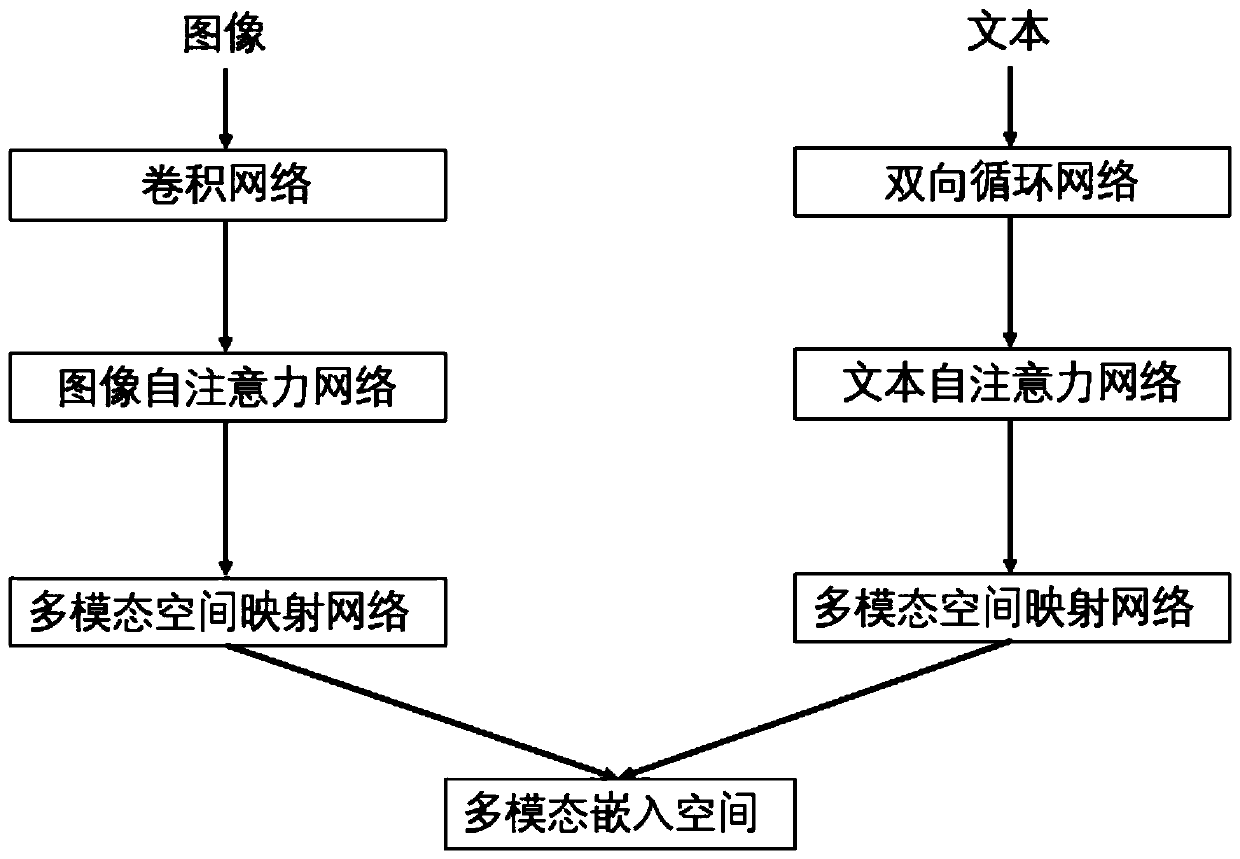

Embodiment Construction

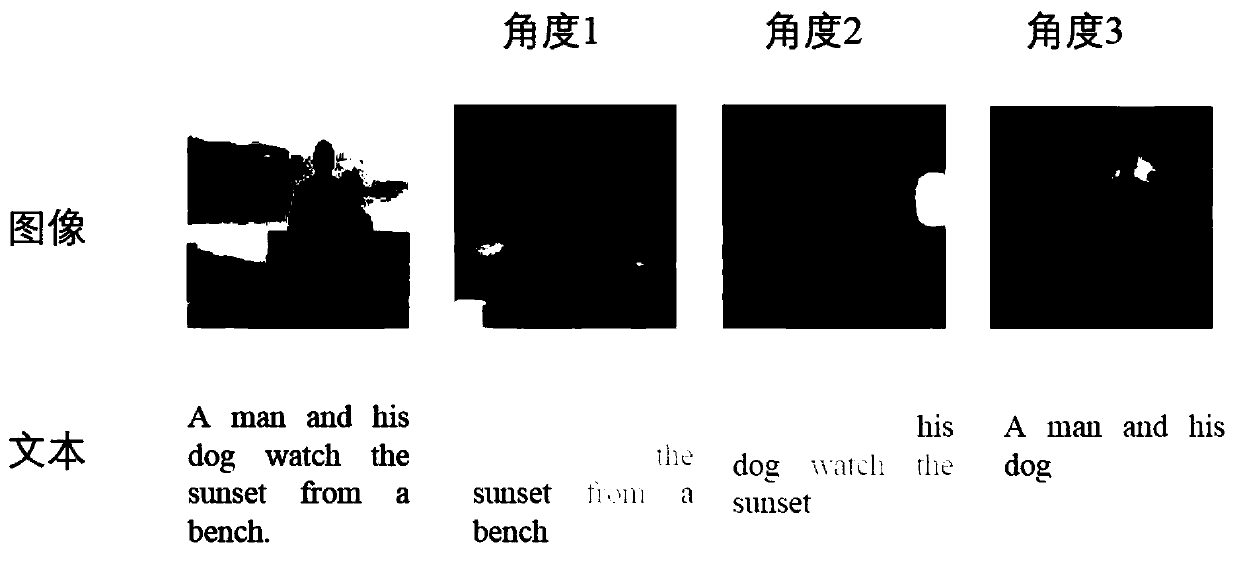

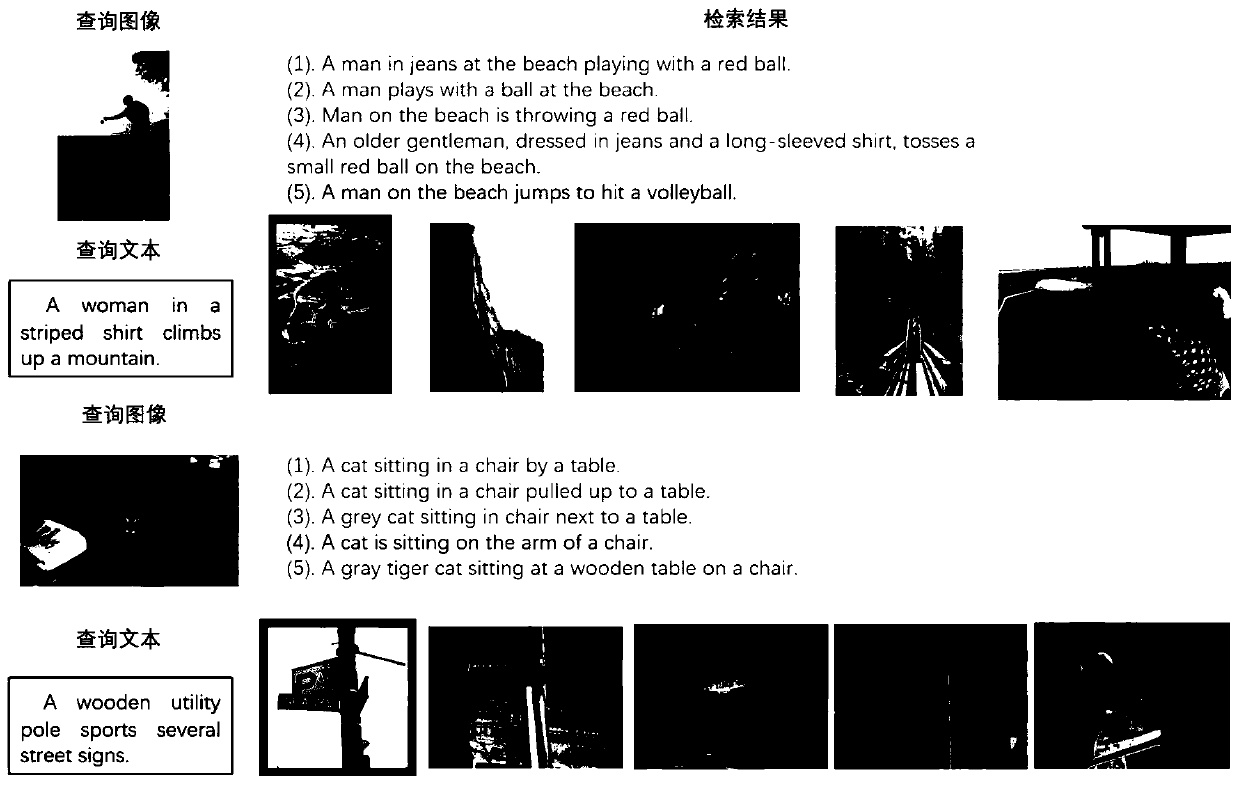

[0038] As the background section shows, the instance features extracted by existing image-text retrieval methods are relatively coarse and do not reflect the key semantic information well, and the optimization method also leaves room for improvement. The applicant studied these problems and concluded that key information can be extracted from different angles. For example, given an image, different people may pay attention to different content, such as the dog or the grass, and the same is true for text. To this end, a self-attention mechanism is used to extract key information from different angles, and the optimization of hard examples is studied further. It was found that optimizing over all examples first, and then over hard examples, allows the proposed framework to be optimized more effectively and to learn better network parameters.
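The two ideas in paragraph [0038] can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the formulation claimed in the patent: the attention form (a two-layer projection followed by a softmax over regions or words, one column per angle), the hinge-based triplet ranking loss, and every name below (`multi_angle_attention`, `triplet_ranking_loss`, `loss_for_epoch`, `w1`, `w2`, `margin`, `switch_epoch`) are hypothetical choices made for the example.

```python
import numpy as np


def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def multi_angle_attention(features, w1, w2):
    """Summarize n local features from k different "angles".

    features: (n, d) image-region or word features.
    w1: (d, h), w2: (h, k) projection matrices (assumed form, normally learned).
    Returns a (k, d) matrix with one attended summary per angle, and the
    (k, n) attention weights, where each row sums to 1.
    """
    scores = np.tanh(features @ w1) @ w2   # (n, k): one score per item and angle
    weights = softmax(scores.T, axis=-1)   # (k, n): normalize over the n items
    return weights @ features, weights


def triplet_ranking_loss(img, txt, margin=0.2, hardest_only=False):
    """Hinge ranking loss over a batch of matching image-text pairs.

    img, txt: (b, d) L2-normalized embeddings; row i of each forms a pair.
    hardest_only=False sums the violations of all negatives in the batch
    (overall optimization); hardest_only=True keeps only the single worst
    negative per query (hard-example optimization).
    """
    sim = img @ txt.T                                         # (b, b) similarities
    pos = np.diag(sim)                                        # matching-pair scores
    cost_i2t = np.maximum(0.0, margin + sim - pos[:, None])   # image as query
    cost_t2i = np.maximum(0.0, margin + sim - pos[None, :])   # text as query
    diag = np.eye(len(sim), dtype=bool)
    cost_i2t[diag] = 0.0                                      # ignore the positives
    cost_t2i[diag] = 0.0
    if hardest_only:
        return cost_i2t.max(axis=1).sum() + cost_t2i.max(axis=0).sum()
    return cost_i2t.sum() + cost_t2i.sum()


def loss_for_epoch(img, txt, epoch, switch_epoch):
    # Assumed schedule: train on all negatives first, then on hard negatives.
    return triplet_ranking_loss(img, txt, hardest_only=epoch >= switch_epoch)
```

In this sketch, each of the k rows returned by `multi_angle_attention` plays the role of one "angle" (for instance, one row might weight the dog regions and another the grass regions), while `loss_for_epoch` mirrors the order described above: overall optimization in the early epochs, hard-example optimization afterwards. The point at which to switch is a tuning choice that the text does not specify.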

[0039] In this embodiment, image region features...
