Three-dimensional facial expression action unit synthesis method based on a conditional generative adversarial network

Relates to three-dimensional faces and conditional generation technology, with applications in animation production, image data processing, instruments and the like. It addresses two problems: facial action unit (AU) annotation is complex, and existing methods are difficult to apply widely.

Detailed Description of the Embodiments

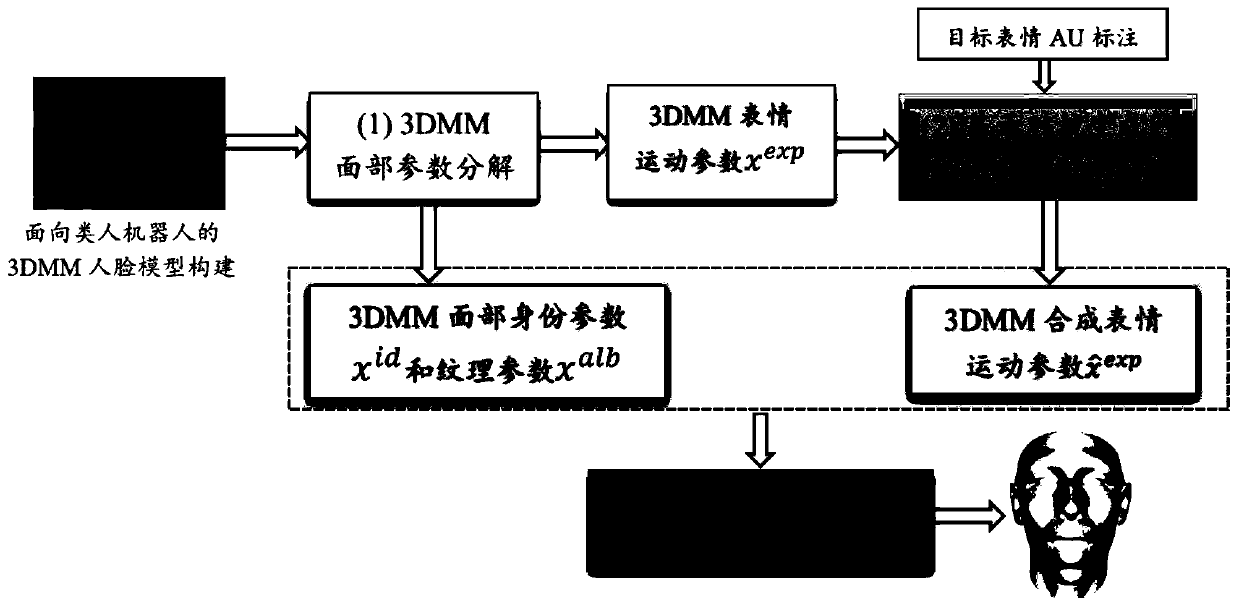

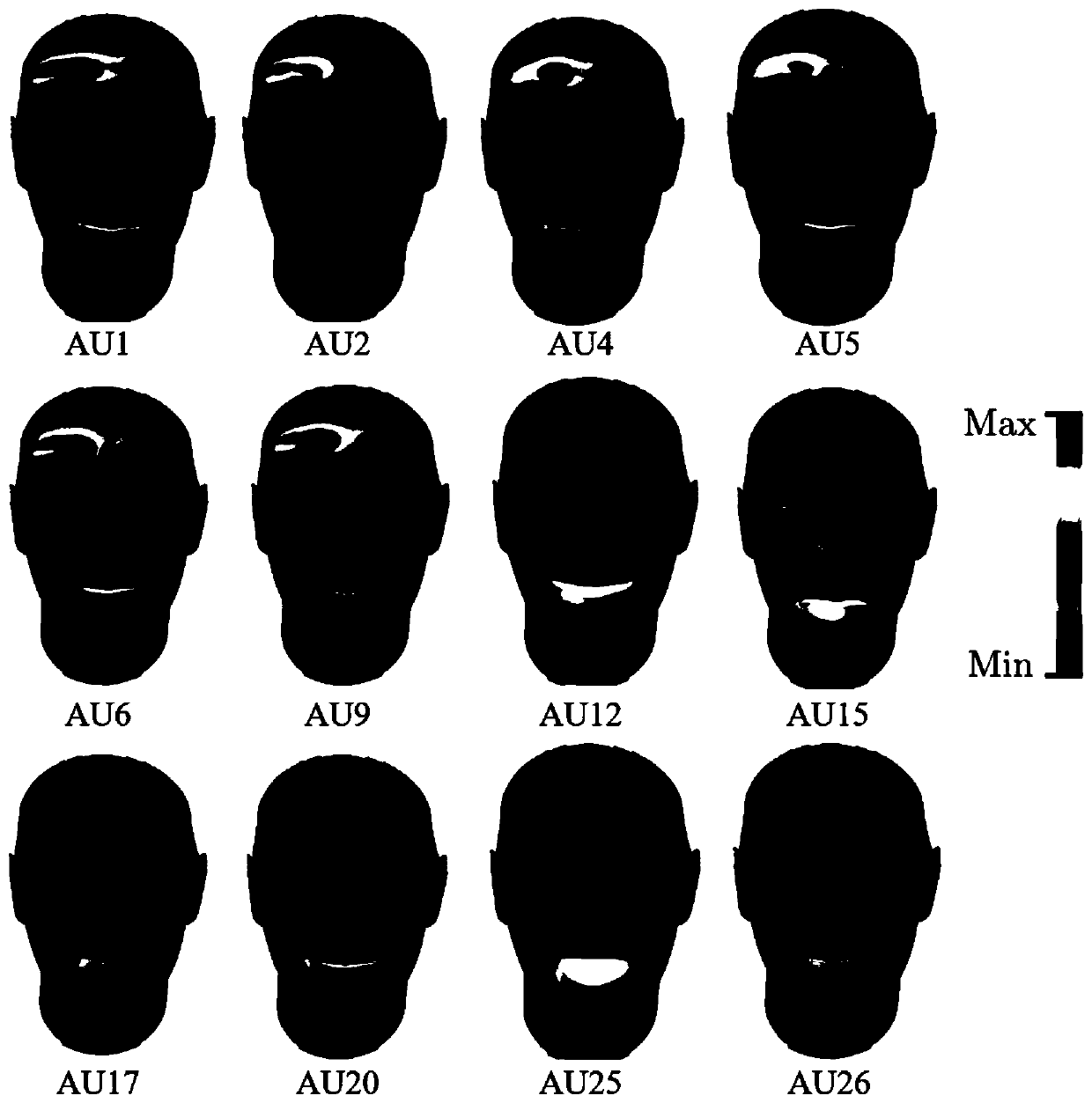

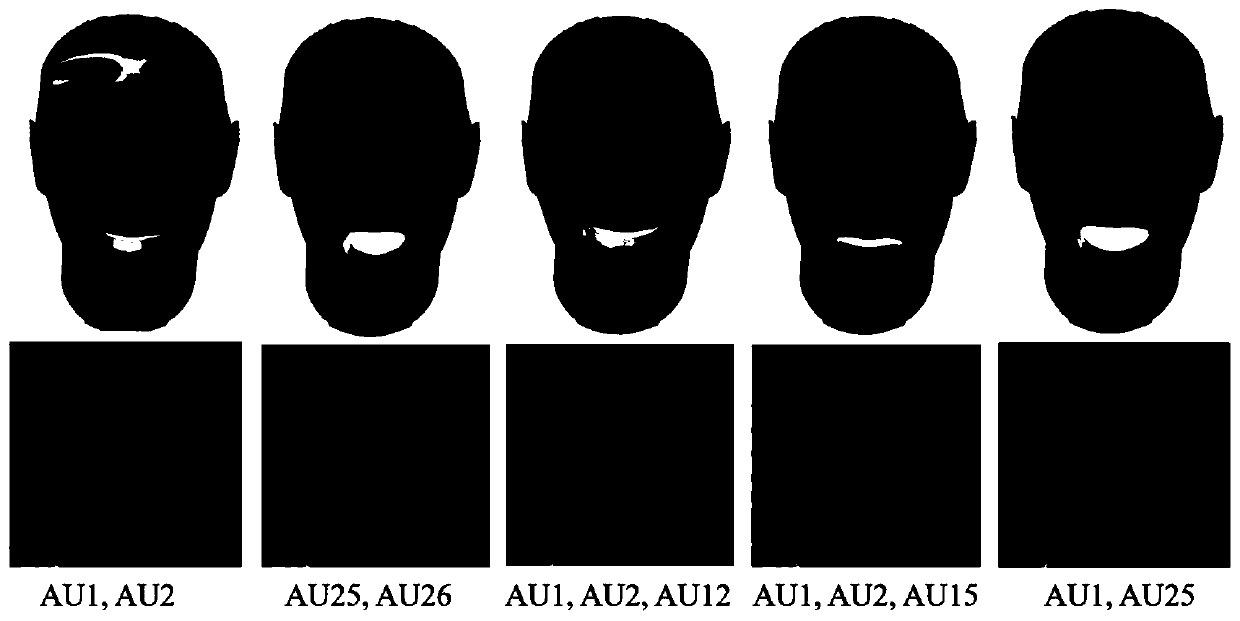

[0028] The present invention uses a three-dimensional face model of a virtual or physical humanoid robot as the carrier for studying the generation and control of natural facial expressions on humanoid robots. It mainly comprises three aspects:

[0029] (1) First, the face of the humanoid robot is decomposed parametrically with a 3D Morphable Model (3DMM). A deep generative model then learns and models the distribution of the expression parameters of the robot's 3D face model, establishing an efficient mapping between facial action unit annotations of different intensities and combinations and the distribution of facial expression parameters;

[0030] (2) Then, the discriminator of the conditional generative adversarial network judges whether the generated expression parameters are real, and is optimized adversarially, as a two-player game, against the output of the expression motion parameter generation model;

...
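
Aspect (1) above can be illustrated with a minimal sketch. It assumes the standard linear 3DMM form, in which a face mesh is the mean shape plus an identity basis weighted by coefficients alpha and an expression basis weighted by coefficients beta. It also assumes the generator is conditioned by concatenating a noise vector z with an AU intensity vector c. The patent text does not specify the bases, the number of AUs, or the network architecture. `reconstruct_face`, `ConditionalGenerator`, the two-layer MLP and all dimensions below are hypothetical, and the generator is shown untrained.

```python
import numpy as np


def reconstruct_face(mean_shape, id_basis, exp_basis, alpha, beta):
    """Linear 3DMM (assumed form): S = S_mean + B_id @ alpha + B_exp @ beta.

    mean_shape: (3N,) flattened vertex coordinates
    id_basis:   (3N, K_id) identity basis;     alpha: (..., K_id)
    exp_basis:  (3N, K_exp) expression basis;  beta:  (..., K_exp)
    Returns flattened shapes of shape (..., 3N).
    """
    return mean_shape + alpha @ id_basis.T + beta @ exp_basis.T


class ConditionalGenerator:
    """Hypothetical G(z, c): noise z plus AU intensities c -> expression coefficients beta."""

    def __init__(self, noise_dim, au_dim, hidden_dim, exp_dim, rng):
        # Randomly initialised two-layer MLP; in practice these weights would be trained adversarially.
        self.w1 = rng.normal(0.0, 0.1, (noise_dim + au_dim, hidden_dim))
        self.b1 = np.zeros(hidden_dim)
        self.w2 = rng.normal(0.0, 0.1, (hidden_dim, exp_dim))
        self.b2 = np.zeros(exp_dim)

    def __call__(self, z, au):
        # Condition the generator by concatenating the AU vector with the noise, then apply ReLU.
        h = np.maximum(0.0, np.concatenate([z, au], axis=-1) @ self.w1 + self.b1)
        return h @ self.w2 + self.b2


rng = np.random.default_rng(0)
n_vertices, k_id, k_exp, au_dim, noise_dim = 100, 10, 8, 17, 4  # illustrative sizes only
mean_shape = rng.normal(size=3 * n_vertices)
id_basis = rng.normal(size=(3 * n_vertices, k_id))
exp_basis = rng.normal(size=(3 * n_vertices, k_exp))

gen = ConditionalGenerator(noise_dim, au_dim, 32, k_exp, rng)
z = np.repeat(rng.normal(size=(1, noise_dim)), 2, axis=0)  # same noise for both samples
au = np.zeros((2, au_dim))
au[1, 0] = 1.0  # second sample: first (hypothetical) AU at full intensity
beta = gen(z, au)  # (2, k_exp) expression coefficients
faces = reconstruct_face(mean_shape, id_basis, exp_basis, np.zeros(k_id), beta)
vertices = faces.reshape(2, n_vertices, 3)  # assumes interleaved x, y, z per vertex
```

Because the identity coefficients are held at zero and the noise is shared, the two generated faces differ only through the AU condition, which is the mapping from AU annotations to expression parameters that aspect (1) describes.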
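
The adversarial game in aspect (2) can be written with the standard conditional GAN objective of Mirza and Osindero (2014). Here beta denotes the 3DMM expression parameters and c the AU condition vector; this notation is an assumption, since the patent text shown does not give the loss explicitly:

$$
\min_G \max_D \; \mathbb{E}_{(\beta, c) \sim p_{\text{data}}}\big[\log D(\beta \mid c)\big] + \mathbb{E}_{z \sim p_z,\; c \sim p_c}\big[\log\big(1 - D(G(z \mid c) \mid c)\big)\big]
$$

The discriminator maximizes this value by scoring real (beta, c) pairs near 1 and generated pairs near 0. The generator minimizes it by producing expression parameters that the discriminator accepts as real for the given AU condition.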
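
The discriminator side of aspect (2) can be sketched as follows. To keep the gradient hand-written in NumPy, the sketch assumes a deliberately tiny logistic discriminator D(beta, c) = sigmoid(w . [beta, c] + b). A practical implementation would more likely use a deep network and an autodiff framework. `LogisticConditionalDiscriminator`, `generator_loss`, the non-saturating generator loss and the synthetic "real" and "fake" data are all illustrative assumptions, not the patent's method.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class LogisticConditionalDiscriminator:
    """Hypothetical D(beta, c) = sigmoid(w . [beta, c] + b): probability that beta is real given AU vector c."""

    def __init__(self, exp_dim, au_dim, rng):
        self.w = rng.normal(0.0, 0.01, exp_dim + au_dim)
        self.b = 0.0

    def prob_real(self, beta, au):
        return sigmoid(np.concatenate([beta, au], axis=-1) @ self.w + self.b)

    def step(self, real_beta, fake_beta, au, lr=0.1):
        """One gradient-descent step on the binary cross-entropy loss; returns the loss before the update."""
        x = np.concatenate([np.concatenate([real_beta, au], axis=-1),
                            np.concatenate([fake_beta, au], axis=-1)], axis=0)
        y = np.concatenate([np.ones(len(real_beta)), np.zeros(len(fake_beta))])  # real = 1, fake = 0
        p = sigmoid(x @ self.w + self.b)
        eps = 1e-12  # avoids log(0)
        loss = -np.mean(y * np.log(p + eps) + (1.0 - y) * np.log(1.0 - p + eps))
        grad_logit = (p - y) / len(y)  # derivative of the mean BCE w.r.t. each logit
        self.w -= lr * (x.T @ grad_logit)
        self.b -= lr * grad_logit.sum()
        return loss


def generator_loss(disc, fake_beta, au):
    """Non-saturating generator loss -mean(log D(G(z|c) | c)); the generator would minimise this."""
    return -np.mean(np.log(disc.prob_real(fake_beta, au) + 1e-12))


rng = np.random.default_rng(0)
exp_dim, au_dim, batch = 8, 17, 64  # illustrative sizes only
au = rng.uniform(0.0, 1.0, (batch, au_dim))          # AU condition shared by each real/fake pair
real_beta = rng.normal(1.0, 0.1, (batch, exp_dim))   # stand-in for real expression parameters
fake_beta = rng.normal(-1.0, 0.1, (batch, exp_dim))  # stand-in for generator output

disc = LogisticConditionalDiscriminator(exp_dim, au_dim, rng)
losses = [disc.step(real_beta, fake_beta, au) for _ in range(200)]
g_loss = generator_loss(disc, fake_beta, au)
```

As the discriminator learns to separate real from generated parameters, its loss falls and the generator's loss rises. In a full training loop that rising loss is the signal the generator would descend on, alternating with discriminator steps like the one above.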

© 2025 PatSnap. All rights reserved.