OCR recognition method and terminal based on deep learning model

The invention applies deep learning to character recognition in the field of data processing. It addresses the problem that numerous interference factors degrade the recognition accuracy of deep learning models, and it achieves high recognition accuracy and good anti-interference ability.

Examples

Embodiment 1

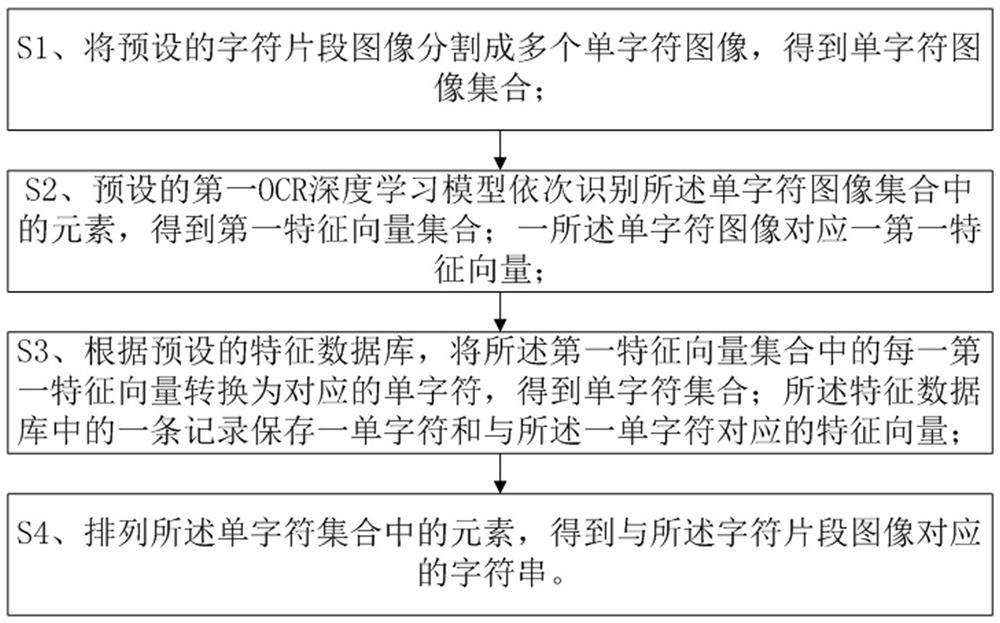

[0079] As shown in Figure 1, this embodiment provides an OCR recognition method based on a deep learning model, including:

[0080] S1. Divide a preset character segment image into multiple single-character images to obtain a single-character image set.

[0081] This embodiment trains the open-source deep learning object detection model RFCN to detect the position of each single character in the bill image, obtaining the coordinates of the upper-left and lower-right corners of each character's bounding rectangle on the bill image. Using the coordinates corresponding to each character, multiple single-character images are then cropped from the original bill image.
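The cropping step described above can be illustrated with a minimal sketch. It assumes the detector has already returned one box per character as `(x1, y1, x2, y2)` pixel coordinates, with the lower-right corner treated as exclusive. The function name `crop_characters`, the list-of-rows image representation, and the sample boxes are hypothetical and are not taken from the patent; the RFCN detection itself is not shown.

```python
from typing import List, Sequence, Tuple

# (x1, y1, x2, y2): upper-left and lower-right corners in pixels.
# Assumption: the lower-right corner is exclusive.
Box = Tuple[int, int, int, int]
Image = List[List[int]]  # grayscale image stored as rows of pixel values


def crop_characters(image: Image, boxes: Sequence[Box]) -> List[Image]:
    """Cut one sub-image per bounding box out of `image`."""
    height = len(image)
    width = len(image[0]) if height else 0
    crops: List[Image] = []
    for x1, y1, x2, y2 in boxes:
        # Clamp to the image bounds so a slightly oversized box cannot fail.
        x1, x2 = max(0, x1), min(width, x2)
        y1, y2 = max(0, y1), min(height, y2)
        if x2 <= x1 or y2 <= y1:
            continue  # skip empty or inverted boxes
        crops.append([row[x1:x2] for row in image[y1:y2]])
    return crops


# Toy 4x6 "image" and two hypothetical detector outputs.
image = [[r * 10 + c for c in range(6)] for r in range(4)]
boxes = [(0, 0, 3, 4), (3, 0, 6, 4)]
single_char_images = crop_characters(image, boxes)
```

In practice the image would more likely be an array from an imaging library, but the slicing logic stays the same: rows are selected by the `y` range and columns by the `x` range.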

[0082] For example, a character segment image contains the character segment "value-added tax invoice". The coordinates of each character are recognized through the target detection model, and the character segment image is divided according to the coordin...

Embodiment 2

[0127] As shown in Figure 4, this embodiment also provides an OCR recognition terminal based on a deep learning model, including one or more processors 1 and a memory 2. The memory 2 stores a program that is configured to be executed by the one or more processors 1 to perform the following steps:

[0128] S1. Divide a preset character segment image into multiple single-character images to obtain a single-character image set.

[0129] This embodiment trains the open-source deep learning object detection model RFCN to detect the position of each single character in the bill image, obtaining the coordinates of the upper-left and lower-right corners of each character's bounding rectangle on the bill image. Using the coordinates corresponding to each character, multiple single-character images are then cropped from the original bill image.

[0130] For example, a character segment image contains the character segment "va...
