A Method of Accurately Calibrating Robot Endpoint and Vision System

The invention relates to vision systems and robot technology, specifically the precise calibration of a robot end and a vision system. It addresses three problems with existing calibration methods: they cannot achieve three-dimensional coordinate transformation, one-dimensional information is hard to use in complex calculations, and accurate calibration cannot be achieved. The stated effects are improved overall operational efficiency, good application results, and ease of implementation.

Embodiment

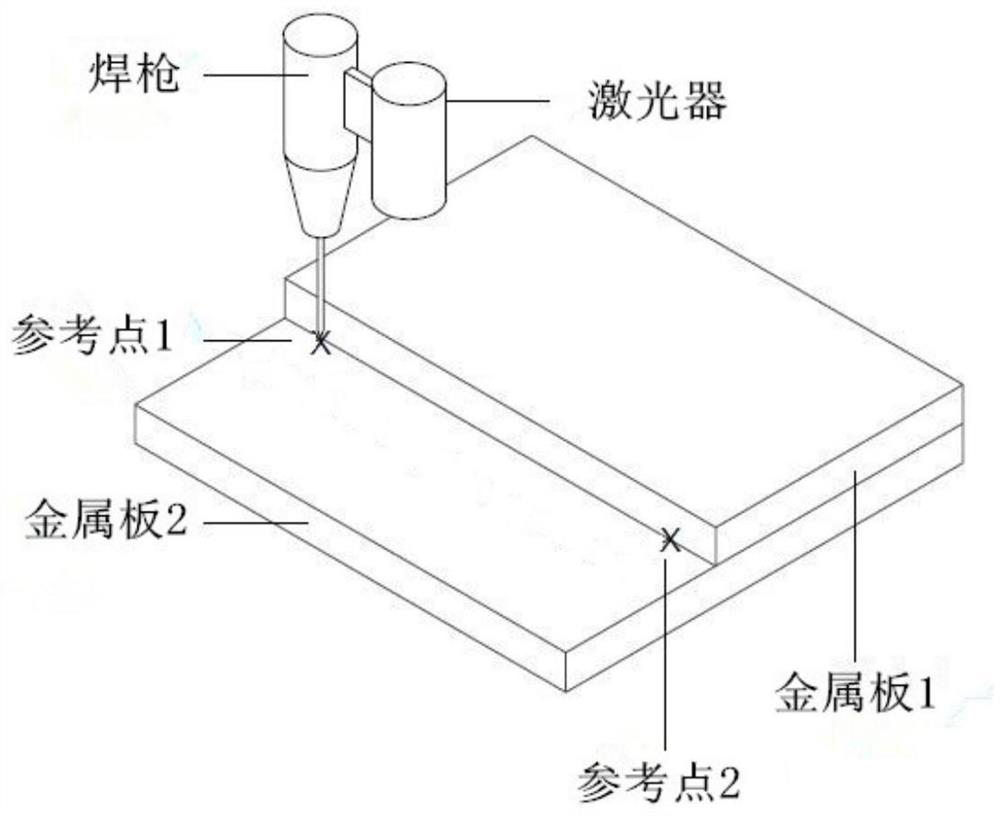

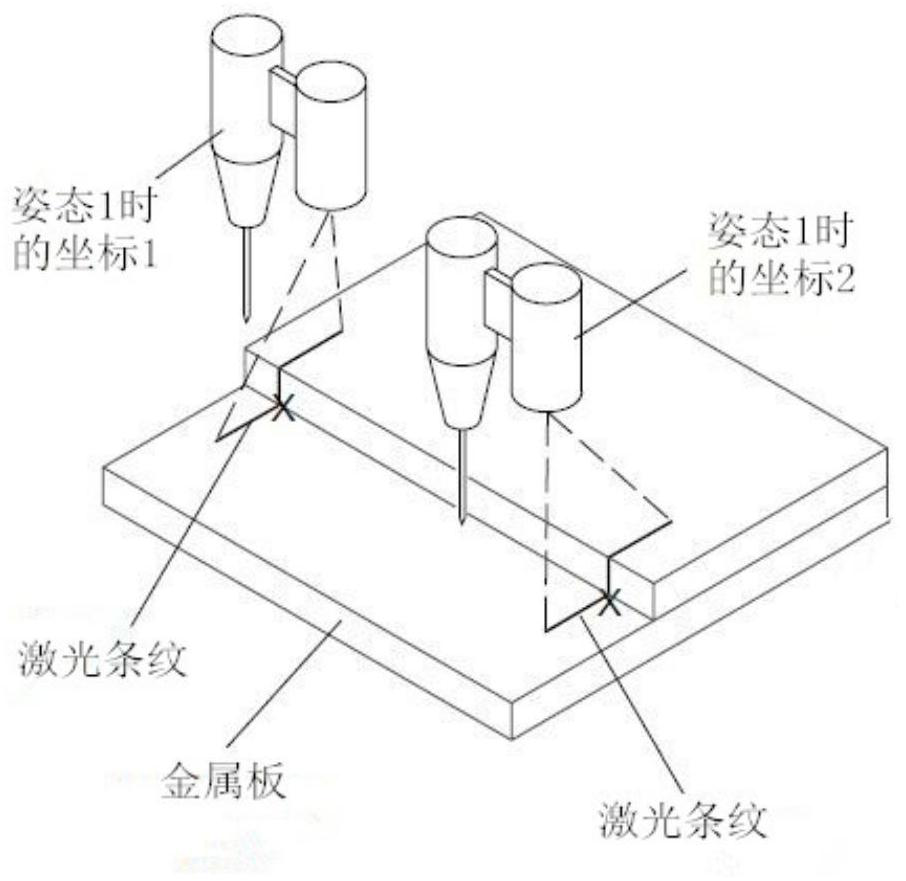

[0094] Applying the method of accurately calibrating the robot end and the vision system, an experiment is performed on reference point 1, which yields the following data:

[0095] (The measured values for reference point 1 appeared as inline formula images in the source and were not captured in the text.)

[0096] Let the matrix to be solved be:

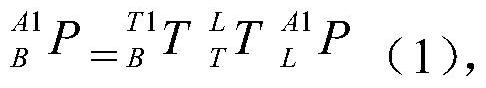
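The patent's own formulas are not reproduced in this text, so the following is only a generic sketch of what solving for an unknown transformation matrix from reference-point measurements can look like. It assumes (this is an assumption, not the patent's stated model) that the unknown is a 3x4 affine matrix [A | t] mapping vision-frame coordinates to robot-frame coordinates, and that exactly four non-coplanar reference points are available. The function names `solve_linear`, `fit_transform`, and `apply_transform` and the sample coordinates are hypothetical.

```python
def solve_linear(a, b):
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Build the augmented matrix [a | b] as floats.
    m = [[float(v) for v in row] + [float(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("reference points are degenerate (e.g. coplanar)")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution.
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def fit_transform(vision_pts, robot_pts):
    """Return a 3x4 matrix [A | t] such that robot = A * vision + t.

    Assumption: exactly four point pairs, with non-coplanar vision points.
    """
    if len(vision_pts) != 4 or len(robot_pts) != 4:
        raise ValueError("this sketch expects exactly four point pairs")
    design = [[x, y, z, 1.0] for x, y, z in vision_pts]
    # Each row of [A | t] is solved independently from one robot-frame coordinate.
    return [solve_linear(design, [q[i] for q in robot_pts]) for i in range(3)]


def apply_transform(transform, point):
    """Map a vision-frame point into the robot frame."""
    x, y, z = point
    return [row[0] * x + row[1] * y + row[2] * z + row[3] for row in transform]


# Hypothetical data: robot frame = vision frame rotated 90 degrees about z,
# then shifted by (10, 20, 30).
vision = [(0, 0, 0), (1, 0, 0), (0, 1, 0), (0, 0, 1)]
robot = [(10, 20, 30), (10, 21, 30), (9, 20, 30), (10, 20, 31)]
T = fit_transform(vision, robot)
mapped = apply_transform(T, (1, 1, 1))
```

With more than four reference points, or when the matrix must be a strict rotation plus translation, a least-squares fit would be the more usual choice; the exact-solve version is kept here only because it is short and dependency-free.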

[0097] Substituting the above measured values, together with the matrix to be solved, into formula (3) gives formula (1):

[0098]

[0099] Substituting the above measured values, together with the matrix to be solved, into formula (5) gives formula (2):

[0100]

[0101] Substituting the above measured values, together with the matrix to be solved, into formula (7) gives formula (3):

[0102]

[0103] Formulas (1), (2), and (3) can be rearranged to give formulas (4), (5), and (6), as follows:

[0104]

[0105]

[0106]

[0107] Rearranging formulas (4), (5), and (6) gives formulas (7), (8), and (9):

[0108...
