Moving object tracking method based on sample combination and deep detection network

A moving-target tracking technology based on a deep detection network, applied in the field of image processing. It addresses slow target recognition, target tracking failure, and heavy time consumption, and it achieves shorter target detection time and faster target recognition.



Detailed Description of Embodiments

[0034] The present invention will be further described below in conjunction with the accompanying drawings.

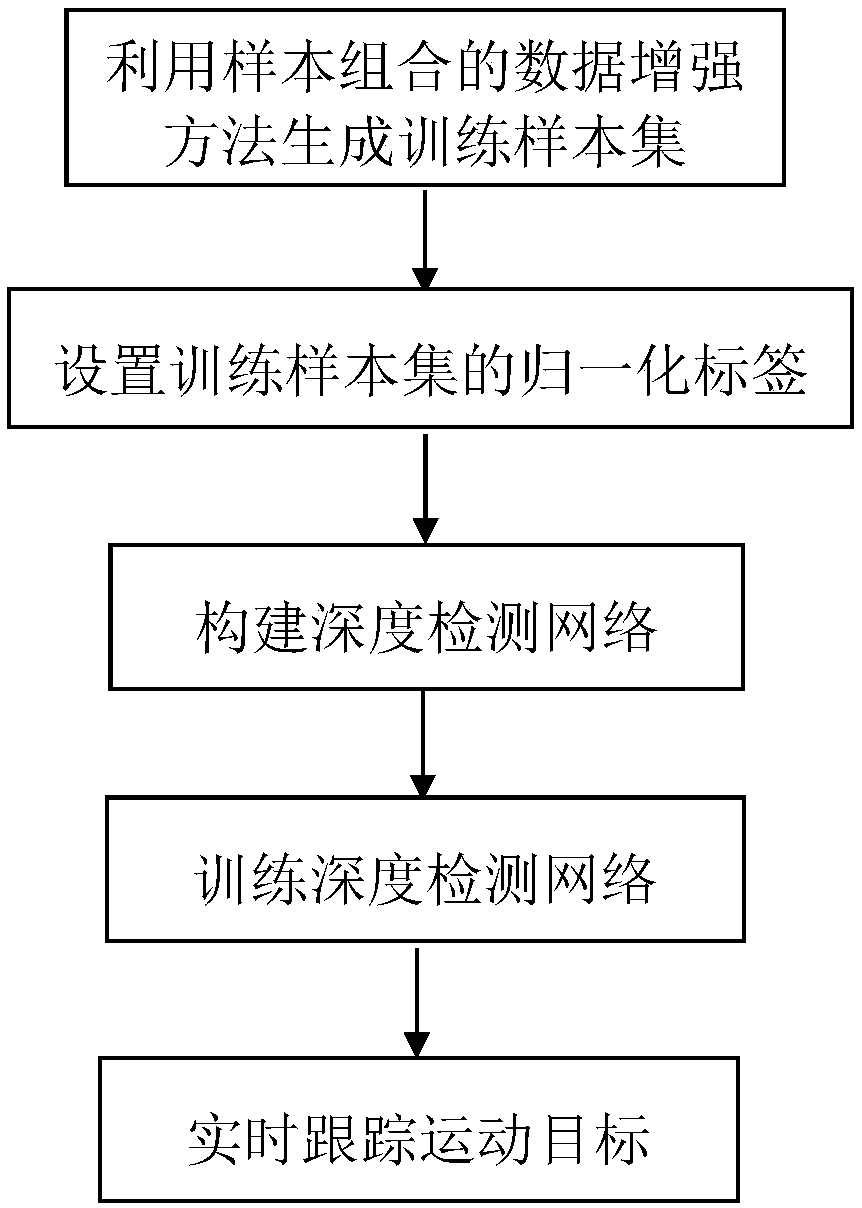

[0035] The specific steps of the present invention are further described with reference to Figure 1.

[0036] Step 1: generate a training sample set using the sample-combination data augmentation method.

[0037] Input the first frame of the color video image sequence containing the moving target to be tracked.

[0038] Pad zero-valued pixels onto the top, bottom, left, and right edges of the first frame simultaneously, adding 5 pixels each time. Repeat this 100 times to generate 100 enlarged images, which together form the small-scale sample set.
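The padding step in [0038] can be sketched as follows. This is a minimal illustration, not the patent's implementation. It assumes the padding is cumulative, so the k-th image has 5·k zero pixels on each edge. The function name `make_padded_samples`, its parameters, and the synthetic frame are hypothetical.

```python
import numpy as np

def make_padded_samples(frame, step=5, count=100):
    """Return `count` zero-padded copies of a color frame.

    Assumption: padding is cumulative, so the k-th copy (k = 1..count)
    has k * step zero-valued pixels on each of its four edges.
    """
    samples = []
    for k in range(1, count + 1):
        pad = k * step
        # Pad the height and width axes only; the color channel axis is untouched.
        padded = np.pad(
            frame,
            ((pad, pad), (pad, pad), (0, 0)),
            mode="constant",
            constant_values=0,
        )
        samples.append(padded)
    return samples

# Synthetic stand-in for the first color frame (height 48, width 64, 3 channels).
first_frame = np.full((48, 64, 3), 255, dtype=np.uint8)
small_scale_set = make_padded_samples(first_frame)
```

Each enlarged image keeps the original frame at its center, so the target appears progressively smaller relative to the full image as the border grows.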

[0039] In the first frame of the video, a rectangular frame is determined whose center is the center of the initial position of the moving target to be tracked and whose length and width are those of the target, and the image insid...
