An OCR method based on deep learning

A deep learning technique applied in the fields of instruments and of character and pattern recognition. It addresses the problem that recognition results in practice are not always satisfactory, and it achieves fast retrieval, fast processing speed, and improved work efficiency.


Embodiment Construction

[0035] The present invention is described in detail below with reference to the accompanying drawings and preferred embodiments, so that its purpose and effect become clearer. It should be understood that the specific embodiments described here are only used to explain the present invention, not to limit it.

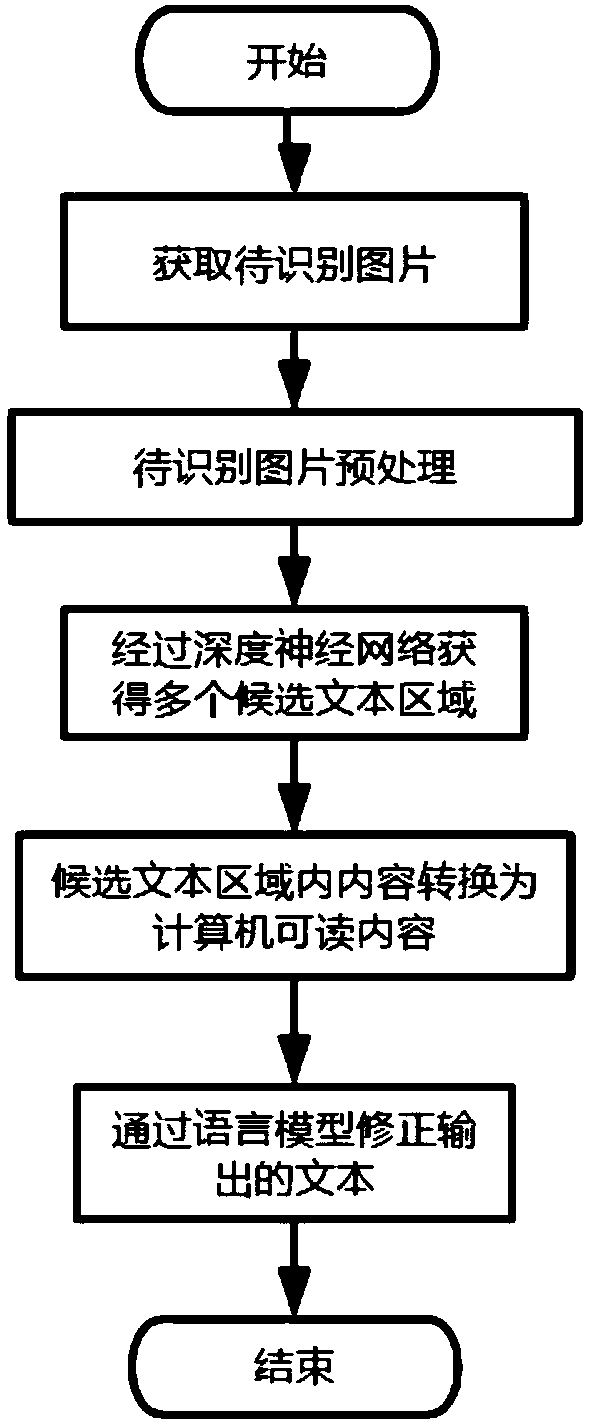

[0036] As shown in Figure 1, the OCR method based on deep learning of the present invention comprises the following steps:

[0037] S1: Acquiring the image to be recognized;

[0038] S2: Scaling the image to be recognized, and then preprocessing the scaled image, where the preprocessing is any one or more of sharpening, grayscale conversion, binarization, tilt correction, noise reduction, and official seal removal;

[0039] In the image preprocessing, the official seal is removed by the following method:

[0040] S2.1:...
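Three of the preprocessing options named in step S2 (scaling, grayscale conversion, and binarization) can be illustrated with a minimal sketch. The source does not specify which algorithms are used, so everything here is an assumption made for illustration: nearest-neighbor scaling, BT.601 luma weights for grayscale, and Otsu's method for choosing the binarization threshold. The function names are hypothetical, and the seal-removal sub-steps are not covered because they are not given in the text above.

```python
from typing import List, Tuple

RGBImage = List[List[Tuple[int, int, int]]]  # rows of (r, g, b) pixels
GrayImage = List[List[int]]                  # rows of 0..255 intensities


def to_grayscale(rgb: RGBImage) -> GrayImage:
    """Convert RGB to grayscale with BT.601 luma weights (an assumed choice)."""
    return [
        [min(255, int(round(0.299 * r + 0.587 * g + 0.114 * b))) for (r, g, b) in row]
        for row in rgb
    ]


def scale_nearest(img: GrayImage, new_w: int, new_h: int) -> GrayImage:
    """Resize by nearest-neighbor sampling (an assumed choice of interpolation)."""
    old_h, old_w = len(img), len(img[0])
    return [
        [img[y * old_h // new_h][x * old_w // new_w] for x in range(new_w)]
        for y in range(new_h)
    ]


def otsu_threshold(img: GrayImage) -> int:
    """Pick the threshold that maximizes between-class variance (Otsu's method)."""
    hist = [0] * 256
    for row in img:
        for v in row:
            hist[v] += 1
    total = sum(hist)
    sum_all = sum(i * h for i, h in enumerate(hist))
    best_t, best_var = 0, -1.0
    w_bg, sum_bg = 0, 0
    for t in range(256):
        w_bg += hist[t]            # pixels with intensity <= t
        if w_bg == 0:
            continue
        w_fg = total - w_bg        # pixels with intensity > t
        if w_fg == 0:
            break
        sum_bg += t * hist[t]
        mean_bg = sum_bg / w_bg
        mean_fg = (sum_all - sum_bg) / w_fg
        var = w_bg * w_fg * (mean_bg - mean_fg) ** 2
        if var > best_var:
            best_var, best_t = var, t
    return best_t


def binarize(img: GrayImage, threshold: int) -> GrayImage:
    """Map pixels above the threshold to 255 and all others to 0."""
    return [[255 if v > threshold else 0 for v in row] for row in img]


if __name__ == "__main__":
    # Tiny synthetic 2x2 image: dark pixels on the left, bright on the right.
    sample: RGBImage = [
        [(0, 0, 0), (255, 255, 255)],
        [(10, 10, 10), (250, 250, 250)],
    ]
    gray = scale_nearest(to_grayscale(sample), 4, 4)
    for row in binarize(gray, otsu_threshold(gray)):
        print(row)
```

In practice an image library would usually replace these pure-Python loops; the sketch only shows the order of operations that S2 describes, scaling first and then preprocessing the scaled image.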
