Multi-pipeline scheduling method for distributed memory database

A pipelined, distributed scheduling technology in the computer field. It addresses delayed response times, high cost, and the inability to preempt SQL queries, and it speeds up response times and shortens execution times.


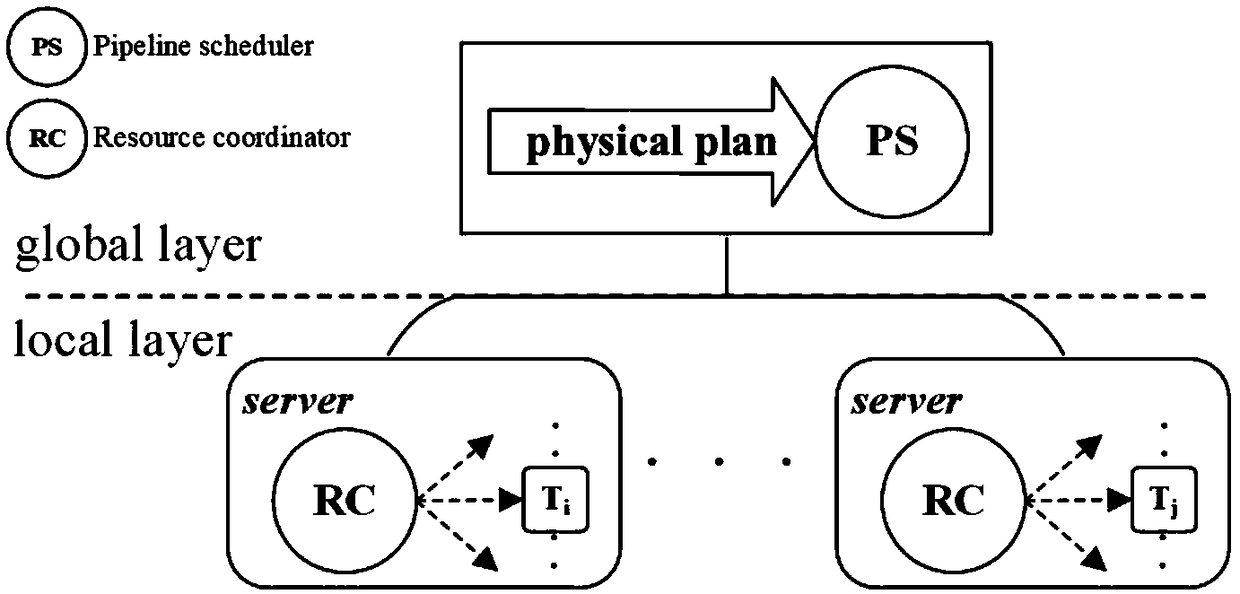


Examples

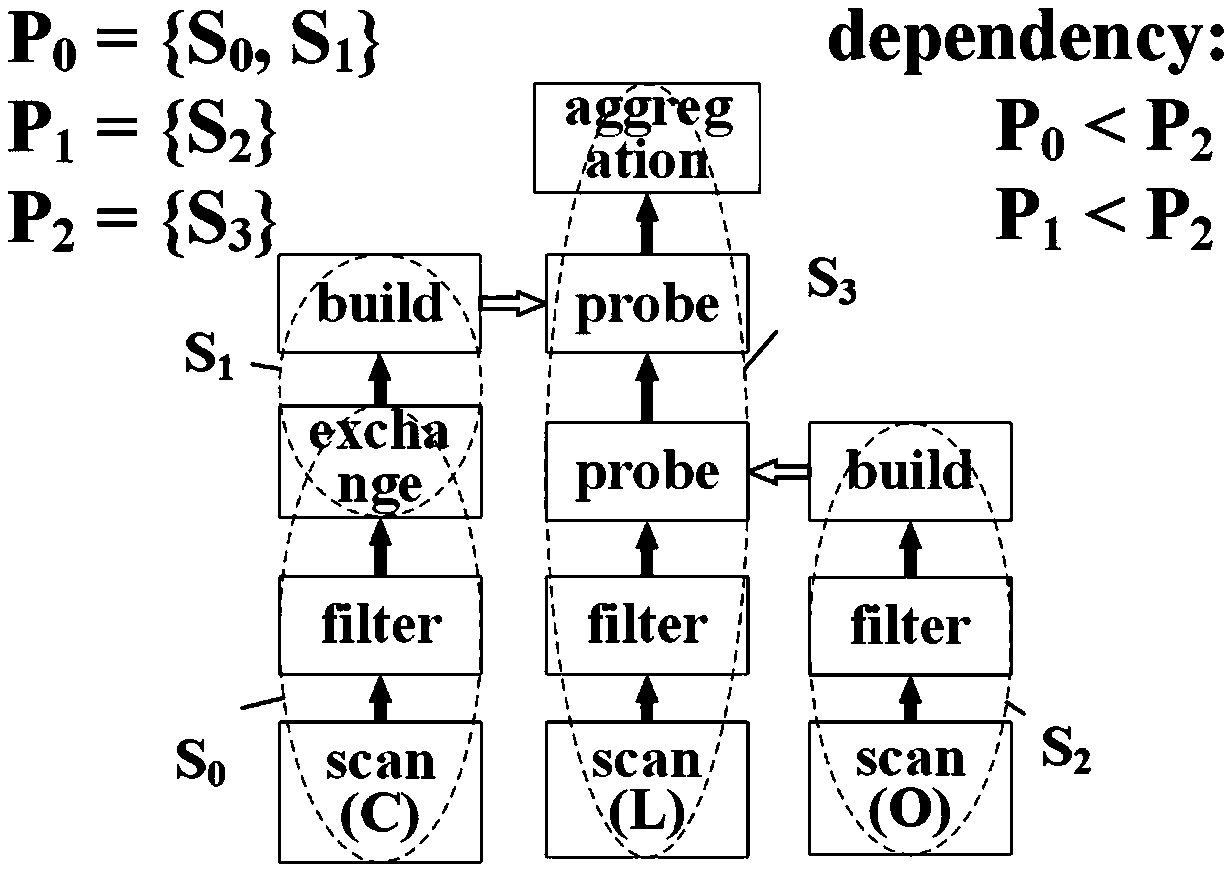

Embodiment 1

[0079] Embodiment 1: We illustrate how the algorithm of the present invention shortens the execution time of an SQL query by running the third query (Q3) of the TPC-H benchmark. The physical execution plan of Q3 is shown in Figure 2. It contains three pipelines (Pipeline, abbreviated P). The data transmission operator (exchange) divides each pipeline into multiple stages (Stage, abbreviated S). Each instantiation of a stage on a data slice is a task. The three pipelines have a dependency relationship: P2 depends on P0 and P1, but P0 and P1 do not depend on each other, so these two pipelines can be executed in parallel. In addition, because P0 involves network transmission, it is a network-sensitive pipeline. P1, by contrast, is a compute-sensitive pipeline. Here we assume that P0 has priority over P1. To illustrate the situation, let's assu...
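
The dependency and priority rules of Q3 can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the patented algorithm. The `Pipeline` class, the `ready_pipelines` and `assign_roles` helpers, and the numeric priorities are all hypothetical. The sketch also assumes one policy: the highest-priority ready pipeline becomes the main pipeline, and the remaining ready pipelines become secondary pipelines that fill idle resources. This borrows the main/secondary terminology of Embodiment 2.

```python
from dataclasses import dataclass, field


@dataclass
class Pipeline:
    name: str
    priority: int   # hypothetical: larger value = higher priority
    kind: str       # resource sensitivity ("network" or "compute"); descriptive only, not used for ordering
    deps: set = field(default_factory=set)  # names of pipelines that must finish first


def ready_pipelines(pipelines, finished):
    """Return unfinished pipelines whose dependencies have all finished, highest priority first."""
    ready = [p for p in pipelines if p.name not in finished and p.deps <= finished]
    return sorted(ready, key=lambda p: p.priority, reverse=True)


def assign_roles(ready):
    """Hypothetical policy: the first (highest-priority) ready pipeline is the main
    pipeline; the others are secondary pipelines that only fill idle resources."""
    if not ready:
        return None, []
    return ready[0], ready[1:]


# Q3 as described above: P2 depends on P0 and P1; P0 has priority over P1.
q3 = [
    Pipeline("P0", priority=2, kind="network"),
    Pipeline("P1", priority=1, kind="compute"),
    Pipeline("P2", priority=0, kind="compute", deps={"P0", "P1"}),
]

main, secondary = assign_roles(ready_pipelines(q3, finished=set()))
print(main.name, [p.name for p in secondary])  # P0 ['P1']

main, secondary = assign_roles(ready_pipelines(q3, finished={"P0", "P1"}))
print(main.name, [p.name for p in secondary])  # P2 []
```

Initially P0 and P1 run side by side because neither depends on the other. P2 becomes schedulable only once both have finished. Pairing a network-sensitive main pipeline with a compute-sensitive secondary one is what lets the two share a cluster without contending for the same resource.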

Embodiment 2

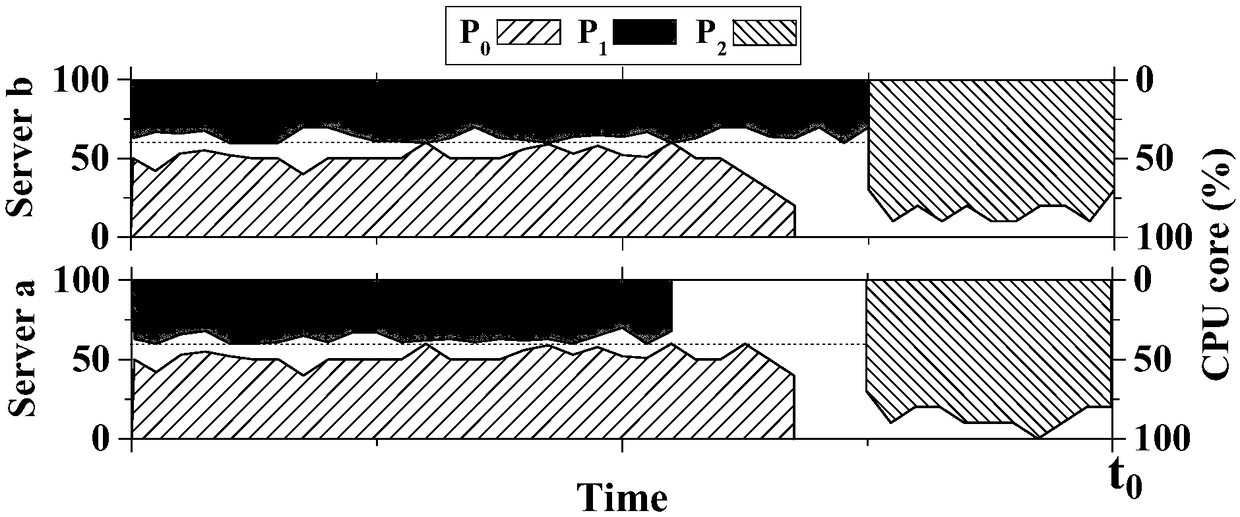

[0082] Embodiment 2: To describe preemption among multiple SQL queries in detail, we introduce the first query (Q1) of TPC-H. It contains only one compute-intensive pipeline, denoted P3. In Figures 4a and 4b, we assume that Q1 enters the system when P0 and P1 have finished. We then compare two cases: preemption does or does not occur at this moment. Figure 4a shows the case without preemption. At time t1, when Q1 enters, its P3 acts as a secondary pipeline that fills the idle resources left by the unfinished P2 of Q3. After P2 completes, P3 may monopolize the cluster's resources, but some CPU resources will still not be fully utilized. By analogy, pipelines from other SQL statements can fill these idle resources. Figure 4b shows the case in which Q1 is given a higher priority. After entering the system, Q1 preempts the running resources of Q3, so P2 becomes the secondary pipeline while P3 becomes the main pipeline. ...
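
The two cases of Embodiment 2, with and without preemption, can be sketched as a choice of ordering among the ready pipelines of all active queries. This is a hypothetical illustration, not the patented scheduler. The `ReadyPipeline` class, the `choose_main` helper, and the numeric priorities and arrival times in the example are assumptions. So is the rule that, without preemption, the earliest-arriving query keeps the main slot.

```python
from dataclasses import dataclass


@dataclass
class ReadyPipeline:
    query: str
    name: str
    query_priority: int  # hypothetical: larger value = higher priority
    arrival: float       # time at which the owning query entered the system


def choose_main(ready, preemptive):
    """Return (main, secondaries) among the pipelines that are ready to run.

    Without preemption, the earliest-arriving query keeps the main slot and later
    queries only fill idle resources. With preemption, the highest-priority query
    takes the main slot. Ties are broken by the other field in each case.
    """
    if not ready:
        return None, []
    if preemptive:
        ordered = sorted(ready, key=lambda p: (-p.query_priority, p.arrival))
    else:
        ordered = sorted(ready, key=lambda p: (p.arrival, -p.query_priority))
    return ordered[0], ordered[1:]


# At time t1: P2 of Q3 is still running, and Q1 (higher priority) arrives with P3.
p2 = ReadyPipeline("Q3", "P2", query_priority=1, arrival=0.0)
p3 = ReadyPipeline("Q1", "P3", query_priority=2, arrival=5.0)

main, sec = choose_main([p2, p3], preemptive=False)
print(main.name, [p.name for p in sec])  # P2 ['P3']  (no preemption)

main, sec = choose_main([p2, p3], preemptive=True)
print(main.name, [p.name for p in sec])  # P3 ['P2']  (Q1 preempts Q3)
```

In both cases the secondary pipeline still runs on whatever resources the main pipeline leaves idle. Preemption changes only which query's pipeline has first claim on the cluster.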
