Neural machine translation incorporating dependencies

A dependency-aware machine translation technique, applied in natural language translation and computing. It addresses two shortcomings of existing methods: they do not consider linguistic information, and they do not consider the correlation between source-side hidden states. The aim is to improve translation quality.

Embodiment

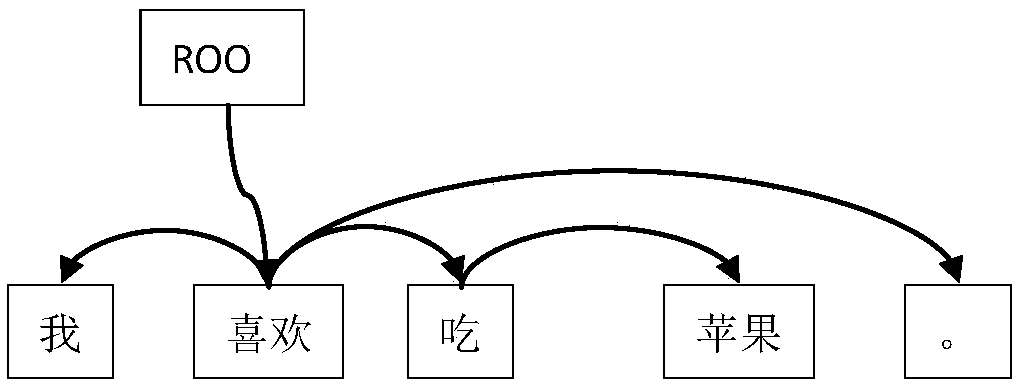

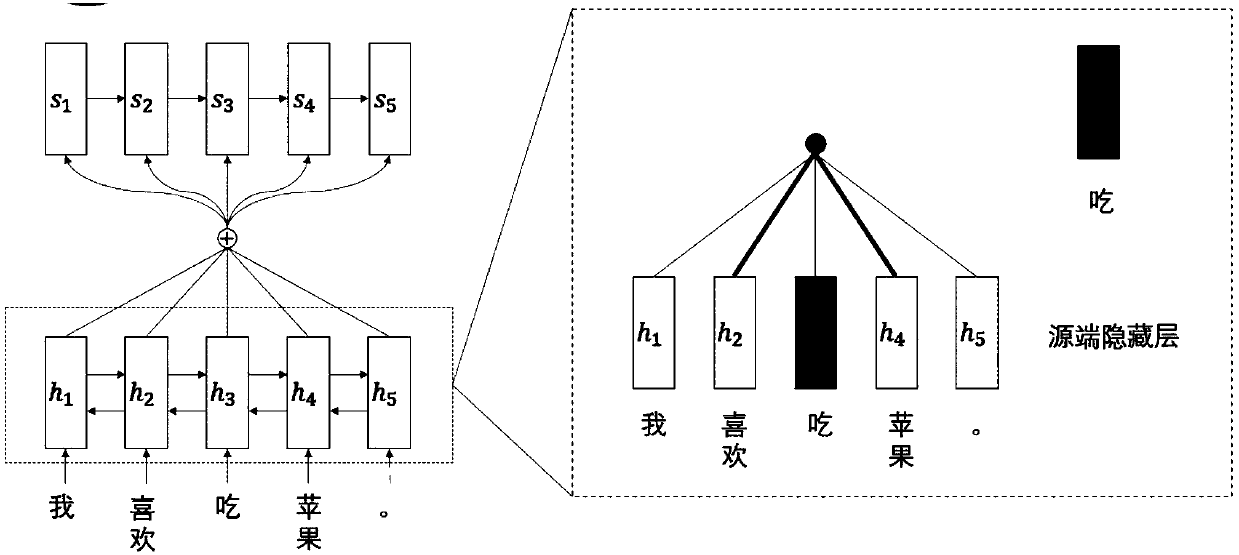

[0054] Figure 1 shows a dependency tree used by the dependency-incorporating neural machine translation method of this embodiment. The tree is produced by the Stanford Parser. Each arrow starts at a parent node and points to a child node. In Figure 1, "eating" is more closely related to "like" and "apple" than to the other words in the sentence. The present invention adds guidance at the source side: a dependency correlation loss is added at the source end to guide the correlation between the hidden states there. This guidance loss, formed at the source end of the network, is used to guide neural machine translation (NMT).
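The sketch below makes the parent/child arrow convention concrete. It stores a dependency tree as a head array and recovers the words that a given word is directly linked to. The example sentence, its parse, and the helper names `dependency_edges` and `related_tokens` are hypothetical illustrations. The patent does not specify how parser output is represented.

```python
# Hypothetical representation: heads[i] is the index of token i's parent,
# and -1 marks the root. Each arrow runs from heads[i] (parent) to i (child).

def dependency_edges(heads):
    """Return one (parent, child) pair per non-root token."""
    return [(h, i) for i, h in enumerate(heads) if h >= 0]


def related_tokens(heads, i):
    """Indices directly linked to token i: its parent (if any) plus its children."""
    parent = [heads[i]] if heads[i] >= 0 else []
    children = [j for j, h in enumerate(heads) if h == i]
    return parent + children


# Illustrative parse of "I like eating apples" (assumed, not read off Figure 1):
# "like" is the root, "eating" depends on "like", "apples" depends on "eating".
tokens = ["I", "like", "eating", "apples"]
heads = [1, -1, 1, 2]

print(dependency_edges(heads))                          # [(1, 0), (1, 2), (2, 3)]
print([tokens[j] for j in related_tokens(heads, 2)])    # ['like', 'apples']
```

Under this assumed parse, "eating" is linked to exactly "like" and "apples". This mirrors the relationship described for Figure 1.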

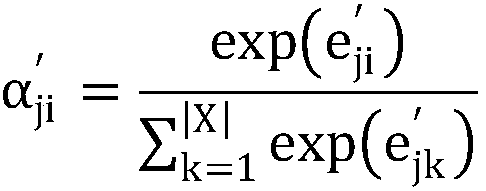

[0055] For a sentence pair (X, Y), the overall loss of the proposed network is defined as follows:

[0056]

$$\text{loss} = -\log P(Y \mid X) + \Delta_{\mathrm{dep}}$$

[0057] Here, $-\log P(Y \mid X)$ is the cross-entropy loss and $\Delta_{\mathrm{dep}}$ is the dependency correlation loss. This guidance loss steers NMT in modeling the relationships between source-side hidden states.
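The sketch below shows how the two terms of the overall loss combine. The cross-entropy term uses the standard autoregressive factorization $P(Y \mid X) = \prod_t P(y_t \mid y_{<t}, X)$. The excerpt does not define $\Delta_{\mathrm{dep}}$. The `dependency_loss` below is therefore an assumed stand-in, not the patented formula. It takes the mean of (1 minus the cosine similarity) between the source hidden states of each dependency-linked (parent, child) pair. All function names are hypothetical.

```python
import math


def cross_entropy(token_probs):
    """-log P(Y|X), where token_probs[t] is P(y_t | y_<t, X)."""
    return -sum(math.log(p) for p in token_probs)


def cosine(u, v):
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm > 0 else 0.0


def dependency_loss(hidden, edges):
    """HYPOTHETICAL Delta_dep: mean (1 - cosine) over (parent, child) edges.

    This formula is an assumption. The source excerpt does not specify it.
    """
    if not edges:
        return 0.0
    return sum(1.0 - cosine(hidden[p], hidden[c]) for p, c in edges) / len(edges)


def total_loss(token_probs, hidden, edges):
    # loss = -log P(Y|X) + Delta_dep, following paragraph [0056]
    return cross_entropy(token_probs) + dependency_loss(hidden, edges)


# Toy values: two target tokens, and three source hidden states of size 2.
token_probs = [0.5, 0.25]
hidden = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
edges = [(0, 1), (0, 2)]  # (parent, child) pairs from a dependency tree

print(round(total_loss(token_probs, hidden, edges), 4))  # 2.5794
```

Here the cross-entropy term is $-\ln 0.5 - \ln 0.25 = 3\ln 2 \approx 2.0794$. For the assumed dependency term, one edge joins identical states (contributing 0) and one joins orthogonal states (contributing 1), so its mean is 0.5. Lower values of this stand-in push linked words' hidden states toward each other. That is one plausible reading of "guiding the correlation between hidden states".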

[0058] As the commonly used neural machine translat...
