Regularized auto-encoding text embedding representation method based on local topic probability generation

An embedding representation and auto-encoding technique applied in the fields of natural language processing and machine learning. It addresses two problems: the semantic features of out-of-sample text are difficult to estimate effectively, and the smoothness of the embedding is not effectively maintained. The stated effect is that smoothness is maintained.


AI Technical Summary

Problems solved by technology

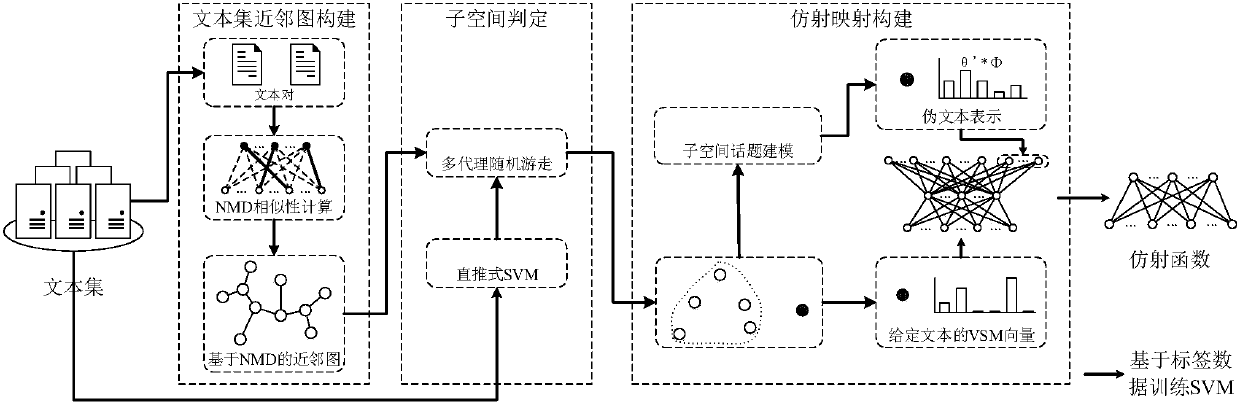

Method used

Image

Examples

Embodiment Construction

[0063] To better illustrate the purpose and advantages of the present invention, the implementation of the method is described in further detail below in conjunction with examples.

[0064] Three public data sets are selected: 20newsgroups, Amazon reviews and RCV1. 20newsgroups contains 20 news discussion groups on different topics. Amazon reviews consists of more than 1.4 million reviews of products on the Amazon website, from which the reviews for 10 product categories are selected. RCV1 contains over 800,000 manually categorized press release stories, from which the text of 3 subtopics is selected.

[0065] To verify that the parametric affine mapping established by the method of the present invention improves the smoothness of the embedding vectors of out-of-sample text, and thereby improves text clustering and classification, the K-means algorithm is used for text clustering experiments and the 1-NN algorithm is used for Te...
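The evaluation setup named above, K-means for clustering and 1-NN for classification, can be sketched on toy vectors as follows. This is a minimal illustration only, not the patent's implementation: the 2-D points stand in for text embedding vectors, the function names (`kmeans`, `one_nn_predict`, `one_nn_accuracy`) are hypothetical, and the use of plain accuracy as the score is an assumption, since the source text is truncated before it names a metric.

```python
import random


def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points, k, iterations=20, seed=0):
    """Lloyd's algorithm; returns one cluster index per input point."""
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]
    assignments = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        assignments = [
            min(range(k), key=lambda c: squared_distance(p, centroids[c]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return assignments


def one_nn_predict(train_vectors, train_labels, query):
    """Return the label of the training vector closest to the query."""
    nearest = min(
        range(len(train_vectors)),
        key=lambda i: squared_distance(train_vectors[i], query),
    )
    return train_labels[nearest]


def one_nn_accuracy(train_vectors, train_labels, test_vectors, test_labels):
    """Fraction of test vectors whose 1-NN prediction matches the true label."""
    correct = sum(
        one_nn_predict(train_vectors, train_labels, v) == y
        for v, y in zip(test_vectors, test_labels)
    )
    return correct / len(test_labels)


# Toy stand-ins for embedding vectors (assumption: real vectors would come
# from the trained encoder; "held-out" plays the role of out-of-sample text).
train_vectors = [[0.0, 0.1], [0.2, 0.0], [5.0, 5.1], [5.2, 4.9]]
train_labels = ["a", "a", "b", "b"]
held_out_vectors = [[0.1, 0.2], [4.9, 5.0]]
held_out_labels = ["a", "b"]

accuracy = one_nn_accuracy(train_vectors, train_labels, held_out_vectors, held_out_labels)
clusters = kmeans(train_vectors + held_out_vectors, k=2)
```

In this sketch the held-out vectors are scored against labeled training vectors, which mirrors the idea of checking whether out-of-sample embeddings land near in-sample embeddings of the same class.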
