Adversarial cross-media retrieval method based on a limited text space

A cross-media adversarial technique, applied in the field of computer vision, that addresses three problems: the loss of action and interaction information in images, features that are ill-suited to cross-media retrieval, and the resulting degradation of cross-media retrieval performance.

Embodiment Construction

[0063] The present invention is further described below through embodiments, with reference to the accompanying drawings, without limiting the scope of the invention in any way.

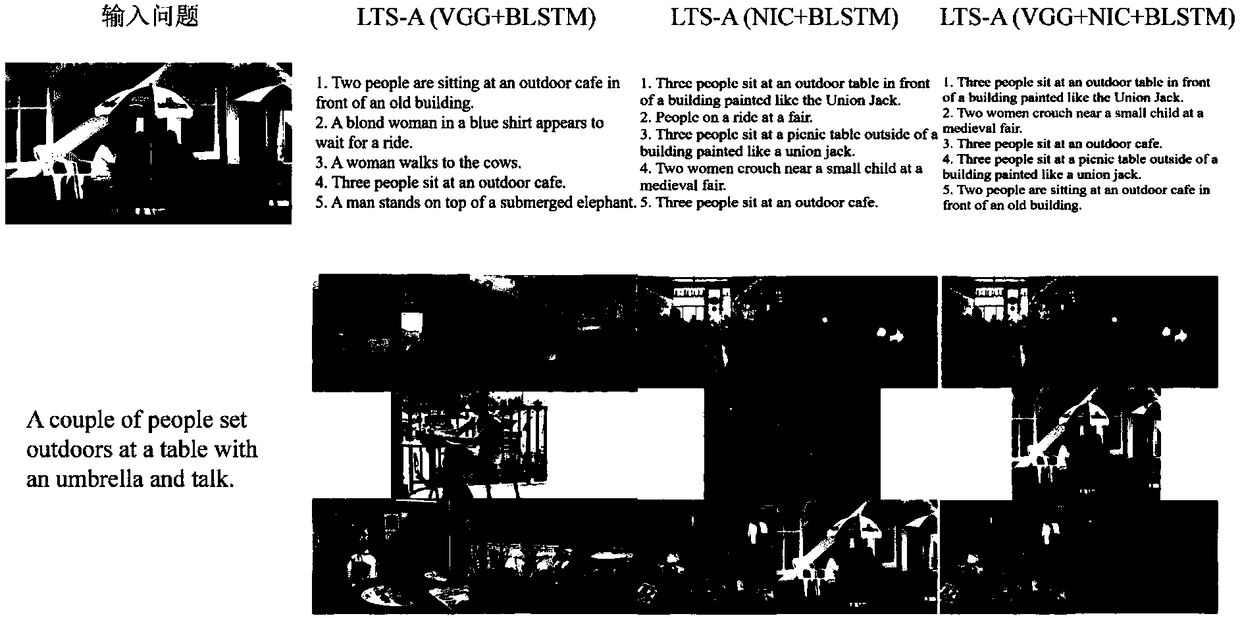

[0064] The invention provides an adversarial cross-media retrieval method based on a limited text space. The method learns the limited text space and uses it to measure the similarity between images and texts. Working in this limited text space, it extracts image and text features suited to cross-media retrieval by simulating human cognition, maps image features from the image space to the text space, and introduces an adversarial training mechanism that aims to continuously reduce the difference in feature distribution between data of different modalities during learning. The feature extraction network, feature mapping network, modality classifier and their implementation in the present invention, as well as the training steps of the network are described i...
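The interplay of feature mapping, similarity measurement, and the modality classifier described above can be illustrated with a minimal sketch. It is not the patented implementation: the feature dimensions, the single linear mapping, the cosine similarity, the hinge ranking loss, the logistic modality classifier, and the names `MARGIN` and `LAMBDA` are all assumptions chosen only to make the idea concrete.

```python
import math
import random

def linear(W, b, x):
    """Affine map y = W x + b, with W given as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]

def cosine(u, v):
    """Cosine similarity between two vectors in the text space."""
    dot = sum(a * c for a, c in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(c * c for c in v))
    return dot / (nu * nv + 1e-12)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def modality_loss(w, c, feat, is_text):
    """Binary cross-entropy of a logistic classifier that guesses the modality."""
    p = sigmoid(sum(wi * fi for wi, fi in zip(w, feat)) + c)
    p = min(max(p, 1e-12), 1.0 - 1e-12)  # guard against log(0)
    return -math.log(p) if is_text else -math.log(1.0 - p)

random.seed(0)
IMG_DIM, TXT_DIM = 4, 3  # hypothetical feature sizes

# Hypothetical feature mapping network: one linear layer, image space -> text space.
W = [[random.uniform(-1, 1) for _ in range(IMG_DIM)] for _ in range(TXT_DIM)]
b = [0.0] * TXT_DIM

image_feat = [0.2, -0.5, 0.9, 0.1]   # stand-in for an extracted image feature
text_match = [0.3, 0.8, -0.1]        # stand-in for the matching text feature
text_other = [-0.7, 0.1, 0.4]        # stand-in for a non-matching text feature

# Similarity is measured in the text space after mapping the image feature.
mapped = linear(W, b, image_feat)
s_pos = cosine(mapped, text_match)
s_neg = cosine(mapped, text_other)

# Assumed retrieval loss: hinge ranking loss with a margin.
MARGIN = 0.2
ranking_loss = max(0.0, MARGIN - s_pos + s_neg)

# Hypothetical modality classifier: tries to tell mapped image features from text features.
clf_w = [random.uniform(-1, 1) for _ in range(TXT_DIM)]
clf_c = 0.0
d_loss = (modality_loss(clf_w, clf_c, mapped, is_text=False)
          + modality_loss(clf_w, clf_c, text_match, is_text=True))

# Adversarial objective for the mapper (assumed form): retrieve well while
# making the two modalities harder for the classifier to separate.
LAMBDA = 0.1
mapper_objective = ranking_loss - LAMBDA * d_loss

print("similarity (match, other):", s_pos, s_neg)
print("ranking loss:", ranking_loss)
print("classifier loss:", d_loss)
print("mapper objective:", mapper_objective)
```

In a training loop under these assumptions, the classifier would be updated to lower `d_loss` while the mapping would be updated to lower `mapper_objective`, which is one common way to push the feature distributions of the two modalities closer together.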
