Tensor-based convolutional network method for extracting high-dimensional features

A convolutional network technique in the field of convolution based on dimension separability and feature fusion. It addresses the high computational complexity and large number of parameters of high-dimensional convolutional neural networks, which make such networks more difficult to work with.

Embodiment Construction

[0019] 1. The input multi-dimensional signal (an N-order tensor) passes in turn through several separation-fusion modules, each followed by its pooling layer. Typically, three separation-fusion modules are used, and each one is followed by a max pooling layer;

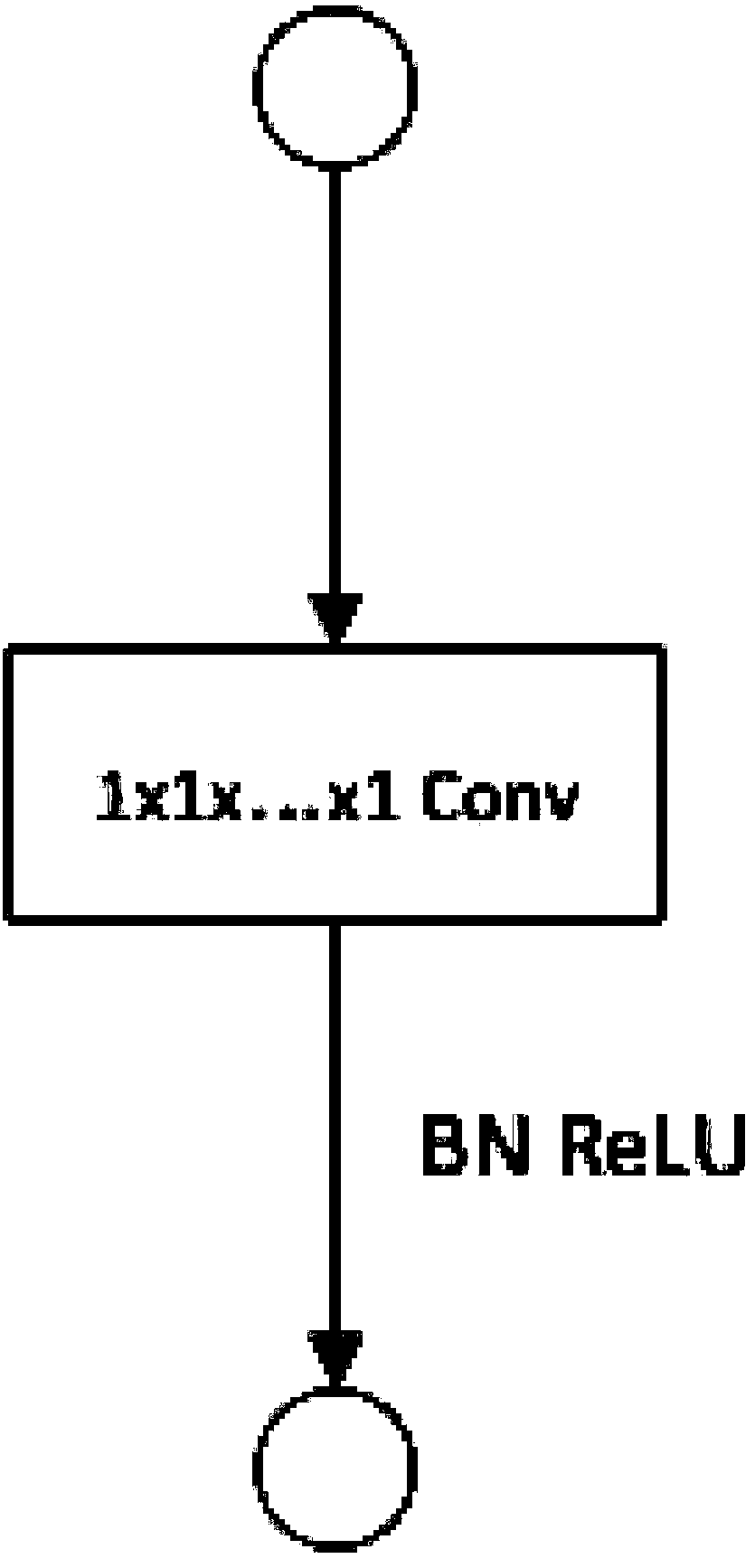
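The stage layout in step 1 can be traced as a sequence of tensor shapes. The sketch below is a minimal illustration under two assumptions that the source does not state: each separation-fusion module keeps the tensor shape unchanged, and each max pooling layer uses a non-overlapping window of size 2 on every mode, dropping remainders. The function name `stage_shapes` and its parameters are hypothetical.

```python
def stage_shapes(input_shape, num_modules=3, pool=2):
    """Return the tensor shape after each separation-fusion + max-pool stage.

    Assumptions (not from the source): every separation-fusion module is
    shape-preserving, and every max pooling layer uses a non-overlapping
    window of size `pool` on every mode, discarding trailing remainders.
    """
    shapes = [tuple(input_shape)]
    for _ in range(num_modules):
        after_module = shapes[-1]  # module assumed to keep the shape
        # Pooling divides each mode length by the window size (floor).
        shapes.append(tuple(s // pool for s in after_module))
    return shapes


print(stage_shapes((32, 32, 32)))
# [(32, 32, 32), (16, 16, 16), (8, 8, 8), (4, 4, 4)]
```

Under these assumptions the tensor order N never changes; only the length of each mode shrinks, so three stages reduce the number of elements by a factor of 8^3 for a third-order input.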

[0020] 2. In each separation-fusion module, the input tensor is first unfolded into N matrices by the tensor unfolding operation (one matrix per mode), and features are extracted from each matrix by a separable convolution component, giving N groups of feature matrices. Each group is then folded back by the tensor folding operation, yielding N tensors of order N. These N tensors are input to the feature fusion module, which fuses them through the fusion map and outputs a single N-order tensor;
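The unfold, convolve, fold, and fuse sequence of step 2 can be sketched in NumPy as follows. This is a minimal sketch under assumptions that the source does not confirm: the unfolding uses NumPy's row-major column ordering (any fixed ordering works as long as folding uses the same one), the "separable convolution component" is modeled as a 1-D convolution along mode n applied to every column of the mode-n unfolding, and the "fusion map" is modeled as a weighted sum of the N branch tensors. The names `unfold`, `fold`, `conv_mode`, and `separation_fusion` are hypothetical.

```python
import numpy as np


def unfold(x: np.ndarray, mode: int) -> np.ndarray:
    """Mode-n unfolding: rows index `mode`, columns index all other modes."""
    return np.moveaxis(x, mode, 0).reshape(x.shape[mode], -1)


def fold(m: np.ndarray, mode: int, shape: tuple) -> np.ndarray:
    """Inverse of unfold: rebuild the N-order tensor with the given shape."""
    moved = (shape[mode],) + tuple(s for i, s in enumerate(shape) if i != mode)
    return np.moveaxis(m.reshape(moved), 0, mode)


def conv_mode(m: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Assumed separable convolution: a 1-D 'same' convolution down each
    column of the unfolded matrix, i.e. along mode n of the tensor."""
    if len(kernel) > m.shape[0]:
        raise ValueError("kernel is longer than the mode it convolves")
    return np.apply_along_axis(lambda col: np.convolve(col, kernel, mode="same"), 0, m)


def separation_fusion(x, kernels, weights):
    """One separation-fusion module (sketch): unfold along each of the N
    modes, convolve, fold back, then fuse the N branches into one tensor."""
    branches = []
    for n in range(x.ndim):
        features = conv_mode(unfold(x, n), kernels[n])  # one group of feature matrices
        branches.append(fold(features, n, x.shape))  # one N-order tensor per mode
    # Assumed fusion map: a weighted sum of the N branch tensors.
    return sum(w * b for w, b in zip(weights, branches))


x = np.random.default_rng(0).standard_normal((8, 8, 8))
kernels = [np.array([0.25, 0.5, 0.25])] * x.ndim
y = separation_fusion(x, kernels, weights=[1 / x.ndim] * x.ndim)
print(y.shape)  # (8, 8, 8)
```

With identity kernels `[0, 1, 0]` and equal weights 1/N, the module returns its input unchanged, which is a quick check that unfolding and folding are exact inverses. Convolving each unfolding along a single mode is what makes the sketch dimension-separable: each branch costs a 1-D kernel per mode instead of one N-D kernel.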

[0021] 3. The features output by each separation-fusion module are down-sampled by the max pooling layer that follows it;
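Max pooling over an N-order tensor can be written with a single reshape. The sketch below assumes a non-overlapping window of size `k` along every mode, with trailing entries that do not fill a full window discarded; the source specifies only "max pooling", so the window size, stride, and edge handling here are assumptions, and `max_pool_nd` is a hypothetical name.

```python
import numpy as np


def max_pool_nd(x: np.ndarray, k: int = 2) -> np.ndarray:
    """Non-overlapping max pooling with window k along every mode of x.

    Assumption: stride equals the window size, and trailing entries that
    do not fill a full window are dropped.
    """
    # Drop trailing entries so every mode length is a multiple of k.
    trimmed = x[tuple(slice(0, (s // k) * k) for s in x.shape)]
    # Split each mode of length s into (s // k, k): block index, offset in block.
    split_shape = []
    for s in trimmed.shape:
        split_shape.extend([s // k, k])
    blocks = trimmed.reshape(split_shape)
    # Reduce over every within-block offset axis (the odd-numbered axes).
    return blocks.max(axis=tuple(range(1, 2 * x.ndim, 2)))


x = np.arange(16).reshape(4, 4)
print(max_pool_nd(x).tolist())  # [[5, 7], [13, 15]]
```

Because the same code handles any number of modes, it keeps the tensor order N intact while halving each mode length when `k = 2`.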

[0022] 4. After the input data passes through all the sep...
