Graph-Based Bilingual Recurrent Autoencoder

A bilingual autoencoder technique, applied in instrumentation, semantic analysis, natural language translation, and related fields, that addresses problems such as the failure to take semantic constraints into account.

Examples

Specific Embodiments

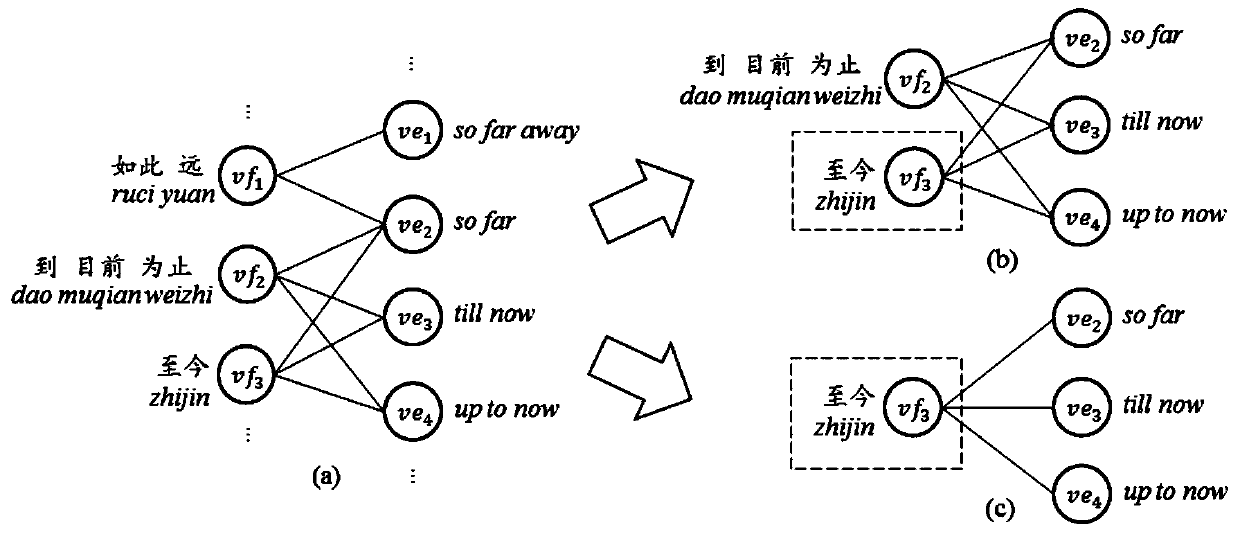

[0035] The first step is to extract bilingual phrases from a parallel corpus as training data and to calculate the translation probabilities between the extracted phrases.
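
The text does not say how the translation probabilities are estimated. As a minimal sketch, assuming plain relative-frequency estimation over the extracted phrase pairs (a common choice in phrase-based translation, not confirmed by the source), the computation might look like the following. The function name `translation_probabilities` and the input format are hypothetical.

```python
from collections import Counter


def translation_probabilities(phrase_pairs):
    """Estimate translation probabilities by relative frequency.

    phrase_pairs: list of (source_phrase, target_phrase) tuples, one per
    extracted occurrence (assumed input format, not from the source text).
    Returns two dicts keyed by (source, target):
      p_t_given_s[(s, t)] = count(s, t) / count(s)
      p_s_given_t[(s, t)] = count(s, t) / count(t)
    """
    pair_counts = Counter(phrase_pairs)
    src_counts = Counter(s for s, _ in phrase_pairs)
    tgt_counts = Counter(t for _, t in phrase_pairs)

    p_t_given_s = {(s, t): c / src_counts[s] for (s, t), c in pair_counts.items()}
    p_s_given_t = {(s, t): c / tgt_counts[t] for (s, t), c in pair_counts.items()}
    return p_t_given_s, p_s_given_t
```

Both directions are returned because a pivot-based paraphrase computation needs the forward and the backward probability.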

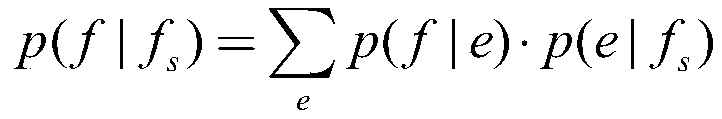

[0036] The second step is to calculate paraphrase probabilities using the pivot language method.
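
The exact formula used in this step is not shown here. In the usual pivot formulation, two phrases in one language are treated as paraphrases when they translate to the same phrase in the other language, and the probabilities are combined by marginalizing over that shared pivot phrase: p(s2 | s1) = Σ_t p(t | s1) · p(s2 | t). The sketch below assumes that standard form. The function name and the dictionary layout are hypothetical.

```python
from collections import defaultdict


def pivot_paraphrase_probabilities(p_t_given_s, p_s_given_t):
    """Compute p(s2 | s1) = sum over pivots t of p(t | s1) * p(s2 | t).

    Both inputs are dicts keyed by (source, target) pairs (assumed layout):
      p_t_given_s[(s, t)] holds p(t | s)
      p_s_given_t[(s, t)] holds p(s | t)
    Returns a dict keyed by (s1, s2) with s1 != s2.
    """
    # Index the backward probabilities by pivot phrase for fast lookup.
    sources_of = defaultdict(list)
    for (s, t), p in p_s_given_t.items():
        sources_of[t].append((s, p))

    paraphrase = defaultdict(float)
    for (s1, t), p_forward in p_t_given_s.items():
        for s2, p_backward in sources_of[t]:
            if s2 != s1:  # a phrase is not counted as its own paraphrase
                paraphrase[(s1, s2)] += p_forward * p_backward
    return dict(paraphrase)
```

The same function yields target-side paraphrase probabilities if the roles of the two languages are swapped in the inputs.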

[0037] The third step is to construct a semantic relationship graph of bilingual phrases. The source phrases and target phrases are taken as nodes. For any source phrase and target phrase, if the two form a phrase pair in the bilingual phrase corpus, an edge is constructed between them. The set of all nodes and the set of all edges together constitute the semantic relationship graph of the bilingual phrases.
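
The construction above amounts to a bipartite graph with source phrases on one side and target phrases on the other. A minimal sketch, assuming the phrase pairs are available as a list of tuples, is shown below. The function name `build_phrase_graph` and the returned dictionary layout are illustrative choices, not part of the described method.

```python
from collections import defaultdict


def build_phrase_graph(phrase_pairs):
    """Build a bipartite semantic relationship graph from phrase pairs.

    phrase_pairs: iterable of (source_phrase, target_phrase) tuples
    (assumed input format). Duplicate pairs produce a single edge.
    """
    graph = {
        "source_nodes": set(),
        "target_nodes": set(),
        "edges": set(),
        # Adjacency in both directions, useful for neighbor-set comparisons.
        "source_neighbors": defaultdict(set),
        "target_neighbors": defaultdict(set),
    }
    for src, tgt in phrase_pairs:
        graph["source_nodes"].add(src)
        graph["target_nodes"].add(tgt)
        graph["edges"].add((src, tgt))
        graph["source_neighbors"][src].add(tgt)
        graph["target_neighbors"][tgt].add(src)
    return graph
```

Keeping the two node sets separate avoids collisions when the same string occurs as a phrase in both languages.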

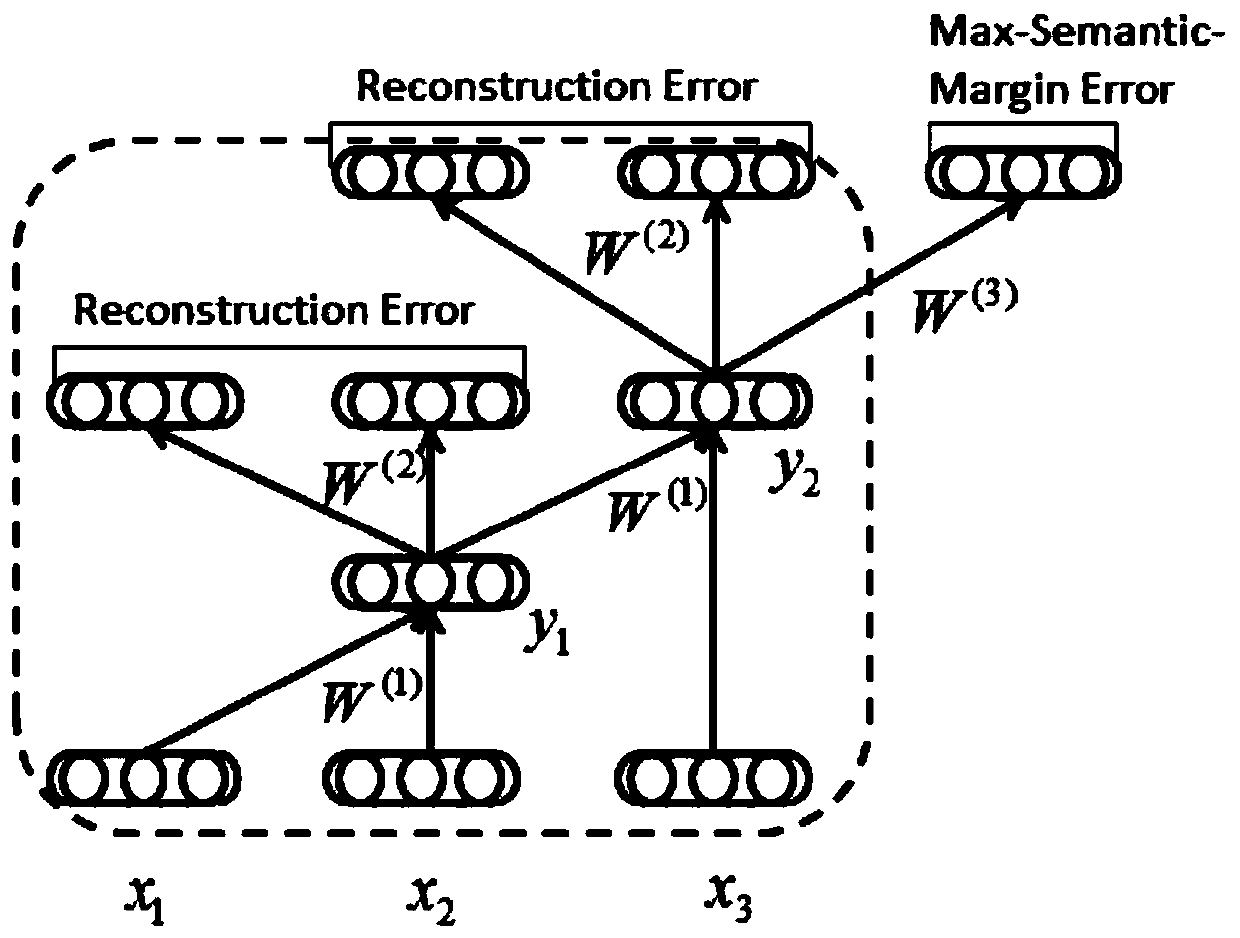

[0038] The fourth step is to define two implicit semantic constraints based on the semantic relationship graph of bilingual phrases. Constraint one: if two different nodes in the same language are connected to the same set of nodes in the other language, they are considered to be close to each other in the semantic space. ...
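
Constraint one can be illustrated by grouping same-language nodes by their neighbor sets. The sketch below reads "the same set of nodes" as exactly identical neighbor sets, which is an interpretation rather than something the text confirms. It covers constraint one only, and the function name and input layout are hypothetical.

```python
from collections import defaultdict
from itertools import combinations


def constraint_one_pairs(neighbors):
    """Find same-language node pairs that share an identical neighbor set.

    neighbors: dict mapping each phrase in one language to the set of
    phrases it is linked to in the other language (assumed layout).
    Returns a sorted list of (phrase_a, phrase_b) tuples with a < b.
    """
    groups = defaultdict(list)
    for node, linked in neighbors.items():
        if linked:  # isolated nodes carry no evidence of similarity
            groups[frozenset(linked)].append(node)

    pairs = []
    for nodes in groups.values():
        pairs.extend(combinations(sorted(nodes), 2))
    return sorted(pairs)
```

For example, if "big" and "large" are both linked only to the same target phrase, the function returns the pair `("big", "large")`, and a training objective could then pull their embeddings together.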
