An Open View Action Recognition Method Based on Linear Discriminant Analysis

A technology based on linear discriminant analysis and action recognition, applied in the field of action recognition. It addresses problems such as the inability to guarantee samples of every action category, and has the effect of increasing learning speed and simplifying the process.


Examples

Embodiment 1

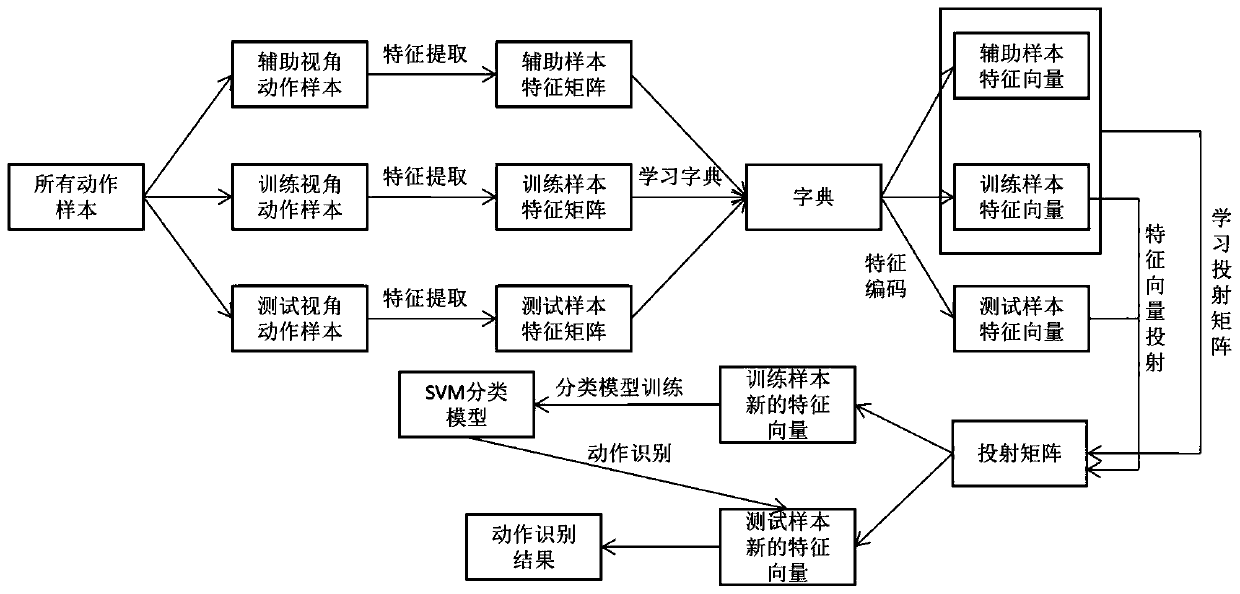

[0029] An open-view action recognition method based on linear discriminant analysis. Referring to Figure 1, the method includes the following steps:

[0030] 101: Use the K-means algorithm to perform dictionary learning on the combined feature matrix, and convert the feature matrix of each action sample into a feature vector for that sample;
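The text does not specify the descriptor type, dictionary size, or encoding rule for step 101. The following is a minimal sketch, assuming a standard bag-of-words pipeline. Each action sample is a matrix of local descriptors (one row per descriptor). The rows of all samples are stacked into the combined feature matrix, K-means learns a codebook of `k` visual words, and each sample is encoded as a normalised histogram of word assignments. The function names `kmeans` and `encode`, the value `k=4`, and the random data are hypothetical.

```python
import numpy as np

def kmeans(X, k, n_iter=50, seed=0):
    """Lloyd's K-means on the rows of X; returns a (k, d) codebook."""
    rng = np.random.default_rng(seed)
    # Initialise the centres with k distinct rows of X.
    centers = X[rng.choice(len(X), size=k, replace=False)].astype(float)
    for _ in range(n_iter):
        # Assign every row to its nearest centre (squared Euclidean distance).
        d2 = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = d2.argmin(axis=1)
        new_centers = centers.copy()
        for j in range(k):
            members = X[labels == j]
            if len(members):  # an empty cluster keeps its previous centre
                new_centers[j] = members.mean(axis=0)
        converged = np.allclose(new_centers, centers)
        centers = new_centers
        if converged:
            break
    return centers

def encode(sample_features, codebook):
    """Turn one sample's (n_i, d) feature matrix into an L1-normalised histogram of visual words."""
    d2 = ((sample_features[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=2)
    words = d2.argmin(axis=1)
    hist = np.bincount(words, minlength=len(codebook)).astype(float)
    return hist / hist.sum()

# Usage with made-up data: 5 samples, each with 20-39 local descriptors of dimension 8.
rng = np.random.default_rng(1)
samples = [rng.normal(size=(int(rng.integers(20, 40)), 8)) for _ in range(5)]
combined = np.vstack(samples)           # the combined feature matrix
codebook = kmeans(combined, k=4)
vectors = np.array([encode(s, codebook) for s in samples])
print(vectors.shape)  # (5, 4)
```

Because every sample is mapped onto the same codebook, samples with different numbers of descriptors all end up as fixed-length vectors. The later steps require this.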

[0031] 102: Use linear discriminant analysis (LDA) to learn a projection matrix from the feature vectors of the action samples in the training view and the auxiliary view;
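The text does not say how the LDA class labels are defined or how many projection dimensions are kept. The following is a minimal sketch, assuming standard multi-class (Fisher) LDA. The feature vectors from the training view and the auxiliary view are pooled and labelled by action category. The projection solves the generalised eigenproblem $S_b \mathbf{w} = \lambda S_w \mathbf{w}$ and keeps the top `C - 1` directions. The name `lda_projection`, the ridge term `reg`, and the synthetic two-view data are assumptions for illustration.

```python
import numpy as np

def lda_projection(X, y, n_components=None, reg=1e-3):
    """Fisher LDA: returns a (d, m) projection matrix W for rows of X with labels y."""
    classes = np.unique(y)
    d = X.shape[1]
    mu = X.mean(axis=0)
    Sw = np.zeros((d, d))  # within-class scatter
    Sb = np.zeros((d, d))  # between-class scatter
    for c in classes:
        Xc = X[y == c]
        mu_c = Xc.mean(axis=0)
        Sw += (Xc - mu_c).T @ (Xc - mu_c)
        diff = (mu_c - mu)[:, None]
        Sb += len(Xc) * (diff @ diff.T)
    Sw += reg * np.eye(d)  # ridge term keeps Sw positive definite (assumption)
    # Solve Sb w = lambda Sw w by whitening with the Cholesky factor Sw = L L^T.
    L_inv = np.linalg.inv(np.linalg.cholesky(Sw))
    M = L_inv @ Sb @ L_inv.T           # symmetric, so eigh applies
    evals, V = np.linalg.eigh(M)       # eigenvalues in ascending order
    m = n_components or (len(classes) - 1)
    top = np.argsort(evals)[::-1][:m]
    return L_inv.T @ V[:, top]         # map eigenvectors back: w = L^{-T} v

# Usage with synthetic bag-of-words vectors: 3 actions, 10 samples per action per view.
rng = np.random.default_rng(0)
n_actions, d = 3, 6
means = rng.normal(scale=3.0, size=(n_actions, d))
X_train_view = np.vstack([means[a] + rng.normal(size=(10, d)) for a in range(n_actions)])
X_aux_view = np.vstack([means[a] + 0.5 + rng.normal(size=(10, d)) for a in range(n_actions)])
labels = np.repeat(np.arange(n_actions), 10)
X = np.vstack([X_train_view, X_aux_view])
y = np.concatenate([labels, labels])
W = lda_projection(X, y)
print(W.shape)  # (6, 2)
```

Pooling both views under shared action labels pushes the learned directions to separate actions rather than views. This fits the method's aim of placing samples from different views in one space.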

[0032] 103: Using the projection matrix, project all t action-sample representation vectors from the training view and the test view into the same space, obtaining a new feature vector for each action sample;
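Step 103 can be written out as follows. The notation is assumed, not taken from the source: the projection matrix from step 102 is $\mathbf{W}\in\mathbb{R}^{d\times m}$, and the $i$-th representation vector is $\mathbf{x}_i\in\mathbb{R}^{d}$. The same matrix is applied to every sample, whichever view it comes from:

```latex
\mathbf{z}_i = \mathbf{W}^{\top}\mathbf{x}_i \in \mathbb{R}^{m}, \qquad i = 1, \dots, t,
\qquad \text{equivalently} \qquad
\mathbf{Z} = \mathbf{X}\mathbf{W}, \quad \mathbf{X}\in\mathbb{R}^{t\times d}, \ \mathbf{Z}\in\mathbb{R}^{t\times m}.
```

Here the rows of $\mathbf{X}$ are the $t$ vectors $\mathbf{x}_i^{\top}$, and the rows of $\mathbf{Z}$ are the new feature vectors $\mathbf{z}_i^{\top}$. Training-view and test-view samples share one $\mathbf{W}$, so they are directly comparable in the projected space.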

[0033] 104: Use a linear support vector machine (SVM) to learn an action classification model from the new feature vectors of the action samples, and finally use the action classification model to perform the action classifi...
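The text does not name an SVM implementation or say how multi-class decisions are made. The following is a minimal sketch, assuming a one-vs-rest scheme. It substitutes a Pegasos-style stochastic subgradient solver for the L2-regularised hinge-loss objective. In practice a library solver such as LIBLINEAR would more likely be used. The names `train_linear_svm`, `predict`, `lam` and `epochs`, and the toy data, are hypothetical.

```python
import numpy as np

def train_linear_svm(Z, y, lam=1e-2, epochs=100, seed=0):
    """One-vs-rest linear SVM trained with Pegasos-style stochastic subgradient descent."""
    rng = np.random.default_rng(seed)
    classes = np.unique(y)
    n, m = Z.shape
    # Append a constant 1 so the bias is learned as an extra (also regularised) weight.
    Za = np.hstack([Z, np.ones((n, 1))])
    W = np.zeros((len(classes), m + 1))
    for k, c in enumerate(classes):
        t_sign = np.where(y == c, 1.0, -1.0)  # +1 for class c, -1 for the rest
        w = np.zeros(m + 1)
        step = 0
        for _ in range(epochs):
            for i in rng.permutation(n):
                step += 1
                eta = 1.0 / (lam * step)       # Pegasos step size
                margin = t_sign[i] * (Za[i] @ w)
                w *= (1.0 - eta * lam)         # shrink from the L2 regulariser
                if margin < 1.0:               # hinge loss active: move towards the sample
                    w += eta * t_sign[i] * Za[i]
        W[k] = w
    return classes, W

def predict(classes, W, Z):
    """Assign each row of Z to the class with the largest one-vs-rest score."""
    Za = np.hstack([Z, np.ones((len(Z), 1))])
    return classes[np.argmax(Za @ W.T, axis=1)]

# Usage with toy projected features: three well-separated action classes in 2-D.
rng = np.random.default_rng(0)
centres = np.array([[0.0, 0.0], [6.0, 0.0], [0.0, 6.0]])
Z_train = np.vstack([c + rng.normal(size=(20, 2)) for c in centres])
y_train = np.repeat(np.array([0, 1, 2]), 20)
classes, W = train_linear_svm(Z_train, y_train)
acc = (predict(classes, W, Z_train) == y_train).mean()
print(f"training accuracy: {acc:.2f}")
```

In the method, `Z_train` would be the projected training-view vectors from step 103. `predict` would then be applied to the projected test-view vectors.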

Embodiment 2

[0045] The scheme of Embodiment 1 is described in further detail below, with specific calculation formulas and examples:

[0046] 201: Collect and record motion information of the human body, and establish a multi-view motion database;

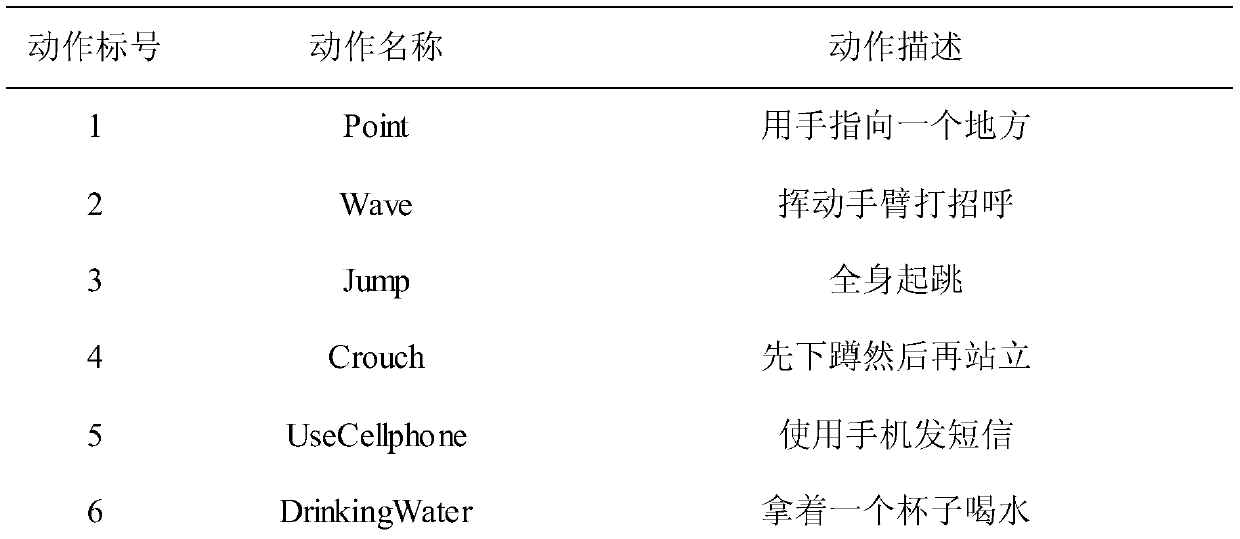

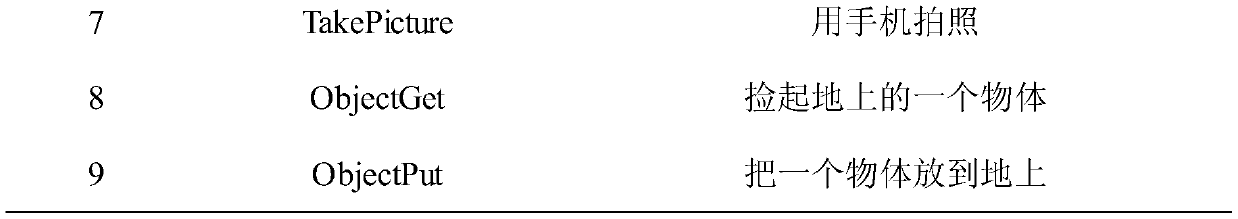

[0047] To ensure that there is no direct correlation between action samples captured under different views, each action sample is recorded separately under each view. Table 1 lists the actions in the established database.

[0048] Table 1 action list

