Method and device for realizing interactive image segmentation, and terminal

An interactive image segmentation technology, applied in the field of image processing, that addresses problems such as unsatisfactory segmentation results, long algorithm running times, and a degraded user experience.


Embodiment 3

[0268] An embodiment of the present invention also provides a terminal, where the terminal includes the above-mentioned device for realizing interactive image segmentation.

Application Example 1

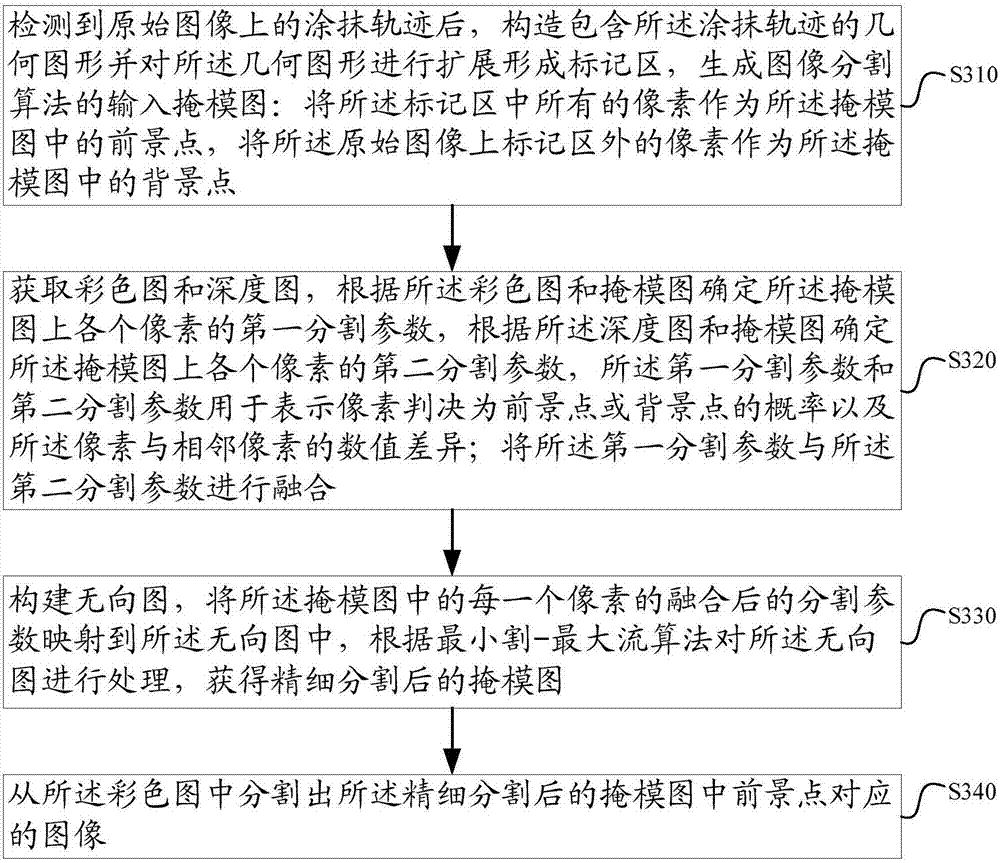

[0270] The user smears over the target object of interest on the original image, and the image segmentation method described herein is used to extract the target object. The process may include the following steps:

[0271] Step S501, detecting that the user chooses to mark the target object by smearing;

[0272] For example, two buttons for marking are provided on the interface, one is "smudge" and the other is "outline". If the user clicks the "smudge" button, the smear track will be preprocessed.

[0273] Here, smearing and outlining are two different ways of marking the target object;

[0274] Generally, smearing marks the interior region of the target object, and outlining marks along the outer contour of the target object;
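The choice between the two marking modes in step S501 can be illustrated with a minimal sketch. The names `MarkMode`, `mode_from_button`, and `needs_smear_preprocessing` are hypothetical and do not appear in the source; the button labels are taken from paragraph [0272], and nothing here describes the preprocessing itself.

```python
from enum import Enum


class MarkMode(Enum):
    """Hypothetical enumeration of the two marking modes in [0273]-[0274]."""
    SMEAR = "smudge"      # marks the interior region of the target object
    OUTLINE = "outline"   # marks along the outer contour of the target object


def mode_from_button(label: str) -> MarkMode:
    """Map a clicked button label ("smudge" or "outline") to a marking mode."""
    return MarkMode(label.strip().lower())


def needs_smear_preprocessing(mode: MarkMode) -> bool:
    """Per [0272], the smear track is preprocessed when "smudge" is chosen."""
    return mode is MarkMode.SMEAR
```

For example, `mode_from_button("smudge")` yields `MarkMode.SMEAR`, and an unrecognized label raises `ValueError`, which a caller might treat as "no mode selected".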

[0275] Step S502, detecting that the user smears on the original image;

[0276] For example, as shown in Figure 5-a, the user has smeared on the original image, and the target object is a "stapler"; the original image is a color image;
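One way to represent a detected smear is as a binary mask of the pixels the brush touched. The sketch below is an illustration only: the excerpt does not specify how the smear track is stored or preprocessed, and the function name `rasterize_track`, the circular brush, and the `radius` parameter are all assumptions.

```python
def rasterize_track(points, width, height, radius=1):
    """Return a height x width grid with 1 wherever the brush touched.

    points: iterable of (x, y) integer pixel coordinates sampled along
    the smear track (assumed input format, not taken from the source).
    """
    mask = [[0] * width for _ in range(height)]
    for px, py in points:
        # Visit only the bounding box of the brush, clipped to the image.
        for y in range(max(0, py - radius), min(height, py + radius + 1)):
            for x in range(max(0, px - radius), min(width, px + radius + 1)):
                # Keep pixels inside the circular brush footprint.
                if (x - px) ** 2 + (y - py) ** 2 <= radius ** 2:
                    mask[y][x] = 1
    return mask
```

Because smearing marks the interior of the target object ([0274]), such a mask could plausibly serve as a set of known foreground pixels for a later segmentation step, though the source does not confirm that use at this point.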

...
