Deep joint structured and structured learning method for human action recognition

A joint structured and structured learning technique, applied in fields such as character and pattern recognition, instruments, and computer parts, that addresses problems such as the inability to recognize interactive behaviors and the inability to handle images containing multiple behavior categories.

Embodiment Construction

[0047] The present invention will be further described below.

[0048] A deep joint structured and structured learning method for human action recognition, comprising the following steps:

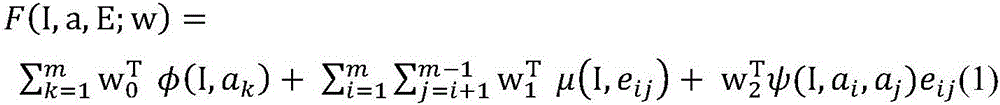

[0049] 1) Construct joint structure and structure formulation

[0050] Suppose there is a set of $n$ training samples. Here $I$ represents an image, and $a$ is the collection of behavior labels of all people in the image. If the image contains $m$ individuals, then $a = [a_1, \ldots, a_m]$. The matrix $E = (e_{ij}) \in \{0, 1\}^{m \times m}$ is a strictly upper triangular matrix representing the interrelationship structure of all individuals in the image. Specifically, $e_{ij} = 0$ means there is no interaction between person $i$ and person $j$, while $e_{ij} = 1$ indicates that person $i$ and person $j$ interact with each other. In fact, $a$ and $E$ can be considered direct descriptions of human activities. With this representation, the recognition system is able to answer not only the question 1) what they are doing, but also the ...
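The label vector $a$ and the interaction matrix $E$ described in [0050] can be illustrated with a minimal sketch. This is only an illustration of the data representation, not the patented learning method. The function names (`build_interaction_matrix`, `is_strictly_upper_triangular`, `interacting_pairs`) and the example behavior labels are assumptions introduced here, and persons are indexed from 0 rather than from 1 as in the text.

```python
from typing import List, Sequence, Tuple


def build_interaction_matrix(m: int, pairs: Sequence[Tuple[int, int]]) -> List[List[int]]:
    """Return an m x m strictly upper triangular 0/1 matrix E.

    E[i][j] = 1 (with i < j) means person i and person j interact;
    E[i][j] = 0 means they do not. Helper name is hypothetical.
    """
    E = [[0] * m for _ in range(m)]
    for i, j in pairs:
        if i == j:
            raise ValueError("a person cannot interact with themselves")
        if not (0 <= i < m and 0 <= j < m):
            raise ValueError("person index out of range")
        # Interaction is symmetric, so store it once above the diagonal.
        lo, hi = min(i, j), max(i, j)
        E[lo][hi] = 1
    return E


def is_strictly_upper_triangular(E: List[List[int]]) -> bool:
    """Check that every entry on or below the diagonal is zero."""
    return all(E[i][j] == 0 for i in range(len(E)) for j in range(i + 1))


def interacting_pairs(E: List[List[int]]) -> List[Tuple[int, int]]:
    """List the (i, j) pairs with i < j for which E[i][j] == 1."""
    m = len(E)
    return [(i, j) for i in range(m) for j in range(i + 1, m) if E[i][j] == 1]


# Hypothetical image with m = 3 people; the label strings are made up.
a = ["handshake", "handshake", "stand"]
E = build_interaction_matrix(len(a), [(1, 0)])  # persons 0 and 1 interact
```

Because each interaction is stored only once, above the diagonal, the pair (1, 0) is recorded as `E[0][1] = 1`, and the pair list read back from `E` always has the smaller index first.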
