Deep neural network optimization method based on co-evolution and backpropagation

A deep neural network and backpropagation technology, applied to neural learning methods and biological neural network models. It addresses the tendency of training to fall into local optimal solutions, and is stated to improve classification accuracy, speed up optimization, and give good optimization performance.

Embodiment Construction

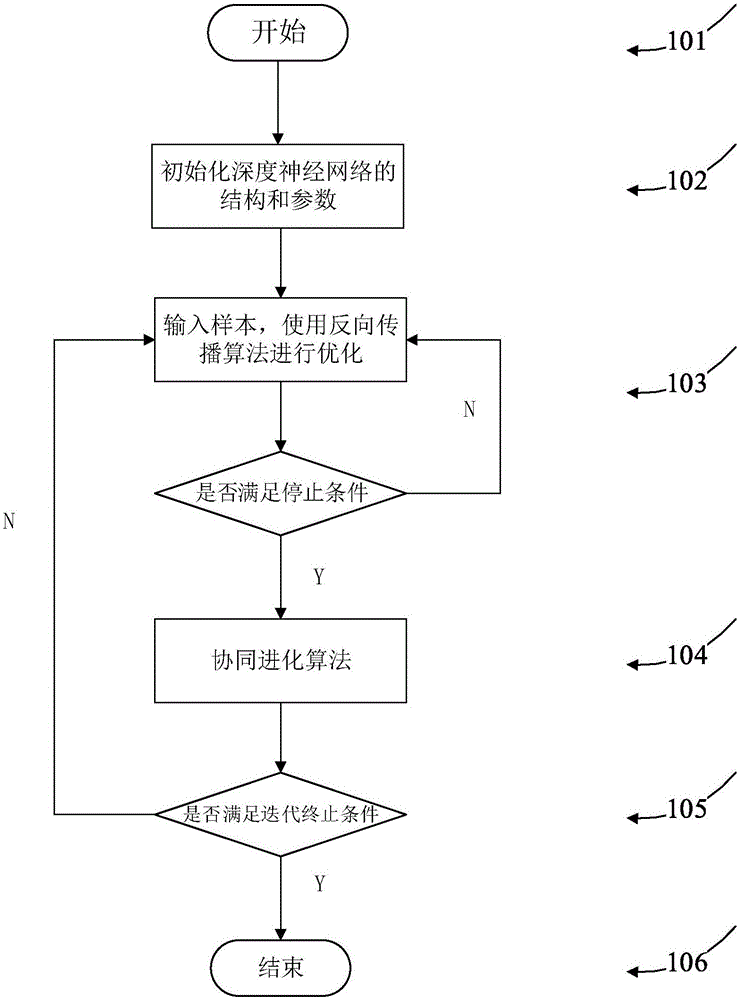

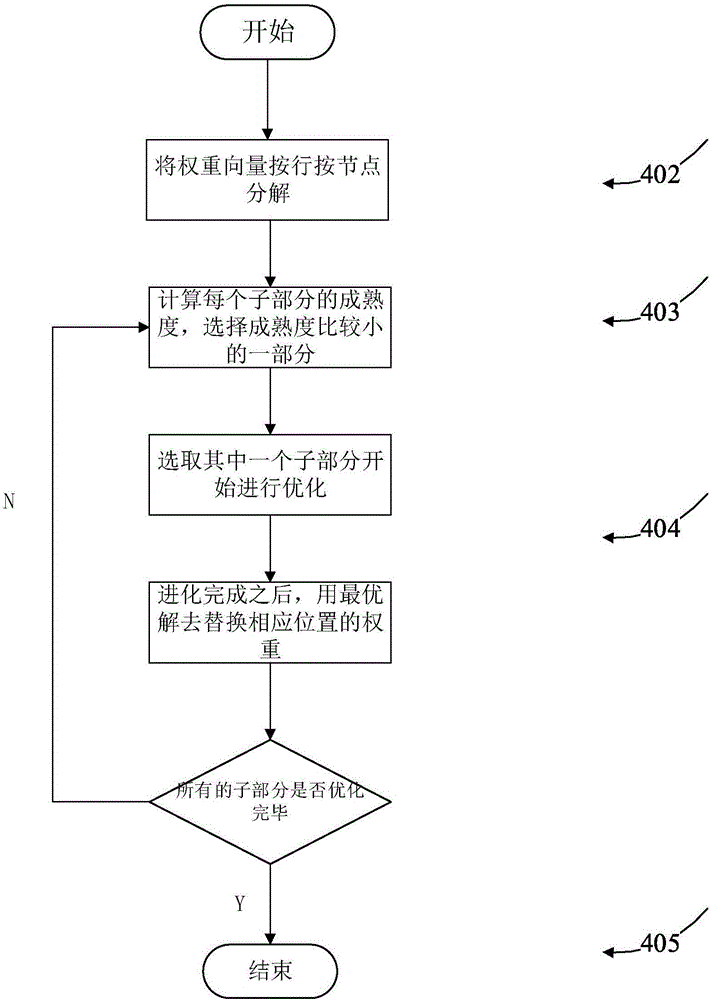

[0054] The method for optimizing a deep neural network based on co-evolution and backpropagation provided by the present invention will be described in detail below in conjunction with the accompanying drawings and embodiments.

[0055] The present invention proposes a deep neural network optimization method based on co-evolution and backpropagation, comprising the following steps:

[0056] Step 101: start the deep neural network optimization method based on co-evolution and backpropagation;

[0057] Step 102: Set the deep neural network structure, where L_i denotes the i-th layer of the network and N_i denotes the number of nodes in the i-th layer; initialize the weights W and biases b, set the learning rate η, and set the user-defined parameter H;

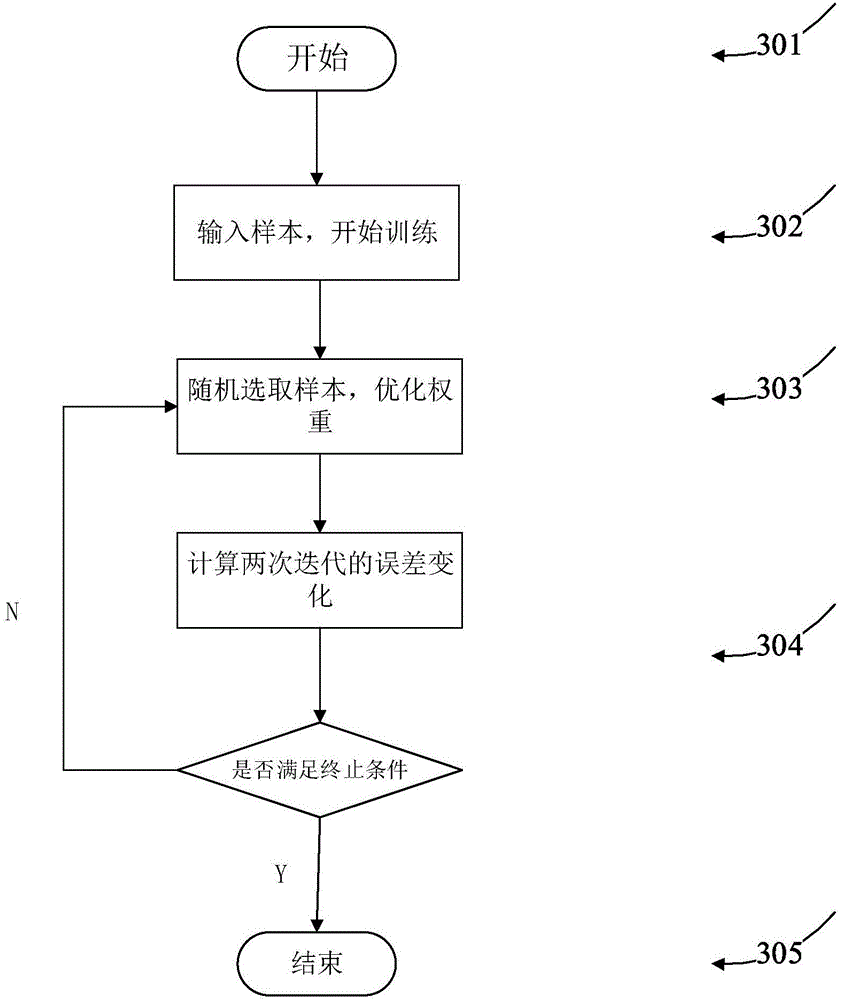
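As a rough illustration of the setup in Step 102, the following Python sketch builds weights W and biases b for a fully connected network from a list of layer sizes N_i. The function name `init_network`, the uniform initialization range, and the concrete values of `layer_sizes`, `eta`, and `H` are assumptions made for this example. The excerpt specifies none of them, and it does not define the role of H.

```python
import random

def init_network(layer_sizes, seed=0):
    """Return (weights, biases) for a fully connected network.

    layer_sizes[i] plays the role of N_i, the node count of layer L_i.
    weights[k] is a list of N_{k+1} rows, each with N_k entries;
    biases[k] has N_{k+1} entries.
    """
    rng = random.Random(seed)
    weights, biases = [], []
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        # Small uniform random weights: an assumed scheme, not from the source.
        weights.append([[rng.uniform(-0.1, 0.1) for _ in range(n_in)]
                        for _ in range(n_out)])
        biases.append([0.0] * n_out)
    return weights, biases

layer_sizes = [4, 8, 3]  # hypothetical N_1, N_2, N_3
eta = 0.1                # learning rate η (value assumed)
H = 5                    # user-defined parameter H (value assumed, role not given here)
W, b = init_network(layer_sizes)
```

Fixing the seed keeps the initialization reproducible, which may make it easier to compare runs of different optimization strategies.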

[0058] Step 103: Input training samples to the deep neural network in step 102, and then use the backpropagation algorithm to train the deep neural network until the iterative error change value σ for two consecutive iterations of the deep...
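The stopping condition of Step 103 is cut off in this excerpt, so the sketch below only illustrates the general idea of training by gradient descent until the error changes little between two consecutive iterations. It assumes a hypothetical tolerance `sigma_tol` on the absolute change in mean squared error, and it uses a single sigmoid unit as a stand-in for the deep network to stay short. Neither choice comes from the source.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_until_stable(samples, eta=0.5, sigma_tol=1e-6, max_iters=10000):
    """Train one sigmoid unit on (x, y) pairs by batch gradient descent.

    Stops once the change in mean squared error between two consecutive
    iterations drops below sigma_tol (hypothetical threshold), or after
    max_iters iterations.
    """
    n_features = len(samples[0][0])
    m = len(samples)
    w = [0.0] * n_features
    b = 0.0
    prev_error = None
    for iteration in range(1, max_iters + 1):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        error = 0.0
        for x, y in samples:
            out = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            diff = out - y
            error += 0.5 * diff * diff
            delta = diff * out * (1.0 - out)  # dE/dz by the chain rule
            for j, xj in enumerate(x):
                grad_w[j] += delta * xj
            grad_b += delta
        error /= m
        # Gradient step with learning rate eta.
        w = [wi - eta * g / m for wi, g in zip(w, grad_w)]
        b -= eta * grad_b / m
        if prev_error is not None and abs(prev_error - error) < sigma_tol:
            break
        prev_error = error
    return w, b, error, iteration

# Toy data: logical OR, used only to exercise the loop.
samples = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0),
           ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]
w, b, err, iters = train_until_stable(samples)
```

A stopping rule of this kind detects that plain gradient descent has stalled. It cannot tell whether the stall is at a good solution or a poor local optimum.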
