A method, system and robot for generating robot interaction content

A technology in the field of interactive content generation systems, applied to robot interaction and robot interaction content generation. It addresses the problems that robots have poor intelligence and cannot behave more anthropomorphically, thereby improving robot intelligence, the human-computer interaction experience, and anthropomorphism.

Examples

Embodiment 1

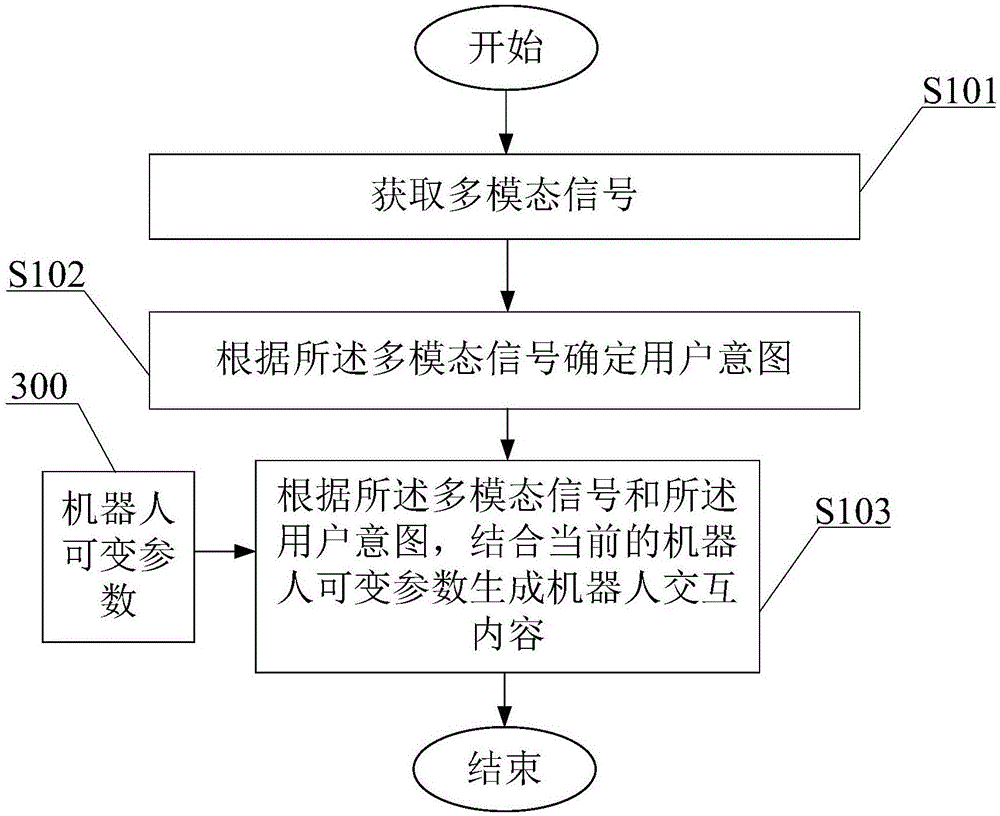

[0043] As shown in Figure 1, this embodiment discloses a method for generating robot interaction content, comprising:

[0044] S101: Obtain a multi-modal signal;

[0045] S102: Determine the user's intention according to the multi-modal signal;

[0046] S103: According to the multi-modal signal and the user's intention, generate robot interaction content in combination with the current robot variable parameters.
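Steps S101 to S103 can be sketched as a three-stage pipeline. The sketch below is a minimal illustration only, assuming a multi-modal signal made of a voice transcript plus a list of labels detected in an image, a keyword-based intention rule, and a dictionary of variable parameters. The names `MultiModalSignal`, `obtain_signal`, `determine_intention` and `generate_content`, the intention labels, and the parameter keys are all hypothetical and do not come from the patent.

```python
from dataclasses import dataclass, field


@dataclass
class MultiModalSignal:
    """Hypothetical S101 output: a voice transcript plus image labels."""
    voice_text: str
    image_labels: list[str] = field(default_factory=list)


def obtain_signal(voice_text: str, image_labels: list[str]) -> MultiModalSignal:
    # S101: bundle the per-modality inputs into one normalized signal.
    return MultiModalSignal(voice_text=voice_text.strip().lower(),
                            image_labels=[label.lower() for label in image_labels])


def determine_intention(signal: MultiModalSignal) -> str:
    # S102: a toy rule set (assumption) that looks at both modalities.
    if "wave" in signal.image_labels or "hello" in signal.voice_text:
        return "greet"
    if "?" in signal.voice_text:
        return "ask"
    return "chat"


def generate_content(signal: MultiModalSignal, intention: str,
                     variable_params: dict[str, str]) -> dict[str, str]:
    # S103: combine the signal, the intention and the current variable parameters.
    name = variable_params.get("user_name", "friend")
    mood = variable_params.get("mood", "neutral")
    replies = {
        "greet": f"Hello, {name}!",
        "ask": "Let me think about that.",
        "chat": f"You said: {signal.voice_text}",
    }
    expression = "smile" if mood == "happy" else "neutral_face"
    return {"speech": replies[intention], "expression": expression}


signal = obtain_signal("Hello there", ["person", "wave"])
intent = determine_intention(signal)
content = generate_content(signal, intent, {"user_name": "Li", "mood": "happy"})
print(intent, content)
```

Here the waving gesture alone is enough to yield the `greet` intention, and the user-set `mood` changes the robot's expression without changing its speech, which is the kind of interplay between signal, intention and variable parameters that step S103 describes.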

[0047] In terms of application scenarios, existing robots generally rely on question-and-answer interactive content generation in a fixed scene and cannot accurately generate the robot's expressions based on the current scene. The method for generating robot interaction content of the present invention includes: acquiring a multi-modal signal; determining the user's intention according to the multi-modal signal; and, according to the multi-modal signal and the user's intention, combining current robot variable parameters Generat...

Embodiment 2

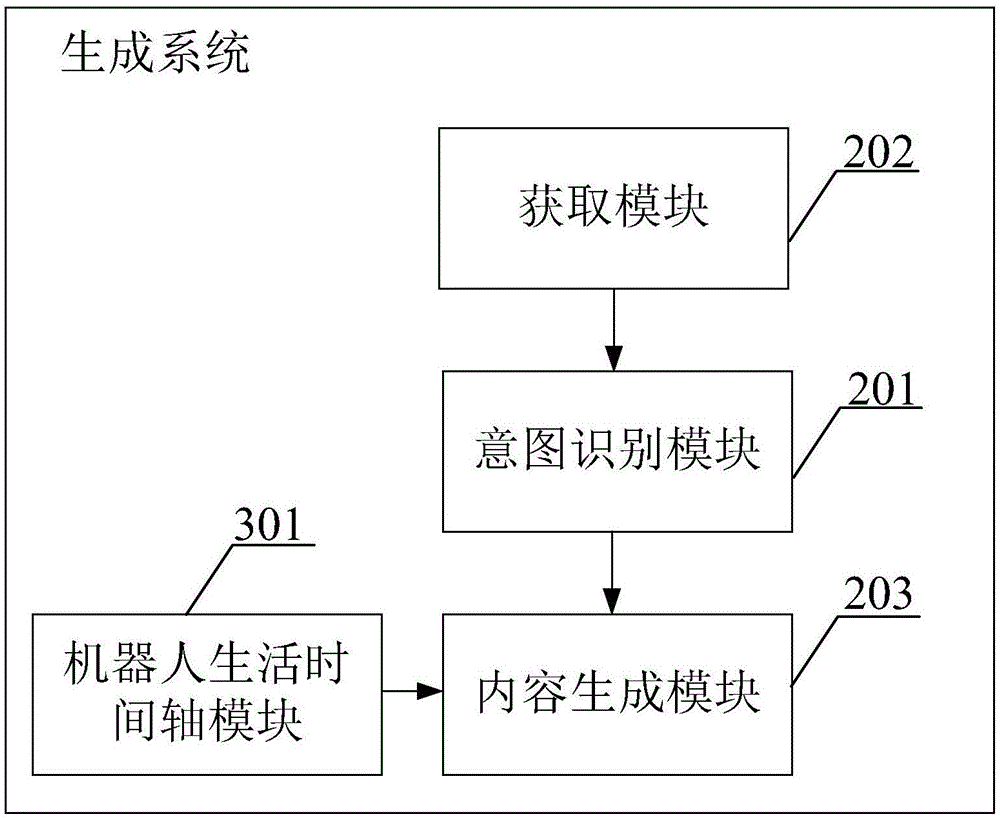

[0066] As shown in Figure 2, this embodiment discloses a system for generating robot interaction content according to the present invention, comprising:

[0067] The obtaining module 201 is configured to obtain multi-modal signals;

[0068] The intention recognition module 202 is configured to determine the user's intention according to the multi-modal signal;

[0069] The content generation module 203 is configured to generate robot interaction content according to the multi-modal signal and the user's intention in combination with current robot variable parameters.
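The three modules of Embodiment 2 can be read as separate components wired in sequence. The sketch below assumes each module is described by a small interface (a `typing.Protocol`) and that the system passes each module's output to the next; the class names, method names, and the toy implementations are hypothetical, chosen only to mirror modules 201, 202 and 203.

```python
from dataclasses import dataclass
from typing import Protocol


# Hypothetical interfaces mirroring modules 201, 202 and 203.
class ObtainingModule(Protocol):
    def obtain(self) -> dict[str, str]: ...


class IntentionRecognitionModule(Protocol):
    def recognize(self, signal: dict[str, str]) -> str: ...


class ContentGenerationModule(Protocol):
    def generate(self, signal: dict[str, str], intention: str,
                 params: dict[str, str]) -> str: ...


@dataclass
class InteractionContentSystem:
    """Wires the three modules together; each one can be swapped independently."""
    obtaining: ObtainingModule
    recognition: IntentionRecognitionModule
    generation: ContentGenerationModule
    variable_params: dict[str, str]

    def run(self) -> str:
        signal = self.obtaining.obtain()
        intention = self.recognition.recognize(signal)
        return self.generation.generate(signal, intention, self.variable_params)


# Toy implementations used only to exercise the wiring.
class FixedInput:
    def __init__(self, voice: str, image: str) -> None:
        self.voice, self.image = voice, image

    def obtain(self) -> dict[str, str]:
        return {"voice": self.voice, "image": self.image}


class KeywordRecognizer:
    def recognize(self, signal: dict[str, str]) -> str:
        return "farewell" if "bye" in signal["voice"].lower() else "chat"


class TemplateGenerator:
    def generate(self, signal: dict[str, str], intention: str,
                 params: dict[str, str]) -> str:
        style = params.get("style", "plain")
        text = "Goodbye!" if intention == "farewell" else "Tell me more."
        return text.upper() if style == "loud" else text


system = InteractionContentSystem(FixedInput("Bye now", "person"),
                                  KeywordRecognizer(), TemplateGenerator(),
                                  {"style": "loud"})
print(system.run())
```

Typing the fields against protocols rather than concrete classes means, for example, that a vision-based recognizer could replace `KeywordRecognizer` without any change to `InteractionContentSystem`.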

[0070] In this way, robot interaction content can be generated more accurately from multi-modal signals, such as image signals and voice signals, in combination with the robot's variable parameters, so that the robot interacts and communicates with people more accurately and anthropomorphically. Variable parameters are parameters that the user actively controls during human-computer interac...
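The description defines variable parameters as parameters the user actively controls during human-computer interaction; the rest of that definition is truncated in this copy. One way to picture the idea, under assumptions, is a set of robot defaults that user-set values override before content is generated. The parameter names (`persona`, `speech_rate`, `volume`), the rule of ignoring unknown keys, and the `render` helper are hypothetical illustrations, not the patent's definition.

```python
# Hypothetical robot defaults; the patent does not list concrete parameters.
DEFAULT_PARAMS: dict[str, object] = {"persona": "polite", "speech_rate": 1.0, "volume": 5}


def current_variable_params(user_overrides: dict[str, object]) -> dict[str, object]:
    """Merge user-controlled values over a copy of the defaults, ignoring unknown keys."""
    merged = dict(DEFAULT_PARAMS)
    for key, value in user_overrides.items():
        if key in merged:
            merged[key] = value
    return merged


def render(text: str, params: dict[str, object]) -> str:
    # Only persona affects the wording here; speech_rate and volume would
    # drive a text-to-speech stage, which this sketch does not include.
    prefix = "Sure thing! " if params["persona"] == "playful" else "Certainly. "
    return prefix + text


params = current_variable_params({"persona": "playful", "colour": "red"})
print(params)
print(render("The weather is sunny.", params))
```

Copying the defaults on every call keeps one user's settings from leaking into the shared defaults, and dropping unknown keys such as `colour` keeps arbitrary user input from reaching the generation step.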
