A Fast Stitching Method for Aerial Images with Large Tilt and Low Overlap

A fast stitching technique for aerial images, applied in the field of image processing. It addresses three problems: large differences in local features within overlapping areas, few feature points available for matching, and long image stitching time. Its effects are to overcome these stitching difficulties, reduce the average deformation error, and reduce the average viewing angle difference.



Embodiment Construction

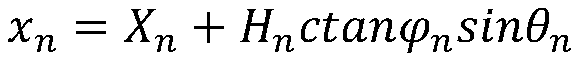

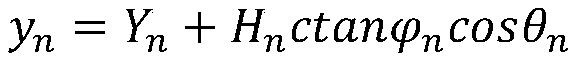

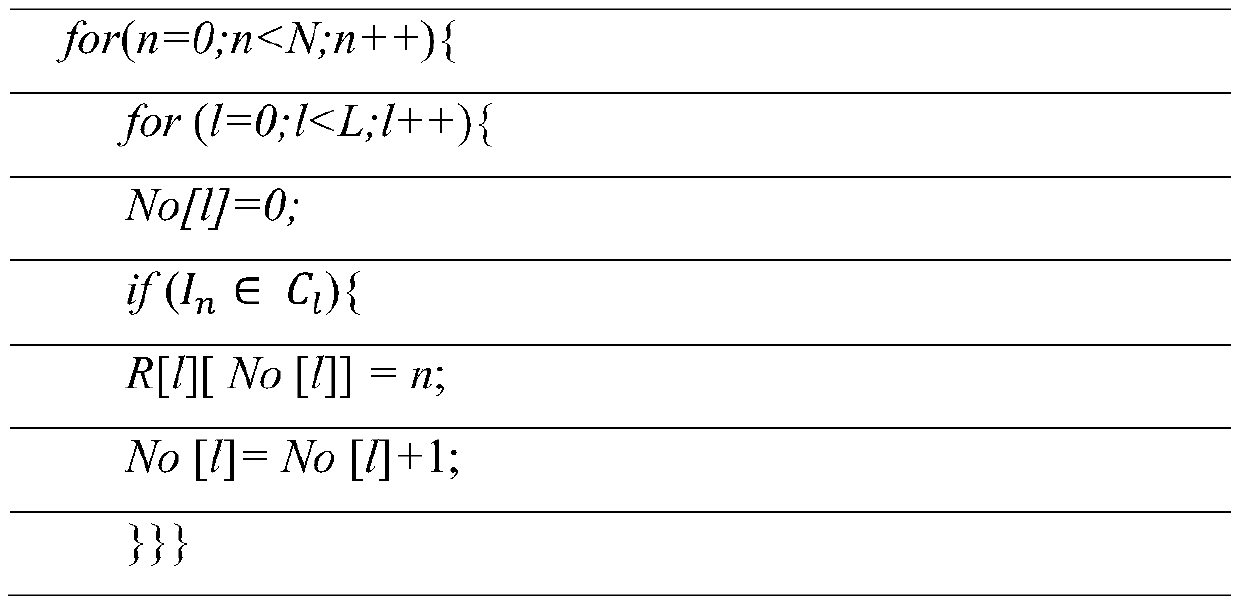

[0029] Refer to Figure 1. According to the present invention, the sequence of aerial images collected by the sensor's front-end camera is first read together with the imaging auxiliary data of each image. The position distribution of the images is calculated from the imaging auxiliary data, and an image position relationship matrix is established. From this matrix, the image corresponding to the center of the area covered by all images is determined and used as the reference image of the synthetic image coordinate system. A shortest path algorithm then searches the adjacency graph for the path between each image and the reference image, and the positional relationship between adjacent images on that path is calculated. The overlapping area between adjacent images is calculated from the positional relationship and the overlap rate, and the salient feature points and regions in the overlapping area of the two images are extracted respectively, ...
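The reference-image selection and path search described above can be sketched as follows. This is an illustrative sketch under stated assumptions, not the patented procedure. The `Footprint` type (an axis-aligned ground rectangle standing in for the imaging auxiliary data), the overlap-rate definition, the `min_overlap` threshold, and the use of center distance as the edge weight are all hypothetical choices; the source only states that a shortest path algorithm is used.

```python
import heapq
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Footprint:
    """Hypothetical ground footprint: center (cx, cy) and size (w, h)."""
    cx: float
    cy: float
    w: float
    h: float


def overlap_rate(a, b):
    """Intersection area divided by the smaller footprint's area (assumed definition)."""
    dx = min(a.cx + a.w / 2, b.cx + b.w / 2) - max(a.cx - a.w / 2, b.cx - b.w / 2)
    dy = min(a.cy + a.h / 2, b.cy + b.h / 2) - max(a.cy - a.h / 2, b.cy - b.h / 2)
    if dx <= 0 or dy <= 0:
        return 0.0
    return (dx * dy) / min(a.w * a.h, b.w * b.h)


def build_adjacency(fps, min_overlap=0.1):
    """Link images whose overlap rate reaches min_overlap; weight = center distance."""
    adj = {i: {} for i in range(len(fps))}
    for i in range(len(fps)):
        for j in range(i + 1, len(fps)):
            if overlap_rate(fps[i], fps[j]) >= min_overlap:
                d = math.hypot(fps[i].cx - fps[j].cx, fps[i].cy - fps[j].cy)
                adj[i][j] = d
                adj[j][i] = d
    return adj


def pick_reference(fps):
    """Index of the image whose center is closest to the center of the covered area."""
    xmin = min(f.cx - f.w / 2 for f in fps)
    xmax = max(f.cx + f.w / 2 for f in fps)
    ymin = min(f.cy - f.h / 2 for f in fps)
    ymax = max(f.cy + f.h / 2 for f in fps)
    cx, cy = (xmin + xmax) / 2, (ymin + ymax) / 2
    return min(range(len(fps)),
               key=lambda i: math.hypot(fps[i].cx - cx, fps[i].cy - cy))


def shortest_path(adj, source, target):
    """Dijkstra's algorithm; returns the node list from source to target, or None."""
    dist = {source: 0.0}
    prev = {}
    heap = [(0.0, source)]
    while heap:
        d, u = heapq.heappop(heap)
        if u == target:
            break
        if d > dist.get(u, math.inf):
            continue  # stale heap entry
        for v, w in adj[u].items():
            nd = d + w
            if nd < dist.get(v, math.inf):
                dist[v] = nd
                prev[v] = u
                heapq.heappush(heap, (nd, v))
    if target not in dist:
        return None
    path = [target]
    while path[-1] != source:
        path.append(prev[path[-1]])
    return path[::-1]


# Example: a 3x3 grid of 100-unit footprints spaced 80 units apart.
grid = [Footprint(80.0 * c, 80.0 * r, 100.0, 100.0)
        for r in range(3) for c in range(3)]
ref = pick_reference(grid)
path = shortest_path(build_adjacency(grid), 0, ref)
```

In this sketch each image's chain of pairwise registrations to the reference follows `path`, so only adjacent pairs on that path need their overlapping areas matched.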
