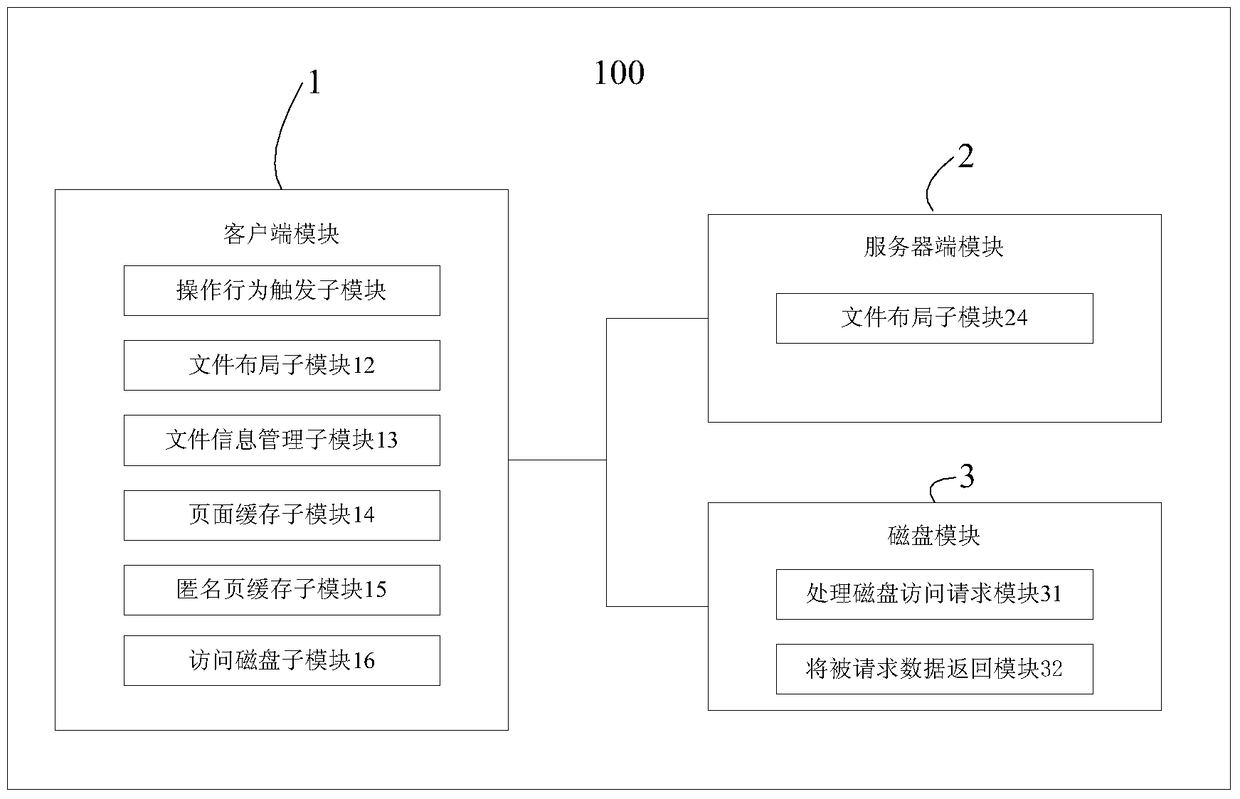

A system and method for busy waiting after pre-reading small files in a parallel network file system

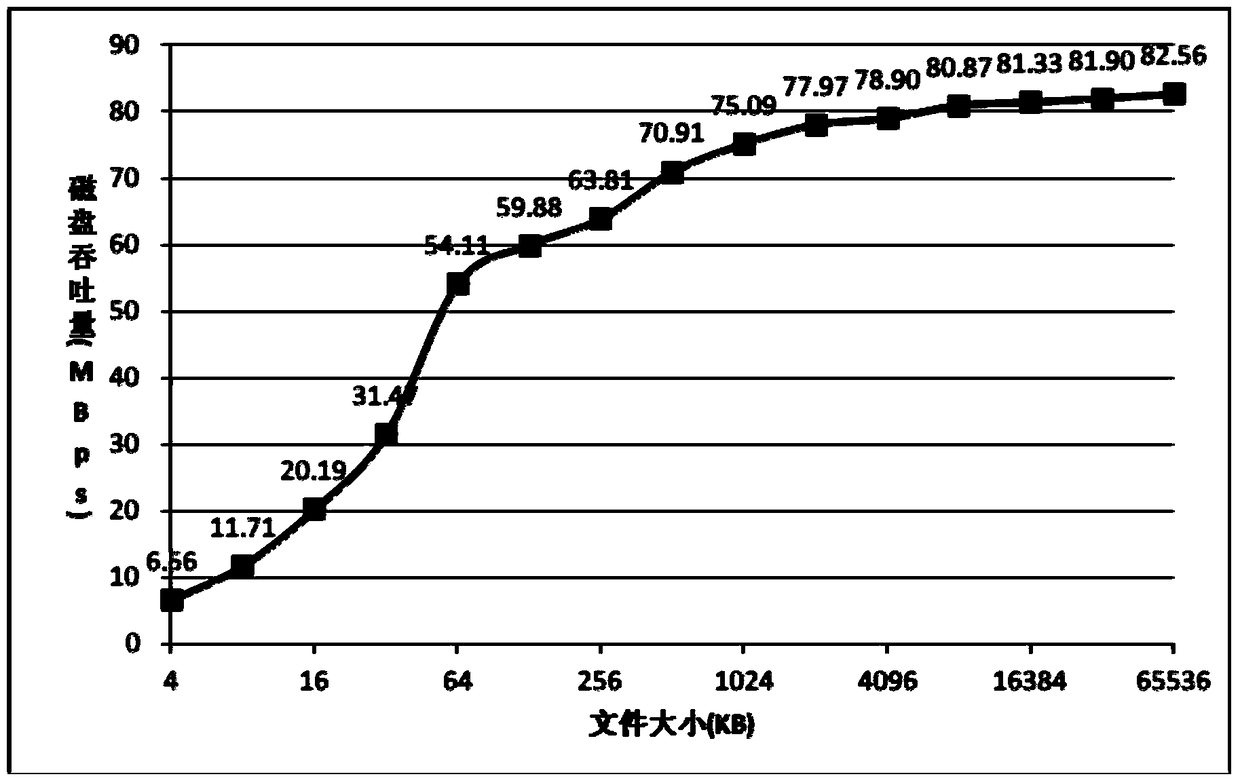

Applies small-file techniques to a network file system, in the systems field, to improve read access performance.

Embodiment Construction

[0044] The present invention is described in detail below with reference to the accompanying drawings and specific embodiments, which are not intended to limit the invention.

[0045] Figure 2 shows a flow chart of the busy-waiting read method.

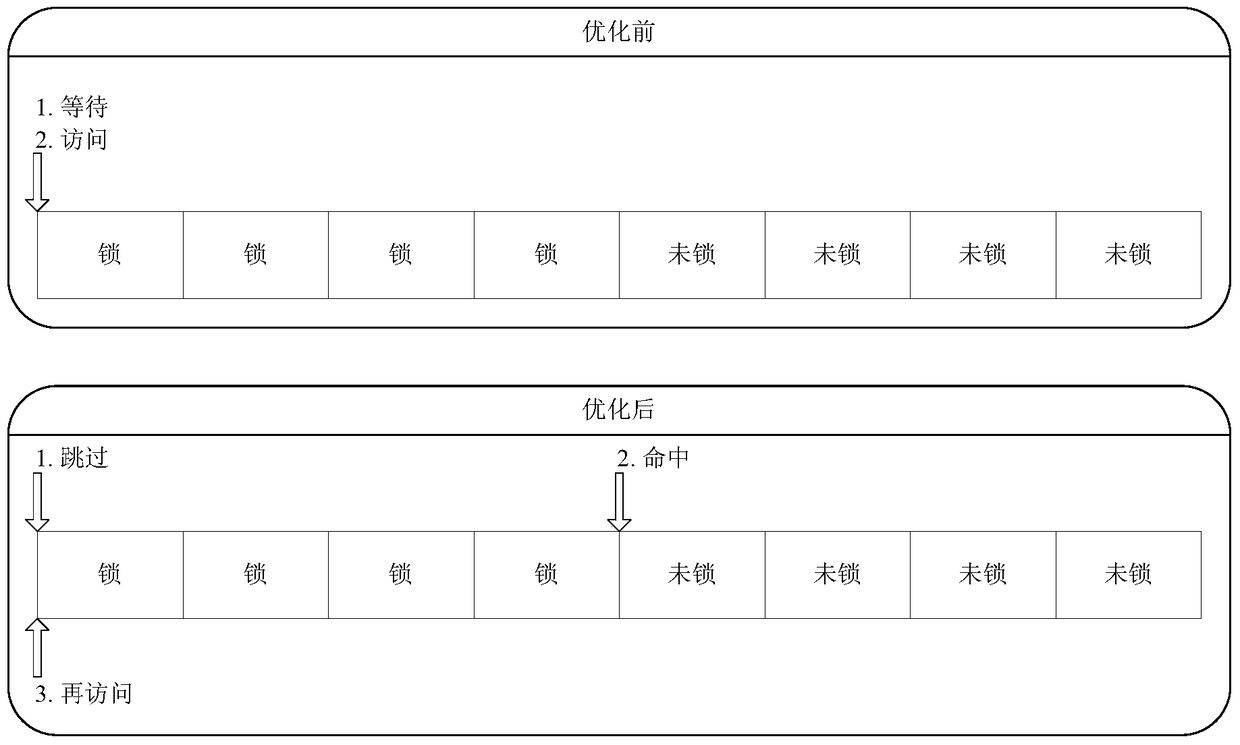

[0046] No matter how fast the storage medium is, access latency still exists. Under highly concurrent access to a large number of small files, a page may have been requested while its result has not yet been returned. As shown in Figure 2, the traditional solution is an idle strategy: the reader simply waits out the disk read latency. This is a poor strategy when subsequent pages are already in the cache. The present invention instead adopts a busy-waiting strategy: when an application hits a locked page in the cache, it first marks the page as "unvisited", then skips it and accesses the next page; once all pages have been accessed, it makes a second visit to...
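The skip-and-revisit idea in paragraph [0046] can be illustrated with a minimal Python sketch. This is not the patented implementation: the `Page` class, the `read_pages_busy_wait` function, and the `consume` and `poll` callbacks are hypothetical names introduced here for illustration. The source text is truncated where it describes the second visit, so the sketch assumes that the second pass revisits the pages previously marked "unvisited" until each one is unlocked.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Page:
    """Hypothetical cache page; `locked` means the read was issued but has not returned."""
    index: int
    locked: bool = False
    data: bytes = b""


def read_pages_busy_wait(
    pages: List[Page],
    consume: Callable[[Page], None],
    poll: Callable[[Page], None],
) -> List[int]:
    """Visit ready pages first, deferring locked ones instead of idling on them.

    Returns the order in which page indices were consumed.
    """
    order: List[int] = []
    unvisited: List[Page] = []

    # First pass: skip any page that is still locked and mark it "unvisited".
    for page in pages:
        if page.locked:
            unvisited.append(page)
            continue
        consume(page)
        order.append(page.index)

    # Assumed second pass: revisit deferred pages until each one is unlocked.
    while unvisited:
        still_locked: List[Page] = []
        for page in unvisited:
            poll(page)  # hypothetical hook that checks whether the I/O has completed
            if page.locked:
                still_locked.append(page)
            else:
                consume(page)
                order.append(page.index)
        unvisited = still_locked
    return order
```

With four pages where only page 1 is locked, and a `poll` callback that clears the lock, the function consumes pages 0, 2, and 3 in the first pass and page 1 in the second, rather than stalling on page 1 while pages 2 and 3 sit ready in the cache.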
