Three-dimensional face recognition method based on expression invariant regions

The invention relates to three-dimensional face recognition, specifically to a recognition method based on expression-invariant regions. It addresses the low accuracy with which expression-invariant regions are determined, and it obtains more accurate expression-invariant regions while avoiding non-convergence.

Embodiment

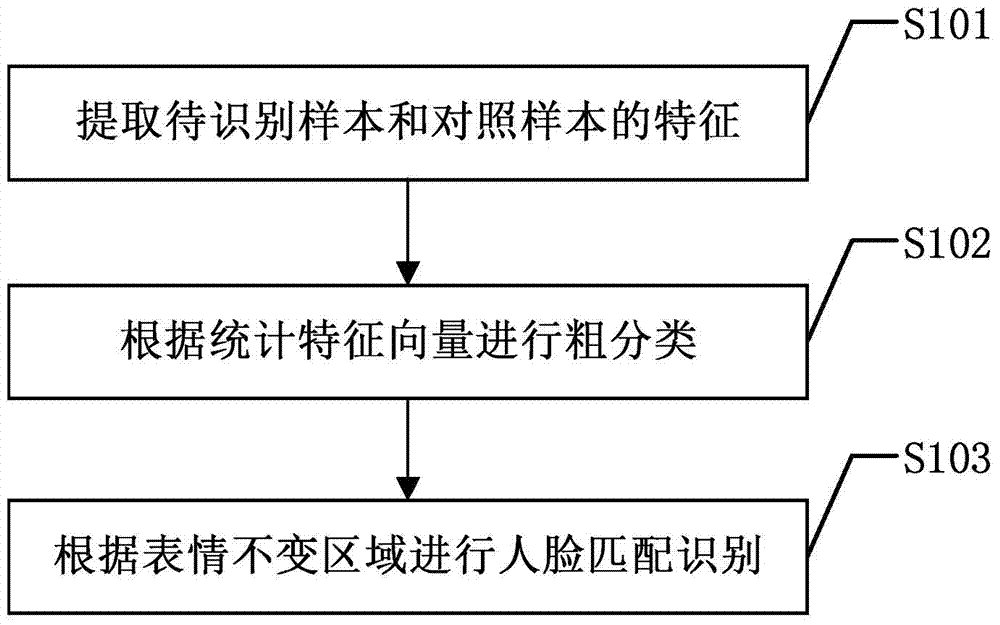

[0037] Figure 1 is a flowchart of the three-dimensional face recognition method based on expression-invariant regions according to the present invention. As shown in Figure 1, the method comprises the following specific steps:

[0038] S101: Extract the features of the sample to be identified and of the control sample:

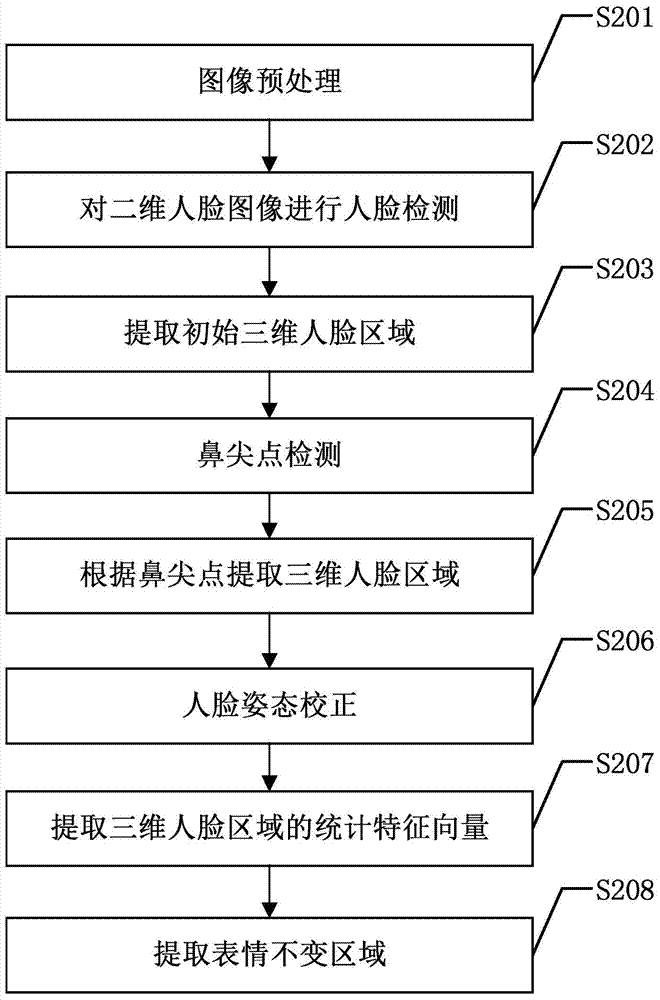

[0039] First, features are extracted separately from the sample to be identified and from the control sample. The present invention uses two types of three-dimensional face region features: statistical feature vectors and expression-invariant region point sets. To make feature extraction accurate, the sample image is preprocessed before face region detection, and the extracted face region is then corrected. Figure 2 is a flowchart of face feature extraction. As shown in Figure 2, the specific steps of face f...
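The ordering described in paragraph [0039] (preprocess, detect the face region, correct it, then produce the two feature types) can be sketched as below. This is only an illustration of the data flow, not the patented procedure: the excerpt does not give the individual steps, so every function body here is a hypothetical placeholder. In particular, the names `FaceFeatures`, `preprocess`, `detect_face_region`, `correct_region` and `extract_features`, the nose-tip heuristic, the `radius` parameter, the mean/standard-deviation statistics, and the upper-half rule for the invariant region are all assumptions introduced for the example.

```python
import math
from dataclasses import dataclass
from statistics import mean, pstdev

Point = tuple[float, float, float]


@dataclass
class FaceFeatures:
    stat_vector: list[float]       # statistical feature vector
    invariant_points: list[Point]  # expression-invariant region point set


def preprocess(points: list[Point]) -> list[Point]:
    # Placeholder preprocessing (assumption): drop points with non-finite coordinates.
    return [p for p in points if all(math.isfinite(c) for c in p)]


def detect_face_region(points: list[Point], radius: float = 100.0) -> list[Point]:
    # Placeholder detection (assumption): treat the point with the largest z
    # as the nose tip and keep everything within `radius` of it.
    tip = max(points, key=lambda p: p[2])
    return [p for p in points if math.dist(p, tip) <= radius]


def correct_region(points: list[Point]) -> list[Point]:
    # Placeholder correction (assumption): move the centroid to the origin.
    cx, cy, cz = (mean([p[i] for p in points]) for i in range(3))
    return [(x - cx, y - cy, z - cz) for x, y, z in points]


def extract_features(points: list[Point]) -> FaceFeatures:
    # Order taken from the text: preprocess, detect, correct, then extract.
    region = correct_region(detect_face_region(preprocess(points)))
    # Placeholder statistics (assumption): per-axis mean and standard deviation.
    stats = [f([p[i] for p in region]) for i in range(3) for f in (mean, pstdev)]
    # Placeholder invariant region (assumption): upper half of the centered face.
    invariant = [p for p in region if p[1] >= 0]
    return FaceFeatures(stat_vector=stats, invariant_points=invariant)
```

The same `extract_features` call would be applied to both the sample to be identified and the control sample, so that the two resulting `FaceFeatures` objects can be compared in the later steps.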
