A 3D-JND threshold calculation method

A 3D-JND threshold calculation method, applied in image communication, electrical components, stereo systems, and related fields. It addresses problems in existing approaches, such as not considering the impact of binocular stereo vision.


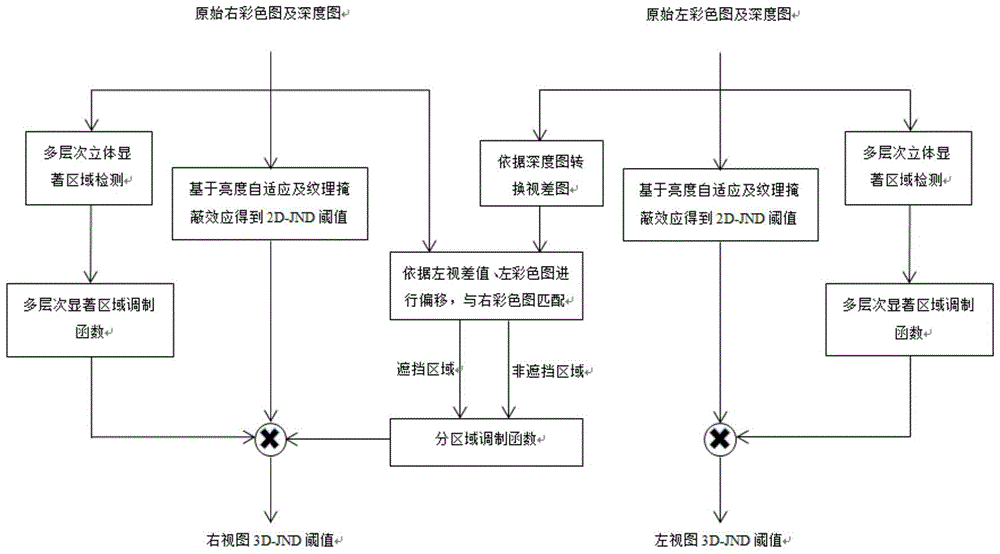


Detailed Description of the Embodiments

[0041] The present invention will be further described below in conjunction with the embodiments shown in the accompanying drawings.

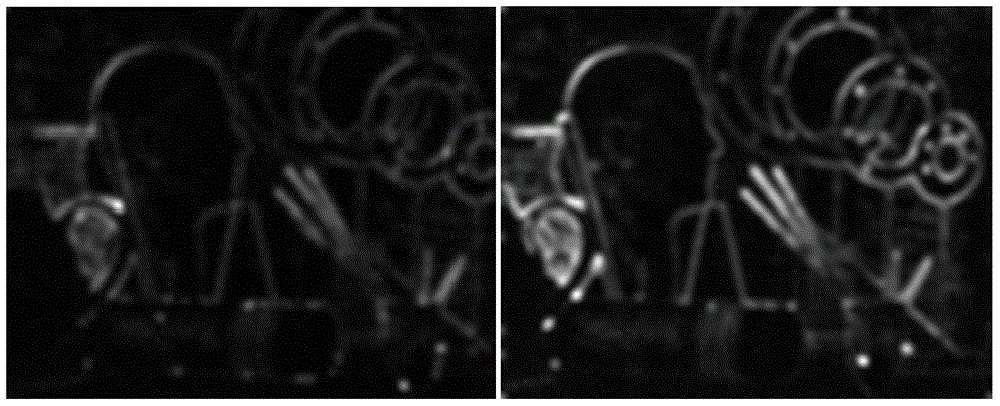

[0042] The example provided by the present invention uses MATLAB 7 as the simulation platform and selects the view1 and view3 viewpoints of the Art image (size: 695×555) from the Middlebury 3D image library as the binocular stereoscopic test images (as shown in Figure 2). Each step of the example is described in detail below:

[0043] In step (1), the left and right color images are selected as test images, and the 2D-JND base thresholds of the left and right views are each obtained using the NAMM model. The base threshold for the left and right views can be calculated according to the following formula:

[0044]

[0045] where T_l(x, y) represents the adaptive threshold of the image based on the background brightness at (x, y), that is, the maximum value of the background brightness model and the spatial...
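The formula for step (1) is not reproduced in this excerpt. As a rough illustration only, the sketch below assumes the combination rule commonly published for NAMM (nonlinear additivity model for masking), in which a luminance-based threshold and a texture-based threshold are added and their overlap is discounted by a gain factor. The function names, the `c_gain` parameter and its default of 0.3, and the sample threshold maps are assumptions for illustration and are not taken from the patent text, whose formula may differ.

```python
# Hypothetical sketch of a NAMM-style base threshold, applied per view.
# Names, the default gain of 0.3, and the sample data are illustrative assumptions.

def namm_combine(t_lum, t_tex, c_gain=0.3):
    """Combine two masking thresholds with nonlinear additivity.

    The sum of both thresholds is reduced by c_gain times the smaller one,
    which accounts for the overlap between the two masking effects.
    """
    return t_lum + t_tex - c_gain * min(t_lum, t_tex)


def base_threshold_map(t_lum_map, t_tex_map, c_gain=0.3):
    """Apply namm_combine pixel by pixel to two equally sized maps (lists of rows)."""
    return [
        [namm_combine(t_lum, t_tex, c_gain) for t_lum, t_tex in zip(lum_row, tex_row)]
        for lum_row, tex_row in zip(t_lum_map, t_tex_map)
    ]


if __name__ == "__main__":
    # Made-up 2x2 threshold maps standing in for the left and right views.
    views = {
        "left": ([[4.0, 3.0], [6.0, 5.0]], [[2.0, 3.0], [0.0, 8.0]]),
        "right": ([[5.0, 3.0], [4.0, 6.0]], [[1.0, 2.0], [4.0, 0.0]]),
    }
    for name, (t_lum_map, t_tex_map) in views.items():
        print(name, base_threshold_map(t_lum_map, t_tex_map))
```

The same function is run once per view, matching the text's statement that the base thresholds of the left and right views are obtained separately. In practice the two input maps would be computed from each view's background luminance and local texture rather than supplied by hand.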
