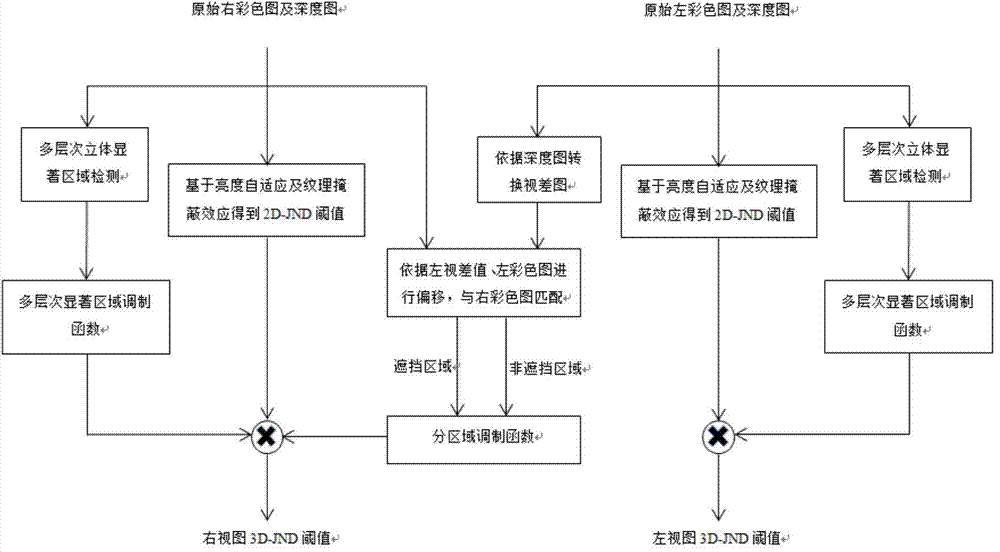

Method for calculating 3D-JND threshold value

A 3D-JND calculation method in the technical fields of image communication, electrical components and stereoscopic systems. It addresses the problem that existing JND models do not consider binocular stereo vision or its effect on the visibility threshold.


Embodiment Construction

[0041] The present invention will be further described below in conjunction with the embodiments shown in the drawings.

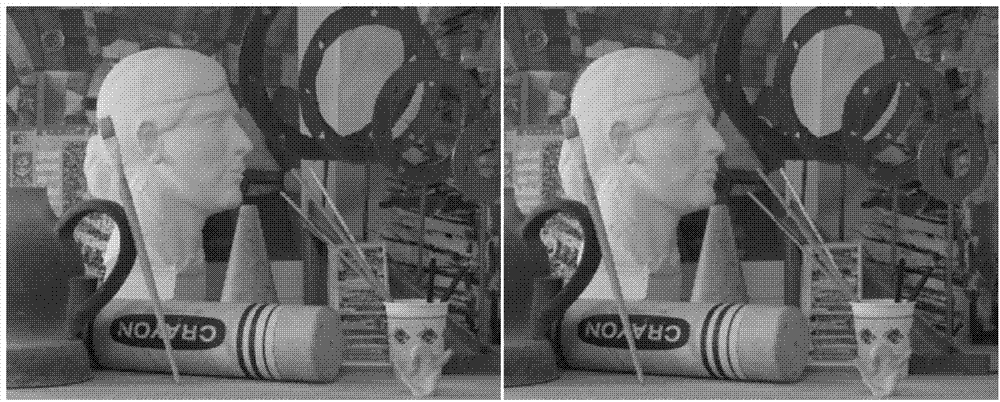

[0042] The example provided by the present invention uses MATLAB 7 as the simulation platform. The view1 and view3 viewpoints of Art (size 695×555) from the Middlebury 3D image library are selected as the binocular stereo test images (as shown in Figure 2). Each step of this example is described in detail below.

[0043] Step (1): Select the left and right color images as test images and use the NAMM model to obtain the 2D-JND basic thresholds of the left and right views. The basic threshold of each view is calculated with the following formula:

[0044]

$$SJND_Y(x,y) = T^{l}(x,y) + T_Y^{t}(x,y) - C_Y^{lt}\cdot\min\{T^{l}(x,y),\, T_Y^{t}(x,y)\} \tag{1}$$
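Equation (1) combines a luminance-adaptation threshold T^l and a texture-masking threshold T_Y^t, subtracting a fraction C_Y^lt of the smaller one to account for the overlap between the two masking effects. The sketch below computes it per pixel with NumPy. It makes several assumptions that are not stated in the text above. T^l is taken to follow the Chou-Li luminance-adaptation curve commonly used with NAMM, evaluated on a precomputed background-luminance map `bg`. The texture threshold `t_t` is supplied as a precomputed array. The default `c_lt=0.3` is an assumed value. The function names `luminance_adaptation` and `namm_jnd` are hypothetical helpers, not part of the patent.

```python
import numpy as np

def luminance_adaptation(bg):
    """Assumed Chou-Li luminance-adaptation threshold T^l for background luminance bg in [0, 255]."""
    bg = np.asarray(bg, dtype=np.float64)
    # Dark backgrounds: threshold rises steeply as luminance falls toward 0.
    dark = 17.0 * (1.0 - np.sqrt(bg / 127.0)) + 3.0
    # Bright backgrounds: threshold grows linearly above mid-grey.
    bright = 3.0 / 128.0 * (bg - 127.0) + 3.0
    return np.where(bg <= 127.0, dark, bright)

def namm_jnd(t_l, t_t, c_lt=0.3):
    """Eq. (1): SJND_Y = T^l + T_Y^t - C_Y^lt * min(T^l, T_Y^t), element-wise.

    c_lt = 0.3 is an assumed default for the luminance (Y) channel.
    """
    t_l = np.asarray(t_l, dtype=np.float64)
    t_t = np.asarray(t_t, dtype=np.float64)
    return t_l + t_t - c_lt * np.minimum(t_l, t_t)
```

For example, `luminance_adaptation([0.0, 127.0, 255.0])` gives thresholds of 20, 3 and 6. `namm_jnd(20.0, 5.0)` gives 23.5. The same two calls would be applied separately to the left and right views. With 0 ≤ C_Y^lt ≤ 1, the result is never smaller than the larger of the two component thresholds and never larger than their sum, which matches the intent of a partially additive masking model.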

[0045...
