Indoor scene positioning method based on a hybrid camera

This indoor scene localization technology, applied in the field of hybrid-camera-based indoor scene localization, addresses problems such as the limited scalability of existing methods and the difficulty of computing key-frame similarity, and improves localization accuracy and efficiency.


Embodiment Construction

[0027] To describe the present invention more specifically, its technical solutions are described in detail below with reference to the accompanying drawings and specific embodiments.

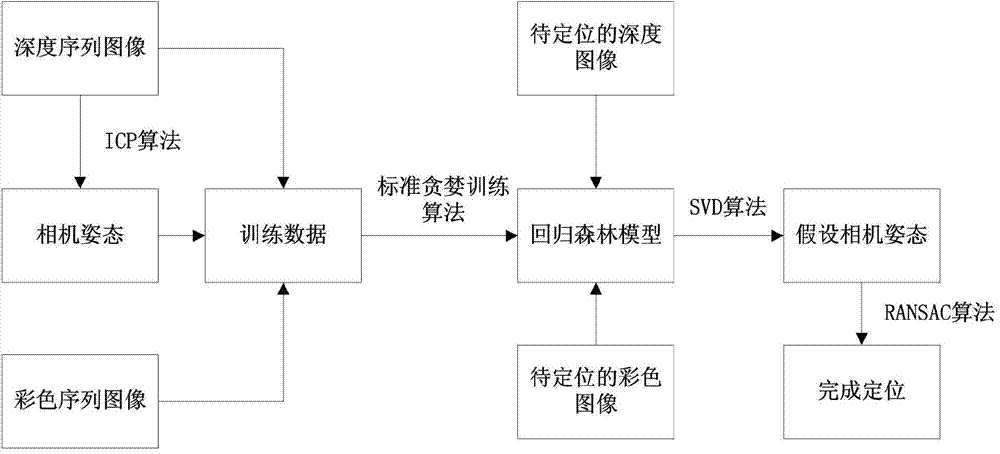

[0028] As shown in Figure 1, the hybrid-camera-based indoor scene positioning method of the present invention comprises the following steps:

[0029] (1) Capture the indoor scene with an RGB-D hybrid camera to obtain an RGB image sequence and a depth image sequence;

[0030] (2) Extract the depth value of each pixel in every frame of the depth image sequence, generate a 3D point cloud of the indoor scene in real time, and compute the hybrid camera parameters in real time;
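Step (2) rests on back-projecting each depth pixel through the camera's intrinsic model. The sketch below assumes a standard pinhole model with intrinsics `fx`, `fy`, `cx`, `cy` and a depth scale of 1000 (depth stored in millimetres, as many consumer RGB-D sensors do). None of these values, nor the hypothetical helpers `depth_to_point_cloud` and `transform_points`, come from the patent; this illustrates the general technique, not the patented implementation.

```python
import numpy as np

def depth_to_point_cloud(depth, fx, fy, cx, cy, depth_scale=1000.0):
    """Back-project an (H, W) depth image into an (N, 3) camera-frame point cloud.

    Assumes a pinhole camera; pixels with depth 0 are treated as invalid and dropped.
    """
    h, w = depth.shape
    # Pixel coordinate grids: u runs along columns, v along rows.
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    z = depth.astype(np.float64) / depth_scale  # raw units -> metres (assumed scale)
    valid = z > 0
    # Pinhole model: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy.
    x = (u[valid] - cx) * z[valid] / fx
    y = (v[valid] - cy) * z[valid] / fy
    return np.stack([x, y, z[valid]], axis=1)

def transform_points(points, T):
    """Map (N, 3) camera-frame points into the world frame with a 4x4 pose T."""
    return points @ T[:3, :3].T + T[:3, 3]

# Tiny example: a 2x2 depth image in millimetres with two valid pixels.
# The intrinsics here are made-up illustration values, not calibration data.
depth = np.array([[0, 1000], [2000, 0]], dtype=np.uint16)
cloud = depth_to_point_cloud(depth, fx=500.0, fy=500.0, cx=0.5, cy=0.5)

# Assumed camera pose: camera origin 1 m along the world x axis, no rotation.
T_wc = np.eye(4)
T_wc[:3, 3] = [1.0, 0.0, 0.0]
world = transform_points(cloud, T_wc)
```

Accumulating `transform_points` output across frames, using each frame's estimated pose, is one simple way to build up the scene point cloud incrementally.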

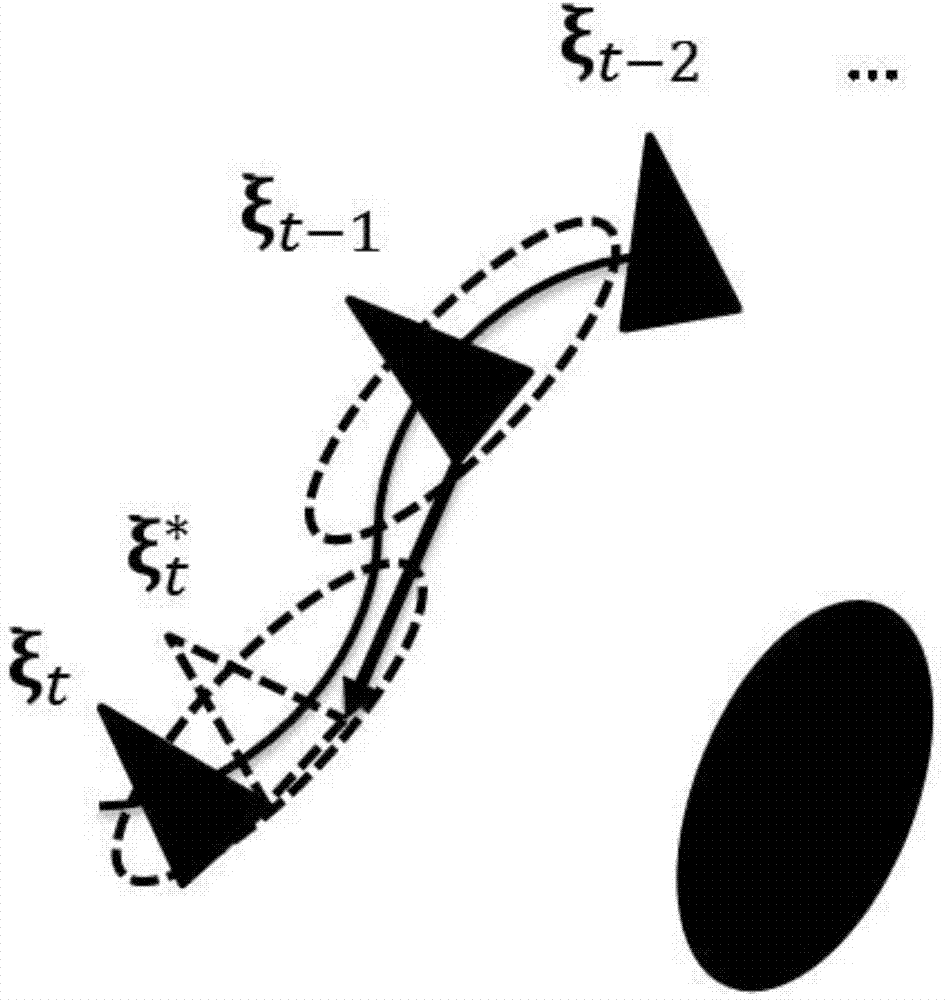

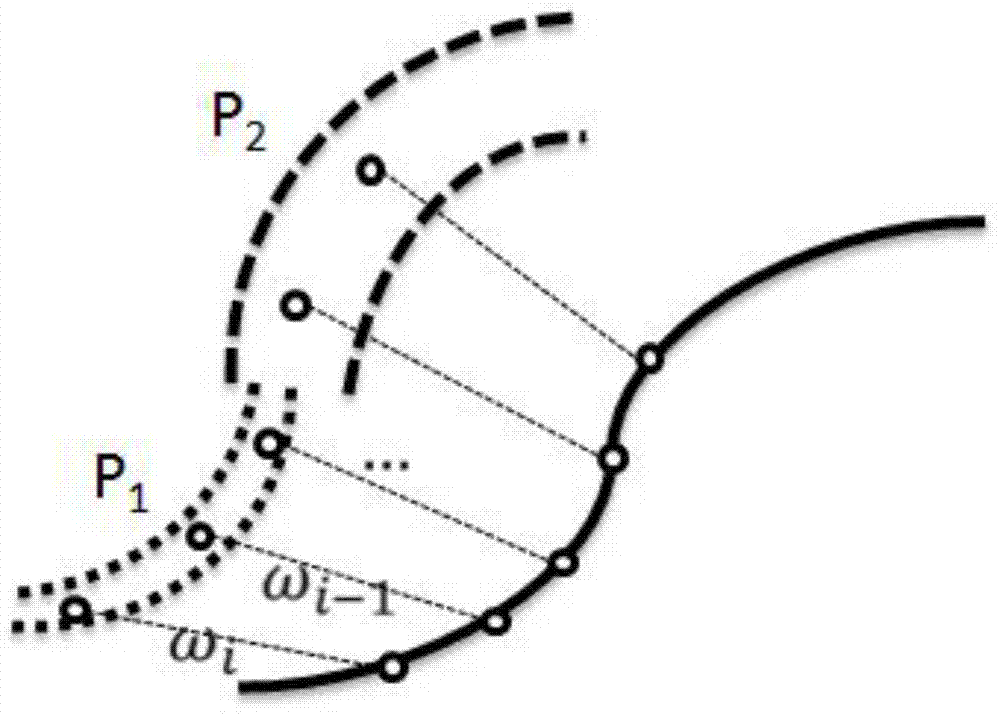

[0031] In this embodiment, the traditional ICP (Iterative Closest Point) algorithm for estimating the camera pose is taken as the basis and optimized, mainly through camera pose motion compensation and weighted ICP point cloud registration. The ICP...
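The two optimizations named above can be illustrated with a minimal sketch. Because the excerpt is truncated, both the motion model and the weighting rule here are assumptions: the hypothetical `predict_pose` uses a constant-velocity model to compensate camera motion and supply the ICP initial guess, and the hypothetical `weighted_icp` runs point-to-point ICP with Cauchy weights, solving each step with a weighted Kabsch (SVD) fit. This is a generic weighted ICP, not the patent's specific formulation.

```python
import numpy as np

def predict_pose(T_prev, T_prev2):
    """Motion compensation under an assumed constant-velocity model.

    T_prev, T_prev2: 4x4 camera-to-world poses of frames k-1 and k-2.
    Returns the predicted pose of frame k, used as the ICP initial guess.
    """
    delta = np.linalg.inv(T_prev2) @ T_prev  # relative motion from k-2 to k-1
    return T_prev @ delta                     # apply the same motion once more

def weighted_rigid_fit(src, dst, w):
    """Weighted Kabsch: R, t minimising sum_i w_i * ||R @ src_i + t - dst_i||^2."""
    w = w / w.sum()
    mu_s, mu_d = w @ src, w @ dst  # weighted centroids
    H = (src - mu_s).T @ ((dst - mu_d) * w[:, None])  # 3x3 weighted cross-covariance
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so R is a proper rotation (det = +1).
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])
    R = Vt.T @ D @ U.T
    return R, mu_d - R @ mu_s

def weighted_icp(src, dst, T_init, iters=30, sigma=0.05, tol=1e-10):
    """Point-to-point ICP with per-correspondence weights.

    The Cauchy weight w = 1 / (1 + d^2 / sigma^2) is a hypothetical choice that
    down-weights distant (likely wrong) matches; the patent's own rule is not
    given in this excerpt.
    """
    T = T_init.copy()
    prev_err = np.inf
    for _ in range(iters):
        moved = src @ T[:3, :3].T + T[:3, 3]
        # Brute-force nearest neighbours; fine for a small sketch.
        d2 = ((moved[:, None, :] - dst[None, :, :]) ** 2).sum(axis=2)
        idx = d2.argmin(axis=1)
        nn_d2 = d2[np.arange(len(src)), idx]
        w = 1.0 / (1.0 + nn_d2 / sigma**2)
        R, t = weighted_rigid_fit(moved, dst[idx], w)
        step = np.eye(4)
        step[:3, :3], step[:3, 3] = R, t
        T = step @ T  # compose the incremental update
        err = float((w * nn_d2).sum() / w.sum())  # weighted residual before this step
        if abs(prev_err - err) < tol:
            break
        prev_err = err
    return T

def pose(theta_z, t):
    """4x4 pose: rotation theta_z (rad) about z, then translation t."""
    c, s = np.cos(theta_z), np.sin(theta_z)
    T = np.eye(4)
    T[:3, :3] = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
    T[:3, 3] = t
    return T

# Synthetic check: a 4x4x4 grid (10 cm spacing) moved by a known small pose.
g = np.arange(4) * 0.1
src = np.stack(np.meshgrid(g, g, g, indexing="ij"), axis=-1).reshape(-1, 3)
T_true = pose(0.05, [0.01, -0.02, 0.005])
dst = src @ T_true[:3, :3].T + T_true[:3, 3]
T_est = weighted_icp(src, dst, np.eye(4))
```

In a tracking loop, the initial guess for frame k would be `predict_pose(T_{k-1}, T_{k-2})` rather than the identity used in the synthetic check, which keeps the starting error small so nearest-neighbour matches are more likely to be correct. At realistic point counts, a k-d tree such as `scipy.spatial.cKDTree` would replace the brute-force search.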

