Method and system for processing multiview videos for view synthesis using motion vector predictor list

A motion vector prediction and multi-view video technology, applied in the field of multi-view video encoding and decoding, that addresses the problem of being unable to encode multi-view videos that are both temporally and spatially correlated.


Embodiment Construction

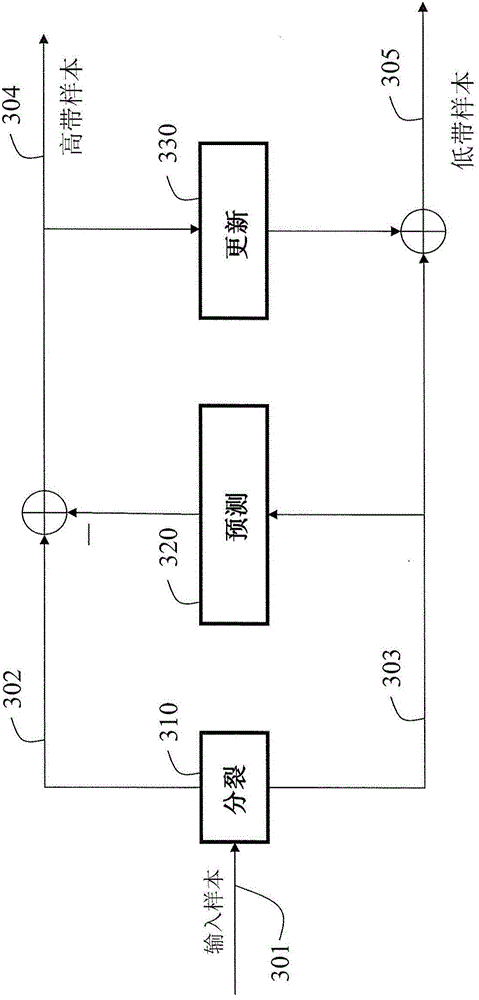

[0048] One embodiment of the present invention provides a joint temporal/inter-view processing method for encoding and decoding frames of multi-view videos. A multi-view video is a video of a scene acquired by multiple cameras with different poses. The pose of a camera is defined as its 3D (x, y, z) position and its orientation. Each pose corresponds to a "view" of the scene.
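To make the notion of a pose concrete, the following is a minimal sketch of a camera pose as a 3D position plus an orientation. The class name `CameraPose`, the use of a 3x3 rotation matrix for the orientation, and the convention that its rows are the camera axes expressed in world coordinates are all assumptions for illustration, not part of the original text.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Tuple[Vec3, Vec3, Vec3]


@dataclass(frozen=True)
class CameraPose:
    """Hypothetical pose container: 3D position plus orientation."""

    position: Vec3  # (x, y, z) of the camera centre in world coordinates
    rotation: Mat3  # assumed convention: rows are the camera x, y, z axes in world coordinates

    def world_to_camera(self, p: Vec3) -> Vec3:
        # Move the origin to the camera centre, then project onto each camera axis.
        d = tuple(pi - ci for pi, ci in zip(p, self.position))
        return tuple(sum(r * di for r, di in zip(row, d)) for row in self.rotation)


IDENTITY: Mat3 = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))

# Each pose corresponds to one view; here, two cameras on a hypothetical horizontal rig.
views = {
    0: CameraPose(position=(0.0, 0.0, 0.0), rotation=IDENTITY),
    1: CameraPose(position=(0.1, 0.0, 0.0), rotation=IDENTITY),
}
```

The same world point lands at different camera coordinates in each view, which is the geometric source of the inter-view disparity exploited later.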

[0049] The method exploits the temporal correlation between frames in the same video acquired from a specific camera pose, and the spatial correlation between synchronized frames in different videos acquired from multiple camera views. Additionally, "synthesized" frames can be correlated, as described below.
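The two kinds of correlation can be pictured by indexing every frame by (view, time). The minimal sketch below uses hypothetical function names and assumes the cameras are ordered so that adjacent view indices are neighboring cameras; it lists candidate reference frames of each kind. Temporal neighbors share the view index, while spatial (inter-view) neighbors share the time index.

```python
from typing import List, Tuple

Frame = Tuple[int, int]  # hypothetical indexing: (view index v, time index t)


def temporal_neighbors(frame: Frame, num_frames: int) -> List[Frame]:
    """Frames of the same view at the previous and next time instants."""
    v, t = frame
    return [(v, s) for s in (t - 1, t + 1) if 0 <= s < num_frames]


def spatial_neighbors(frame: Frame, num_views: int) -> List[Frame]:
    """Synchronized frames (same time instant) from the adjacent views."""
    v, t = frame
    return [(w, t) for w in (v - 1, v + 1) if 0 <= w < num_views]
```

For example, the first frame of the middle view in a three-camera setup has one temporal neighbor, `(1, 1)`, and two spatial neighbors, `(0, 0)` and `(2, 0)`.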

[0050] In one embodiment, the temporal correlation is exploited using motion-compensated temporal filtering (MCTF), while the spatial correlation is exploited using disparity-compensated inter-view filtering (DCVF).
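MCTF is commonly implemented with lifting steps, and the same structure applies across views when disparity vectors replace motion vectors. The sketch below shows one level of a Haar-style lifting transform under simplifying assumptions: frames are 1-D lists, each motion or disparity vector is a single integer shift, and borders are clamped. The function names are hypothetical, and this is a generic illustration of compensated lifting, not necessarily the exact filter used in the patent.

```python
from typing import List, Sequence, Tuple

Signal = List[float]


def shift(x: Sequence[float], d: int) -> Signal:
    """Compensate a 1-D frame by integer displacement d, clamping at the borders."""
    n = len(x)
    return [x[min(max(i - d, 0), n - 1)] for i in range(n)]


def lifting_forward(frames: Sequence[Sequence[float]],
                    vectors: Sequence[int]) -> Tuple[List[Signal], List[Signal]]:
    """One lifting level along time (MCTF) or across views (DCVF).

    frames: 2K equal-length frames; vectors[k] maps frame 2k onto frame 2k+1.
    """
    low, high = [], []
    for k, d in enumerate(vectors):
        even, odd = frames[2 * k], frames[2 * k + 1]
        # Predict step: high band = residual of the odd frame after compensated prediction.
        h = [o - p for o, p in zip(odd, shift(even, d))]
        # Update step: low band = even frame plus half the inversely compensated residual.
        l = [e + 0.5 * u for e, u in zip(even, shift(h, -d))]
        low.append(l)
        high.append(h)
    return low, high


def lifting_inverse(low: Sequence[Signal], high: Sequence[Signal],
                    vectors: Sequence[int]) -> List[Signal]:
    """Undo the update step, then the predict step, recovering the original frames."""
    frames: List[Signal] = []
    for l, h, d in zip(low, high, vectors):
        even = [li - 0.5 * u for li, u in zip(l, shift(h, -d))]
        odd = [hi + p for hi, p in zip(h, shift(even, d))]
        frames.extend([even, odd])
    return frames


f0 = [0, 0, 10, 10, 10, 0, 0, 0]
f1 = [0, 0, 0, 10, 10, 10, 0, 0]  # f0 moved right by one sample
low, high = lifting_forward([f0, f1], [1])
# high[0] → [0, 0, 0, 0, 0, 0, 0, 0] because the shift compensates the motion exactly
```

Because each lifting step is inverted by subtracting exactly what was added, reconstruction is exact for any choice of vectors. Accurate motion or disparity vectors only make the high band smaller and therefore cheaper to code.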

[0051] In another embodiment of the invention, the spatial correlation uses predictions from one view of a synthesized frame genera...
