Multi-information fusion based gesture segmentation method under complex scenarios

A multi-information fusion technology for complex scenes, applied in the field of gesture segmentation, addresses problems such as failure to meet real-time requirements, restrictions on user freedom, and degraded real-time performance.

Embodiment

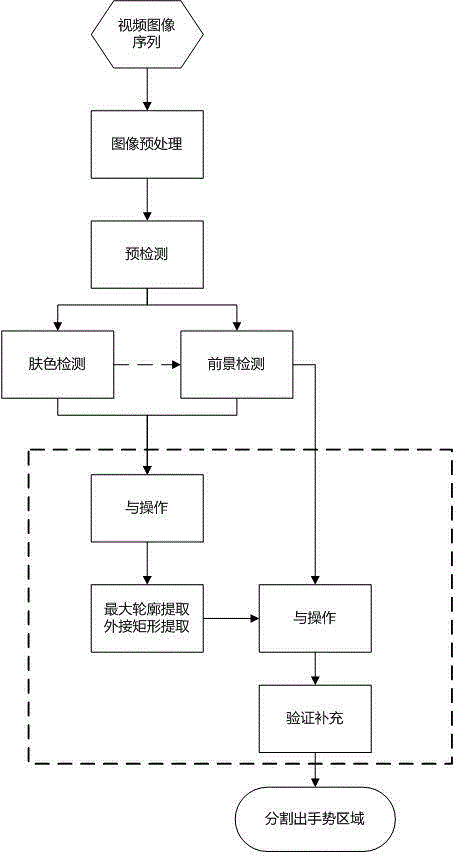

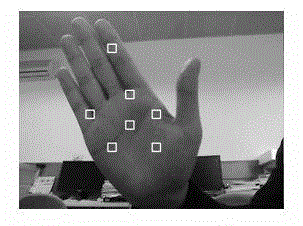

[0092] In this embodiment, a video sequence (640×480 pixels, 30 fps) captured by a Logitech C710 webcam is processed. The video was shot arbitrarily in an indoor scene with a complex background: background objects with skin-like colors appear, the lighting changes, and other body parts such as the user's face and arms are also visible. Figure 1 is a schematic diagram of the overall process flow of the present invention. This embodiment includes the following steps:

[0093] Step 1: Image preprocessing. Each frame of the video sequence is smoothed by replacing each pixel with the average of the pixel values in its 3×3 window, which removes some of the noise present in the image. The kernel function used for filtering is:

[0094] h = 1 / (hsize.width * hsize.hei...
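
The averaging step in Step 1 can be illustrated with a minimal Python sketch. It assumes a single-channel (grayscale) frame stored as a NumPy array and a kernel whose weights are all equal to 1 divided by the window area. The function names `box_kernel` and `mean_filter` are hypothetical, and the border handling (edge replication) is an assumption, since the text does not say how border pixels are treated.

```python
import numpy as np

def box_kernel(width=3, height=3):
    """Normalized averaging kernel: every weight is 1 / (width * height)."""
    return np.ones((height, width), dtype=np.float64) / (width * height)

def mean_filter(frame, width=3, height=3):
    """Smooth a 2-D grayscale frame by averaging each pixel's window.

    Border pixels are handled by replicating edge values. This is an
    assumption; the source text does not specify border treatment.
    """
    frame = np.asarray(frame, dtype=np.float64)
    kernel = box_kernel(width, height)
    pad_y, pad_x = height // 2, width // 2
    padded = np.pad(frame, ((pad_y, pad_y), (pad_x, pad_x)), mode="edge")
    out = np.zeros_like(frame)
    rows, cols = frame.shape
    # Accumulate the weighted, shifted copies of the frame (one per kernel tap).
    for dy in range(height):
        for dx in range(width):
            out += kernel[dy, dx] * padded[dy:dy + rows, dx:dx + cols]
    return out

if __name__ == "__main__":
    # A single bright pixel is spread evenly over its 3x3 neighborhood.
    frame = np.zeros((5, 5))
    frame[2, 2] = 9.0
    print(mean_filter(frame))
```

For a color frame, the same function could be applied to each channel separately; that is a choice made for this sketch, not something stated in the text.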
