Multi-view action recognition method

A technology in the field of computer vision relating to action recognition from multiple views. It addresses problems such as poor recognition performance, unsatisfactory results on complex motions, and sensitivity to changes in motion duration and to noise. It achieves high accuracy and effective recognition, and increases the discriminability between actions.


Detailed Description of the Embodiments

[0040] The present invention is described in detail below with reference to the accompanying drawings.

[0041] The multi-view action recognition method comprises two processes: action training and action recognition.

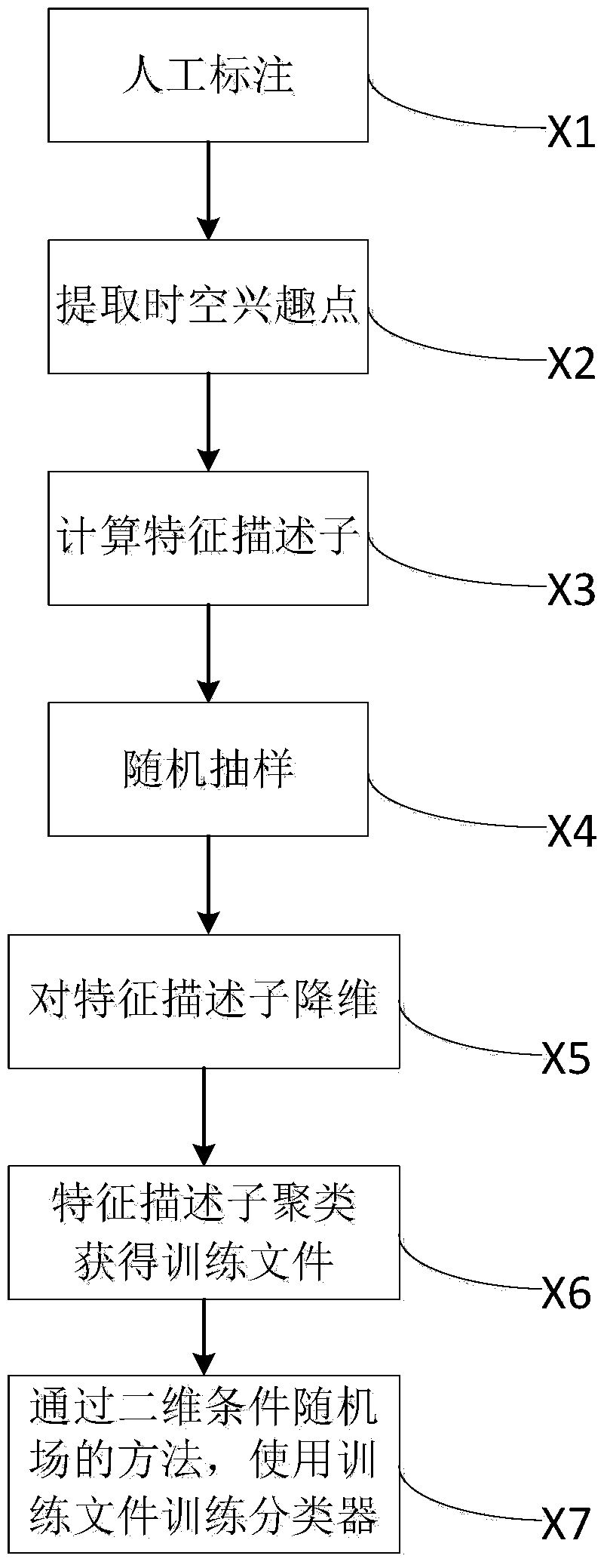

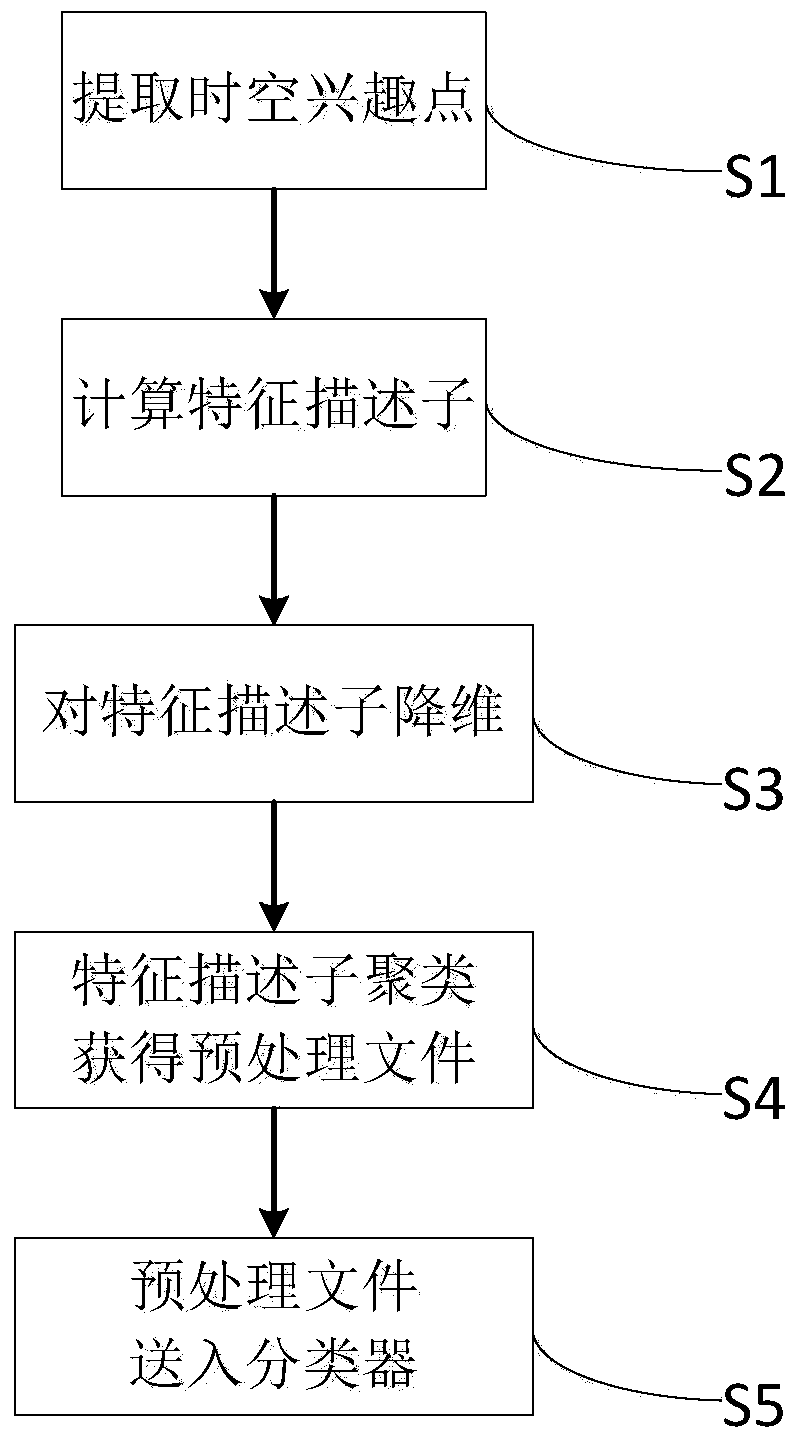

[0042] As shown in Figure 1, the action training process includes the following steps:

[0043] X1: Manually label the training video files, which cover a total of 4 viewing angles and 10 action classes;

[0044] X2: Extract spatio-temporal interest points from the training video files; the present invention uses methods such as Gaussian filtering and Gabor filtering;
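
Step X2 names Gaussian and Gabor filtering but does not say how they are combined. One well-known combination is the periodic ("cuboid") detector of Dollár et al. (2005): smooth each frame with a 2-D spatial Gaussian, filter along time with a quadrature pair of 1-D Gabor filters, sum the squared responses, and keep local maxima. The sketch below assumes that detector; it is not the patent's own implementation. The function names and the values of `sigma`, `tau`, `omega`, and `rel_thresh` are hypothetical, and `sliding_window_view` requires NumPy 1.20 or later.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def _filter_along_axis(x, kernel, axis):
    """Convolve `x` with a 1-D `kernel` along `axis`, keeping the input shape."""
    r = len(kernel) // 2
    pad = [(0, 0)] * x.ndim
    pad[axis] = (r, r)
    padded = np.pad(x, pad, mode="edge")  # replicate borders so output size == input size
    return np.apply_along_axis(lambda v: np.convolve(v, kernel, mode="valid"), axis, padded)


def periodic_response(video, sigma=2.0, tau=4.0, omega=0.125):
    """Response R = (I*g*h_ev)^2 + (I*g*h_od)^2 for a (T, H, W) video.

    g: 2-D spatial Gaussian with std `sigma` (applied separably along y and x).
    h_ev, h_od: temporal Gabor quadrature pair with envelope width `tau` and
    frequency `omega` in cycles per frame. All values are illustrative assumptions.
    """
    video = np.asarray(video, dtype=float)
    rs = int(np.ceil(3 * sigma))
    xs = np.arange(-rs, rs + 1)
    g = np.exp(-xs**2 / (2 * sigma**2))
    g /= g.sum()
    smoothed = _filter_along_axis(_filter_along_axis(video, g, 1), g, 2)

    rt = int(np.ceil(2 * tau))
    t = np.arange(-rt, rt + 1)
    envelope = np.exp(-t**2 / tau**2)
    h_ev = -np.cos(2 * np.pi * omega * t) * envelope
    h_od = -np.sin(2 * np.pi * omega * t) * envelope
    even = _filter_along_axis(smoothed, h_ev, 0)
    odd = _filter_along_axis(smoothed, h_od, 0)
    return even**2 + odd**2


def detect_interest_points(video, rel_thresh=0.1, **kwargs):
    """Return (t, y, x) indices of 3x3x3 local maxima above rel_thresh * max(R)."""
    R = periodic_response(video, **kwargs)
    padded = np.pad(R, 1, mode="constant", constant_values=-np.inf)
    local_max = sliding_window_view(padded, (3, 3, 3)).max(axis=(-3, -2, -1))
    peaks = (R == local_max) & (R > rel_thresh * R.max())
    return np.argwhere(peaks)
```

`detect_interest_points(video)` returns an `(N, 3)` integer array of `(t, y, x)` voxel indices, which would serve as the centres of the regions described in step X3.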

[0045] X3: Compute the feature descriptor of the region in which each spatio-temporal interest point is located. In the present invention, the feature descriptor comprises a histogram of oriented gradients (HOG) and a histogram of optical flow (HOF);
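
Step X3 pairs an appearance descriptor (gradient orientations) with a motion descriptor (optical-flow orientations). The following is a simplified single-cell sketch under stated assumptions: the region is a `(T, h, w)` grey-level cuboid, its optical flow `(u, v)` has already been computed by some unspecified method, each histogram uses 8 magnitude-weighted orientation bins, and each half is L2-normalized before concatenation. The names `orientation_histogram` and `hog_hof_descriptor` are hypothetical; commonly used HOG/HOF variants additionally split the region into a spatio-temporal grid of cells.

```python
import numpy as np


def orientation_histogram(dx, dy, bins=8):
    """Magnitude-weighted histogram of vector orientations over [0, 2*pi)."""
    angle = np.mod(np.arctan2(dy, dx), 2 * np.pi)
    magnitude = np.hypot(dx, dy)
    hist, _ = np.histogram(angle, bins=bins, range=(0.0, 2 * np.pi), weights=magnitude)
    return hist


def hog_hof_descriptor(cuboid, flow, bins=8, eps=1e-12):
    """Concatenate HOG and HOF for one spatio-temporal region.

    cuboid: (T, h, w) grey-level patch around an interest point.
    flow:   (T, h, w, 2) optical flow (u, v) for the same patch (assumed precomputed).
    """
    cuboid = np.asarray(cuboid, dtype=float)
    gy, gx = np.gradient(cuboid, axis=(1, 2))  # spatial gradients within each frame
    hog = orientation_histogram(gx, gy, bins)
    hof = orientation_histogram(flow[..., 0], flow[..., 1], bins)
    # Normalize each half so neither dominates the concatenated vector
    hog = hog / (np.linalg.norm(hog) + eps)
    hof = hof / (np.linalg.norm(hof) + eps)
    return np.concatenate([hog, hof])
```

With 8 bins per half, each region yields a 16-dimensional vector; the bin count is a free design choice that trades angular resolution against descriptor length.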

[0046] X4: Randomly sample the set of feature descriptors from step X3 to form a subset;
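
Step X4 does not state the subset size or the sampling scheme. A minimal sketch, assuming uniform sampling without replacement, with a hypothetical subset size `n_samples` and an optional seed for reproducibility:

```python
import numpy as np


def sample_descriptors(descriptors, n_samples, seed=None):
    """Return a uniformly random subset of descriptor rows, drawn without replacement."""
    descriptors = np.asarray(descriptors)
    n = min(n_samples, len(descriptors))  # cannot draw more rows than exist
    rng = np.random.default_rng(seed)
    idx = rng.choice(len(descriptors), size=n, replace=False)
    return descriptors[idx]
```

Each row is one descriptor (for example one HOG/HOF vector), so the subset keeps the original feature dimensionality and only reduces the number of samples.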

[0047] X5: Reduce the dimensionality of all fe...
