Inertial/visual integrated navigation method adopting iterated extended Kalman filter and neural network

An extended Kalman filter and neural network technique, applied in the field of integrated navigation in complex environments. It addresses two problems: the accuracy of an inertial/visual integrated navigation system declines, and an inertial navigation system cannot provide long-term high-precision navigation. The stated effect is a broader scope of application.


Embodiment Construction

[0027] The present invention will be further explained below in conjunction with the accompanying drawings.

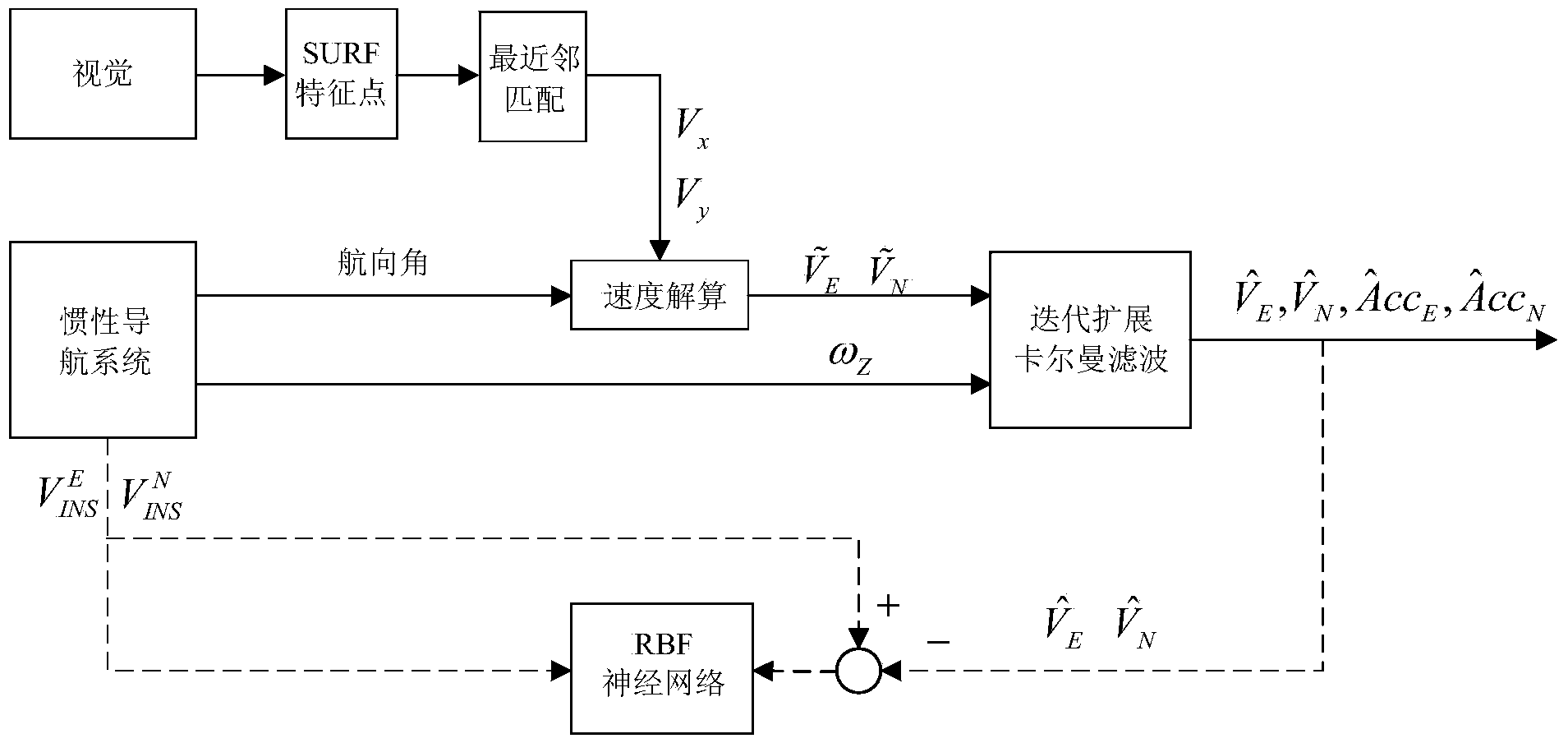

[0028] An inertial/visual integrated navigation method using an iterated extended Kalman filter and a neural network includes the following steps:

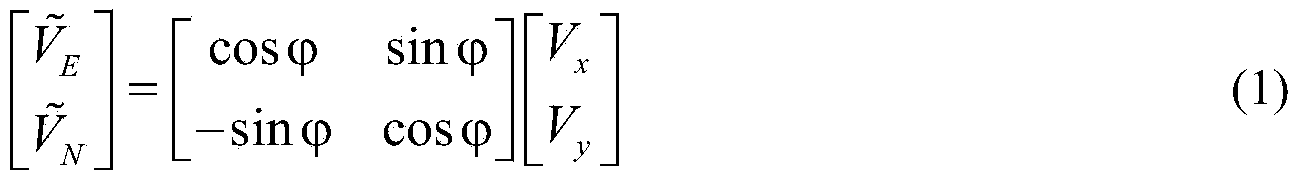

[0029] Step 1: As shown in Figure 1, when the visual signal is good, the vehicle-mounted camera is mounted pointing vertically downward to capture video of the road surface traversed by the mobile robot as it moves. The SURF algorithm extracts the SURF feature points in two adjacent frames of the video, and the position coordinates of the feature points in the image coordinate system are recorded. The SURF feature points in the two frames are then matched by the nearest neighbor matching method to determine the velocity of the camera in the horizontal plane, V_x and V_y;
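The matching and velocity computation in Step 1 can be illustrated with a minimal sketch. It is not the patent's implementation: it assumes the SURF descriptors and keypoint coordinates have already been extracted by some SURF implementation, and it uses synthetic arrays in their place. The function names, the median used to suppress mismatches, the pinhole scaling by camera height and focal length, and the sign convention (ground features appear to move opposite to the camera) are all assumptions made for illustration.

```python
import numpy as np


def match_nearest_neighbor(desc_a, desc_b):
    """Match each descriptor in frame A to its nearest neighbor in frame B.

    Returns an array of index pairs (i, j), where desc_b[j] is the
    descriptor closest (Euclidean distance) to desc_a[i].
    """
    dists = np.linalg.norm(desc_a[:, None, :] - desc_b[None, :, :], axis=2)
    nearest = np.argmin(dists, axis=1)
    return np.column_stack([np.arange(len(desc_a)), nearest])


def planar_velocity(pts_a, pts_b, matches, dt, height_m, focal_px):
    """Estimate horizontal camera velocity from matched feature points.

    Assumes a downward-looking pinhole camera at a constant height above
    a flat ground plane (hypothetical parameters height_m and focal_px).
    """
    # Pixel displacement of every matched feature between the two frames.
    flow = pts_b[matches[:, 1]] - pts_a[matches[:, 0]]
    # The median is an assumed, simple way to reduce the effect of mismatches.
    median_flow = np.median(flow, axis=0)
    # Scale pixels to metres on the ground and divide by the frame interval.
    # The minus sign reflects that static ground points move opposite to the camera.
    return -median_flow * height_m / (focal_px * dt)


# Synthetic stand-in for SURF output: 20 features with 64-dimensional descriptors.
rng = np.random.default_rng(0)
desc_a = rng.normal(size=(20, 64))
pts_a = rng.uniform(0.0, 640.0, size=(20, 2))

# Frame B sees the same features in shuffled order, shifted by (-4, 2) pixels.
perm = rng.permutation(20)
desc_b = desc_a[perm] + rng.normal(scale=0.01, size=(20, 64))
pts_b = pts_a[perm] + np.array([-4.0, 2.0])

matches = match_nearest_neighbor(desc_a, desc_b)
v_x, v_y = planar_velocity(pts_a, pts_b, matches, dt=0.1, height_m=0.5, focal_px=500.0)
print(v_x, v_y)
```

With real imagery, the synthetic arrays would be replaced by the keypoint coordinates and descriptors returned by a SURF detector for two consecutive frames, and the camera height and focal length would come from the actual installation and calibration.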

[0030] Let the vertical distance between the projection center of the vehicle-mounted camera and the ground be Z_R, the normalized f...
