Method for adaptively quantizing optical flow features on complex video monitoring scenes

An adaptive quantization technology for video surveillance, applicable to television, color television, closed-circuit television systems, and similar fields. It addresses the problems of increased data volume, failure to consider the motion distribution characteristics of video surveillance scenes, and loss of spatial position and direction resolution, with the aim of a discriminative quantization result.

Examples

Embodiment 1

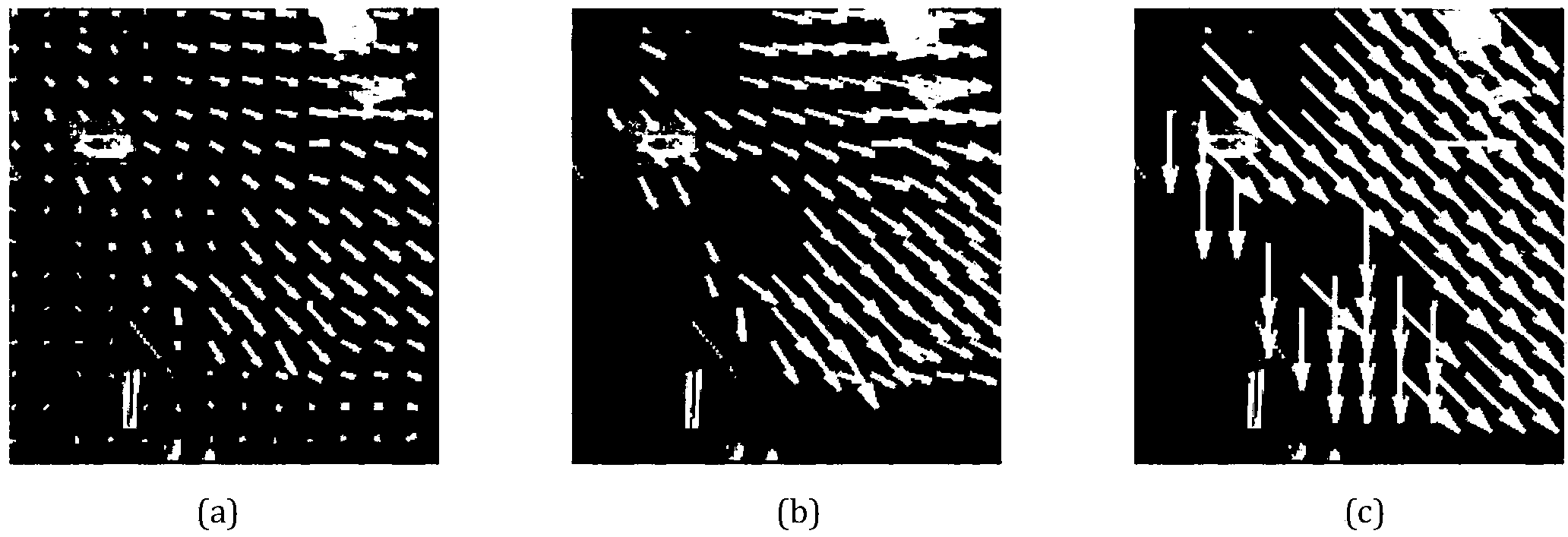

[0036] The video sequence used in this embodiment comes from the QMUL (Queen Mary University of London) traffic database, a database dedicated to the analysis of complex video surveillance scenes. The frame rate is 25 fps and the resolution is 360×288. Figure 2 shows the video surveillance scene.

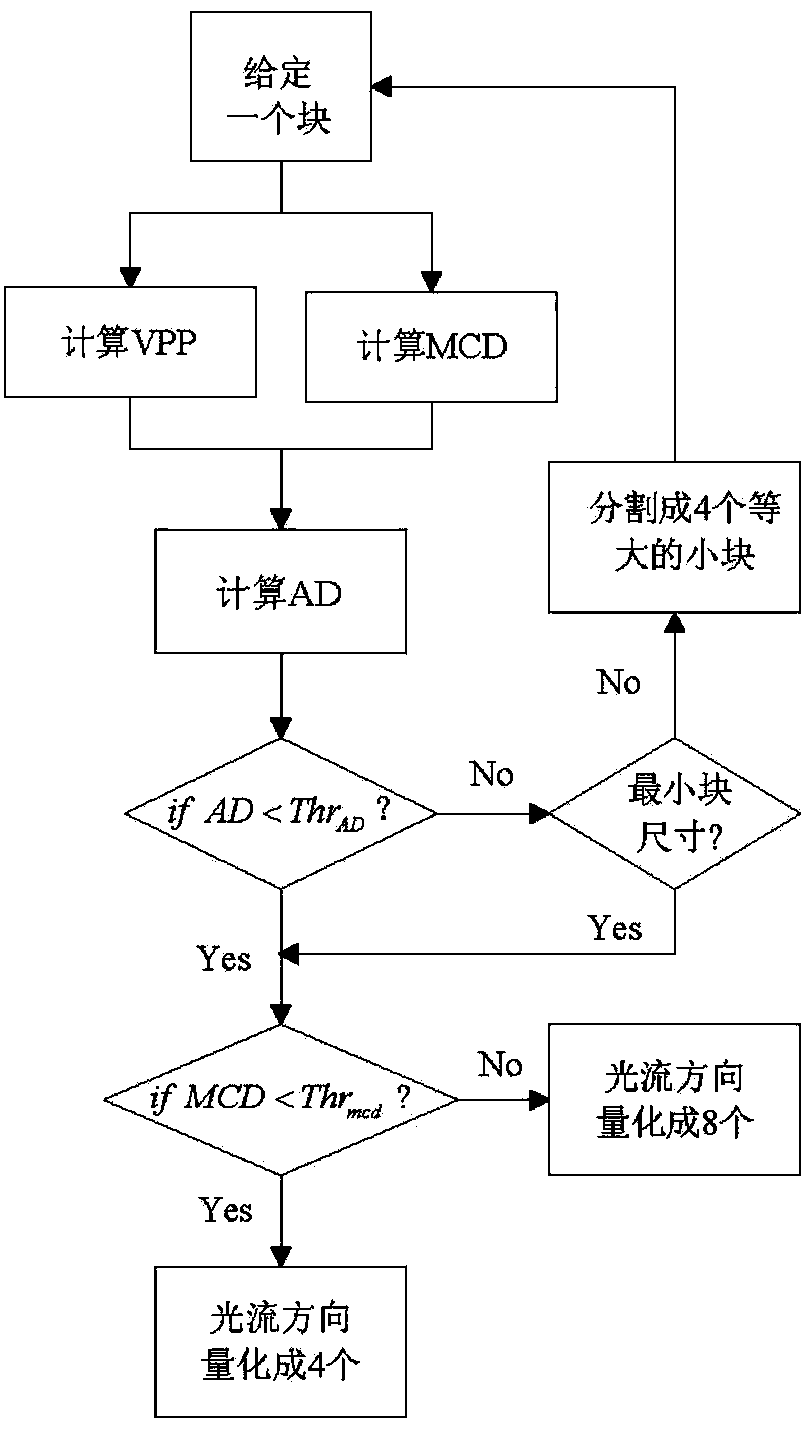

[0037] As shown in Figure 1, this embodiment includes the following specific steps:

[0038] Step 1: Probabilistic denoising of optical flow features. The specific steps are:

[0039] 1.1) Count the number of optical flow features generated at each spatial point (x, y) in the video scene and normalize the counts, where P(x, y) represents the occurrence probability of the optical flow feature at the spatial point (x, y); A(x_i, y_i) ... Com(a, b) = ...
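The counting and normalization in step 1.1 can be illustrated with a minimal Python sketch. The normalization formula is not given in the text here, so the sketch assumes the simplest reading: P(x, y) is the feature count at (x, y) divided by the total number of features in the scene. The function name `occurrence_probability` and the sample coordinates are hypothetical, and the 360×288 grid matches the resolution stated in [0036].

```python
import numpy as np

def occurrence_probability(points, width, height):
    """Count optical flow features per pixel and normalize the counts.

    points: iterable of (x, y) integer pixel coordinates, one entry per
    detected optical flow feature, accumulated over all frames.
    """
    counts = np.zeros((height, width), dtype=np.float64)
    pts = np.asarray(points, dtype=np.int64).reshape(-1, 2)
    # np.add.at accumulates correctly when the same pixel occurs repeatedly.
    np.add.at(counts, (pts[:, 1], pts[:, 0]), 1.0)
    total = counts.sum()
    # Assumption: normalize by the total number of features in the scene.
    return counts / total if total > 0 else counts

# Hypothetical feature locations on a 360x288 frame.
features = [(10, 20), (10, 20), (11, 20), (300, 100)]
P = occurrence_probability(features, width=360, height=288)
```

Under this assumption the map sums to 1, so a later denoising step could compare P(x, y) against a threshold to discard points where optical flow features rarely occur.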
