Vision-based integrated navigation robot and navigation method

A technology that combines two navigation methods into an integrated scheme. It is applied in areas such as navigation, mapping, and two-dimensional position/channel control. It addresses the accumulated error and reduced reliability of existing navigation methods, as well as the susceptibility of visual navigation to lighting interference.
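The summary does not say how the two navigation sources are combined. The following is a minimal sketch only. It assumes that the drifting estimate comes from odometry dead reckoning and that vision occasionally supplies an absolute position fix. The encoder 5 and gyroscope 6 listed in [0028] suggest the first assumption, but the text does not state either one. Under those assumptions, a fixed-weight blend shows why combining the sources limits accumulated error while tolerating vision dropouts. `Position2D`, `fuse_position`, and the weight `alpha` are hypothetical names, not taken from the patent.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Position2D:
    """Planar position estimate in metres (hypothetical type, not from the patent)."""
    x: float
    y: float


def fuse_position(
    dead_reckoned: Position2D,
    visual_fix: Optional[Position2D],
    alpha: float = 0.3,
) -> Position2D:
    """Pull a drifting dead-reckoned estimate toward an absolute visual fix.

    alpha is an assumed, fixed trust weight for the visual fix:
    0 ignores vision, 1 replaces the dead-reckoned estimate outright.
    When no visual fix is available (for example, because lighting
    interference defeated the camera), the dead-reckoned estimate is
    returned unchanged, so the robot keeps navigating on odometry alone.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    if visual_fix is None:
        return dead_reckoned
    return Position2D(
        x=(1.0 - alpha) * dead_reckoned.x + alpha * visual_fix.x,
        y=(1.0 - alpha) * dead_reckoned.y + alpha * visual_fix.y,
    )
```

For example, `fuse_position(Position2D(1.0, 0.0), Position2D(0.0, 0.0), alpha=0.5)` returns `Position2D(x=0.5, y=0.0)`. A fixed weight is the simplest possible choice. A Kalman filter, which weights each source by its estimated uncertainty, is the more usual design.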


Embodiment Construction

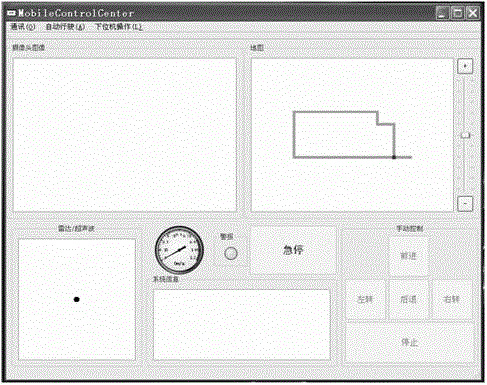

[0028] As shown in Figure 1, the robot of the present invention is a self-navigating robot that can adapt to various environments. It adopts a four-wheel-drive trolley structure and mainly comprises a car body, a color digital camera 1, an ultrasonic sensor 2, wheels 3, a motor-reducer assembly 4, a photoelectric encoder 5, a gyroscope 6, a wireless network card 7, a notebook computer 8 (serving as the console), a motion controller, an industrial computer, an image acquisition card, a data acquisition and processing board, a lithium battery, and other components.

[0029] The robot is a four-wheel-drive vehicle. Each wheel 3 is fitted with its own motor-reducer assembly 4, and the four motors output torque simultaneously, giving the robot sufficient power and improving its motion performance. The robot turns by differential steering. The color digital camera 1 is mounted at the front end of the top of the car body and collects video image information through the colo...
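Paragraph [0029] names differential steering but gives no kinematic model. The sketch below shows how per-side photoelectric-encoder counts can be turned into a pose update for such a vehicle. It rests on several assumptions. It treats the four-wheel car as a two-track differential drive, assuming the two wheels on each side turn at the same speed. It also uses a wheel radius, track width, and encoder resolution that are hypothetical, since the source gives none of them. `Pose`, `ticks_to_distance`, `update_pose`, and the three constants are illustrative names, not from the patent.

```python
import math
from dataclasses import dataclass

# Hypothetical parameters: the patent excerpt gives no dimensions or encoder resolution.
WHEEL_RADIUS_M = 0.10
TRACK_WIDTH_M = 0.40
COUNTS_PER_REV = 1000


@dataclass
class Pose:
    x: float = 0.0      # metres
    y: float = 0.0      # metres
    theta: float = 0.0  # heading in radians, counter-clockwise positive


def ticks_to_distance(ticks: int) -> float:
    """Convert encoder counts on one side to wheel travel in metres."""
    return 2.0 * math.pi * WHEEL_RADIUS_M * ticks / COUNTS_PER_REV


def update_pose(pose: Pose, left_ticks: int, right_ticks: int) -> Pose:
    """Differential-steering dead reckoning over one sampling interval.

    Assumes both wheels on a side turn together (two-track model) and
    ignores wheel slip during turns.
    """
    d_left = ticks_to_distance(left_ticks)
    d_right = ticks_to_distance(right_ticks)
    d_center = (d_left + d_right) / 2.0           # travel of the body centre
    d_theta = (d_right - d_left) / TRACK_WIDTH_M  # heading change from the side speed difference
    mid_theta = pose.theta + d_theta / 2.0        # midpoint heading for the straight-line step
    new_theta = pose.theta + d_theta
    return Pose(
        x=pose.x + d_center * math.cos(mid_theta),
        y=pose.y + d_center * math.sin(mid_theta),
        theta=math.atan2(math.sin(new_theta), math.cos(new_theta)),  # wrap to (-pi, pi]
    )
```

Equal counts on both sides move the robot straight ahead. Opposite counts spin it in place, which is the differential turning the paragraph describes. Skid-steered four-wheel vehicles slip while turning, so the encoder-derived `d_theta` is the least reliable term here. Practical systems commonly take the heading change from a gyroscope (such as gyroscope 6) instead, but the excerpt does not say this robot does so.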
