Shared-memory-based method for realizing multi-process GPU (Graphics Processing Unit) sharing

A shared-memory, multi-process technique in the field of GPU sharing, in which multiple processes share a GPU and use shared memory for data communication. It addresses problems such as multiple processes being unable to share the use of a GPU.


Embodiment Construction

[0027] Detailed description of the embodiments

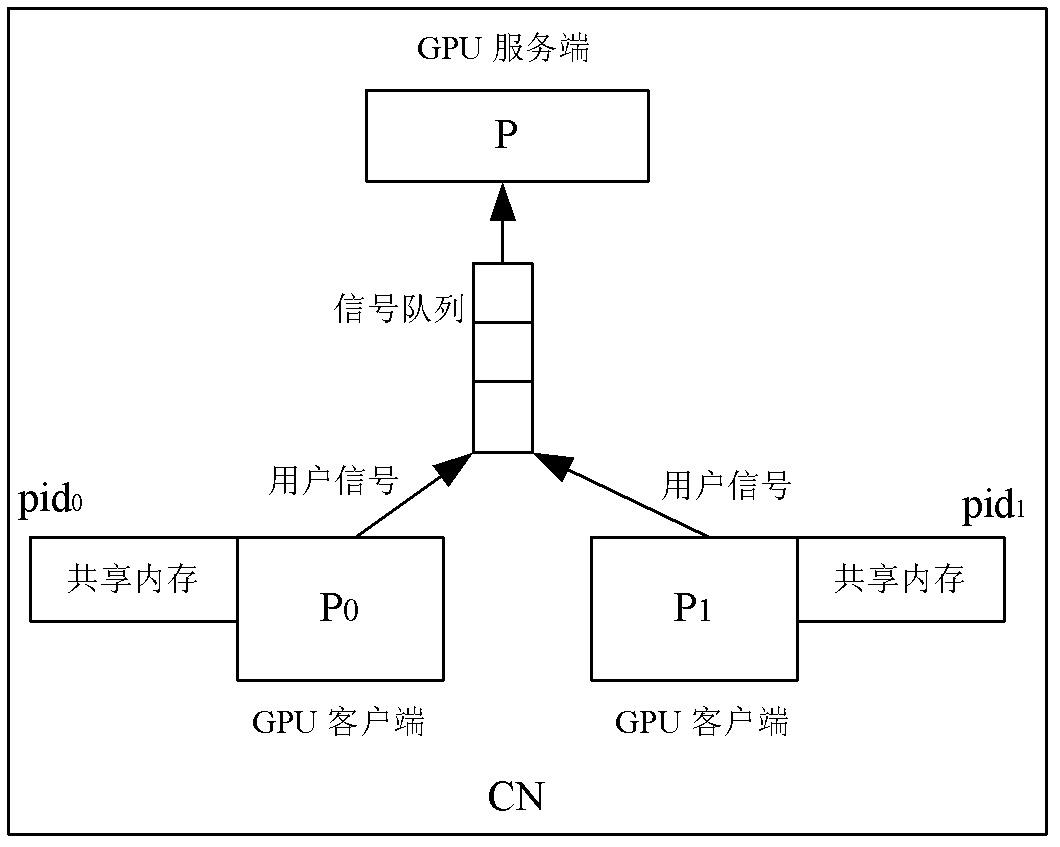

[0028] Figure 3 is a schematic diagram of a GPU shared by multiple processes in the present invention.

[0029] Two GPU clients and one GPU server run on a computing node. Each GPU client allocates its own memory space, which is identified by the client's process ID (pid). To use the GPU, a client sends a user signal, which enters the signal queue. The GPU server responds to each user signal in the queue by entering its signal-processing function, where it uses the GPU for accelerated computation.
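The request flow in paragraph [0029] can be sketched as a small single-process simulation. This is only an illustration under stated assumptions, not the patented implementation: the signal queue is modeled with a plain `deque` instead of real operating-system user signals, the "GPU" work is a CPU stand-in that squares each value, the client ids `1001` and `1002` stand in for real process ids, and the names `region_name`, `GpuClient`, `serve`, and `SLOTS` are hypothetical.

```python
import os
import struct
from collections import deque
from multiprocessing import shared_memory

SLOTS = 4                 # hypothetical: number of float64 values per client
FORMAT = f"{SLOTS}d"      # struct layout of one client buffer


def region_name(pid):
    # Hypothetical naming scheme: one shared region per client, keyed by pid.
    # The host pid is added only to avoid name clashes between demo runs.
    return f"gpushare_{os.getpid()}_{pid}"


class GpuClient:
    """Simulated GPU client that owns a shared-memory region named by its pid."""

    def __init__(self, pid, values):
        self.pid = pid
        self.shm = shared_memory.SharedMemory(
            name=region_name(pid), create=True, size=struct.calcsize(FORMAT)
        )
        struct.pack_into(FORMAT, self.shm.buf, 0, *values)

    def request(self, signal_queue):
        # Stand-in for sending a user signal: enqueue this client's pid.
        signal_queue.append(self.pid)

    def result(self):
        return list(struct.unpack_from(FORMAT, self.shm.buf, 0))

    def close(self):
        self.shm.close()
        self.shm.unlink()


def serve(signal_queue):
    """Simulated GPU server: handle queued requests one at a time."""
    while signal_queue:
        pid = signal_queue.popleft()
        shm = shared_memory.SharedMemory(name=region_name(pid))  # attach by pid
        try:
            data = struct.unpack_from(FORMAT, shm.buf, 0)
            out = [x * x for x in data]  # CPU stand-in for a GPU kernel
            struct.pack_into(FORMAT, shm.buf, 0, *out)
        finally:
            shm.close()


def demo():
    signal_queue = deque()
    clients = [GpuClient(1001, [1, 2, 3, 4]), GpuClient(1002, [5, 6, 7, 8])]
    try:
        for client in clients:
            client.request(signal_queue)
        serve(signal_queue)
        return {client.pid: client.result() for client in clients}
    finally:
        for client in clients:
            client.close()


if __name__ == "__main__":
    print(demo())
```

Because the server drains the queue one request at a time, the clients' GPU work is serialized through a single server, which is how the two clients share one device in this sketch. In a real multi-process setup, each client and the server would be separate processes and the notification would be an actual user signal.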

[0030] Figure 4 is an overall flowchart of the present invention.

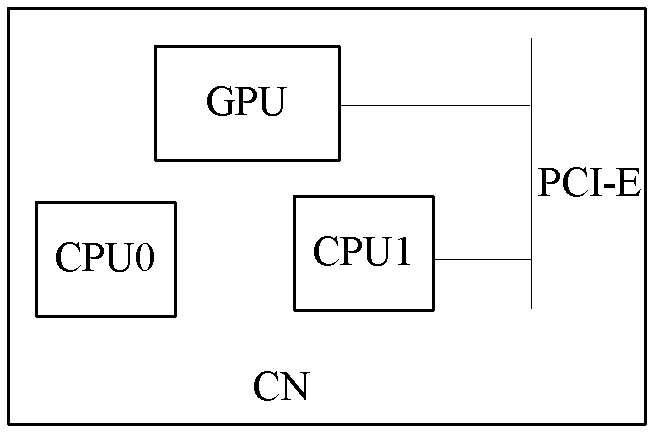

[0031] To test the effect of the present invention, the School of Computer Science of the National University of Defense Technology ran an experimental verification on a single heterogeneous CPU+GPU computing node. The node is configured as follows: two Intel Xeon 5670 six-core CPUs, each with ...
