Method for modeling background based on camera response function in automatic gain scene

A technology relating to automatic gain and camera response, applied in the field of background modeling. It addresses the problem that, when the gray values of pixels over a large area change rapidly, it cannot be determined whether the change is foreground or a large error, which hinders fully automatic implementation of the background difference method.


Detailed Description of the Embodiments

[0097] The present invention will be described in detail below with reference to the accompanying drawings, and the described embodiments are intended to facilitate understanding of the present invention.

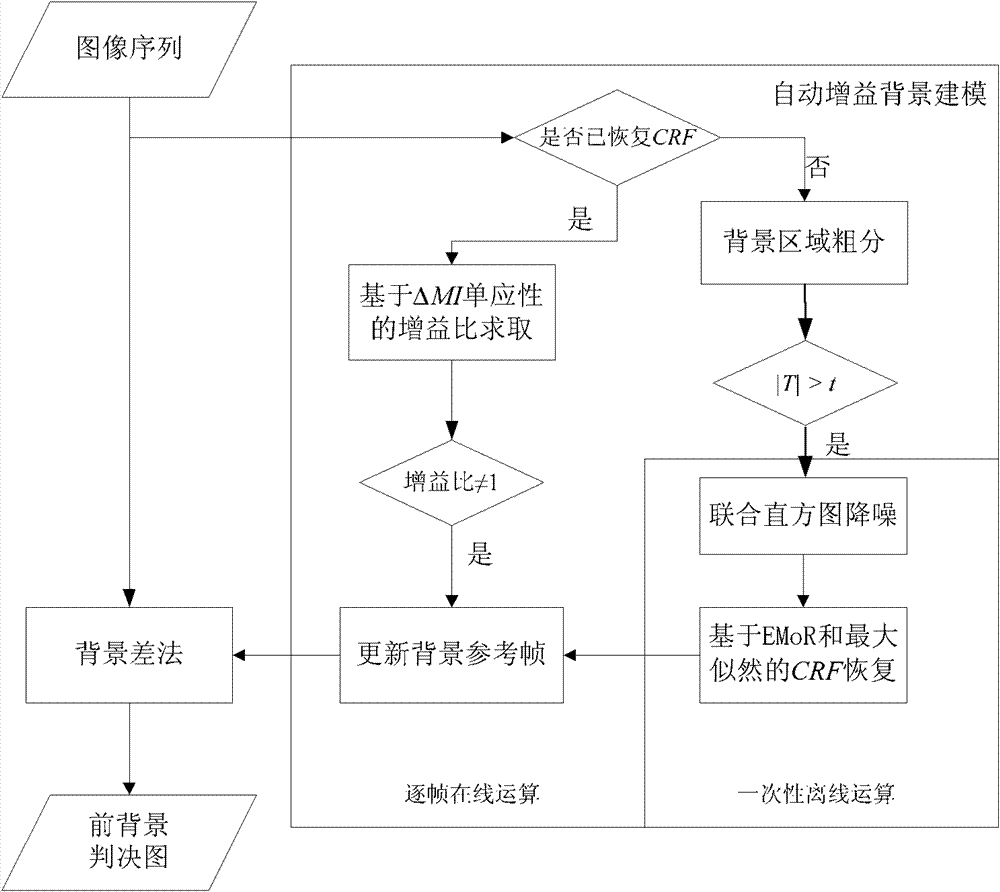

[0098] Figure 1 is a flowchart of the background modeling method based on the camera response function in an automatic gain scene. Following the flow sequence, each step of the method is implemented as follows:

[0099] 1. Obtain image sequence

[0100] The system first acquires an image sequence and feeds it to two parallel modules: a background difference module and an automatic gain background modeling module. The automatic gain background modeling module implements the method of the present invention.
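The two-branch structure of step 1 can be sketched as follows. This is a minimal illustration under stated assumptions, not the patent's implementation: frames are grayscale images flattened to Python lists, the background difference module is a generic running-average background subtraction with hypothetical parameters `alpha` and `threshold`, and the automatic gain branch is a placeholder callable because its internals are not given in this excerpt. Both modules receive every frame; they are called one after the other here for simplicity.

```python
from typing import Callable, Iterable, List

Frame = List[float]  # hypothetical: one grayscale frame flattened to a list of gray values


class BackgroundDifference:
    """Generic running-average background subtraction (a stand-in, not the patent's module)."""

    def __init__(self, alpha: float = 0.05, threshold: float = 25.0) -> None:
        self.alpha = alpha          # hypothetical learning rate for the background update
        self.threshold = threshold  # hypothetical gray-level difference threshold
        self.background: Frame = []

    def process(self, frame: Frame) -> List[bool]:
        if not self.background:
            # Initialise the background model with the first frame; nothing is foreground yet.
            self.background = list(frame)
            return [False] * len(frame)
        # A pixel is foreground when it differs from the background by more than the threshold.
        mask = [abs(p - b) > self.threshold for p, b in zip(frame, self.background)]
        # Blend only background pixels into the model so foreground is not absorbed at once.
        self.background = [
            b if fg else (1 - self.alpha) * b + self.alpha * p
            for p, b, fg in zip(frame, self.background, mask)
        ]
        return mask


def run_pipeline(frames: Iterable[Frame],
                 bg_module: BackgroundDifference,
                 gain_module: Callable[[Frame], None]) -> List[List[bool]]:
    """Send every frame to both branches and collect the background difference masks."""
    masks = []
    for frame in frames:
        gain_module(frame)                      # automatic gain background modeling branch (placeholder)
        masks.append(bg_module.process(frame))  # background difference branch
    return masks


# Usage: a uniform gain jump from 100 to 130 exceeds the threshold of 25 at every pixel.
seen: List[Frame] = []
masks = run_pipeline([[100.0] * 4, [100.0] * 4, [130.0] * 4], BackgroundDifference(), seen.append)
print(masks[2])  # [True, True, True, True]
```

The usage line reproduces the failure mode described in the summary: a global gain change shifts every pixel past a fixed threshold, so a plain background difference marks the whole frame as foreground and cannot tell it apart from a genuine large-scale change.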

[0101] 2. Determine whether the camera response function has been recovered. If it has not, construct the critical false detection objective function T and set the automatic gain critical false detection threshold t. The automatic gain critical fa...
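Why a recovered camera response function (CRF) helps under automatic gain can be illustrated with a small sketch. It assumes a gamma-type CRF f(E) = 255·E^(1/γ) with a hypothetical γ = 2.2 (the patent recovers its CRF rather than assuming a form) and a single global gain ratio k between frames; all helper names are hypothetical. With the CRF known, the expected background after a gain change is f(k·f⁻¹(B)), so a uniform brightness change can be predicted instead of being flagged as foreground. The sketch does not reproduce the critical false detection objective function T or the threshold t, whose definitions are truncated in this excerpt.

```python
import statistics
from typing import List

GAMMA = 2.2  # assumed CRF exponent; hypothetical, for illustration only


def crf(irradiance: float, gamma: float = GAMMA) -> float:
    """Assumed CRF f: normalised irradiance in [0, 1] -> gray level in [0, 255]."""
    e = min(max(irradiance, 0.0), 1.0)  # the sensor saturates outside [0, 1]
    return 255.0 * e ** (1.0 / gamma)


def inverse_crf(intensity: float, gamma: float = GAMMA) -> float:
    """Inverse of the assumed CRF: gray level -> normalised irradiance."""
    return (min(max(intensity, 0.0), 255.0) / 255.0) ** gamma


def estimate_gain(background: List[float], frame: List[float], gamma: float = GAMMA) -> float:
    """Estimate the global gain ratio k as the median per-pixel irradiance ratio.

    Raises statistics.StatisticsError if no pixel is usable.
    """
    ratios = [
        inverse_crf(p, gamma) / inverse_crf(b, gamma)
        for b, p in zip(background, frame)
        if b > 0.0 and p < 255.0  # skip black background pixels and saturated frame pixels
    ]
    return statistics.median(ratios)


def compensate_background(background: List[float], gain: float, gamma: float = GAMMA) -> List[float]:
    """Predict the background after a gain change: f(k * f^-1(B)) for every pixel."""
    return [crf(gain * inverse_crf(b, gamma), gamma) for b in background]


# Usage: synthesise a frame of the same scene at gain x1.5, then recover k from it.
background = [100.0, 150.0, 200.0, 50.0]
frame = compensate_background(background, 1.5)
k = estimate_gain(background, frame)
predicted = compensate_background(background, k)
```

The median of per-pixel irradiance ratios is used so that a minority of genuine foreground pixels does not bias the gain estimate; this is a common robust choice and not necessarily the one the patent makes. Differencing the frame against `predicted` rather than the raw background removes the gain-induced change, while pixels that still deviate remain candidates for foreground.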
