Method for allocating large contiguous kernel memory

A kernel memory-management technique that addresses the long, time-consuming allocation applications face when requesting large amounts of memory, and shortens the allocation time.

Embodiment Construction

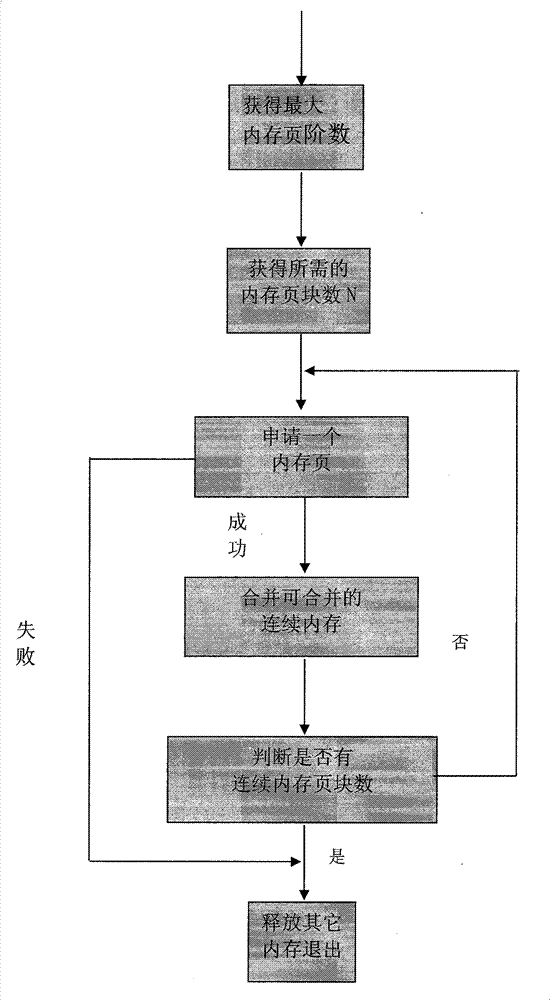

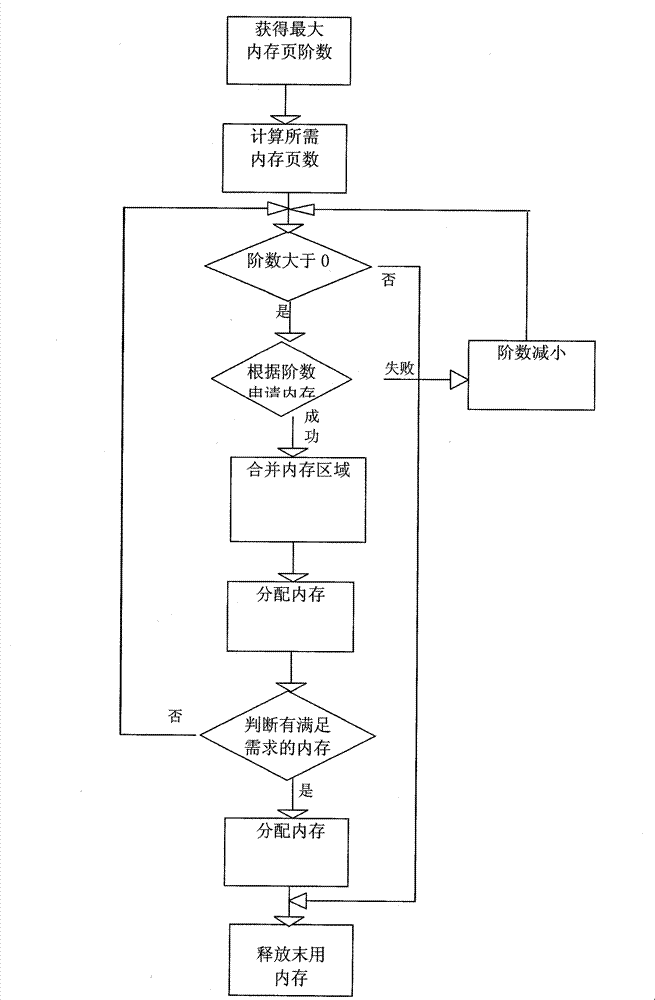

[0018] 1. Obtain the maximum memory page order that the kernel supports for a single allocation;

[0019] A single memory request can obtain at most 2^order memory pages, that is, the memory page size multiplied by 2 to the power of the order. Requesting at this order ensures that the addresses of each memory block are contiguous and that every request is for the largest memory block that can be requested.

[0020] 2. Calculate the number of required memory pages N;

[0021] N is the quotient of the required memory size and the memory page size, rounded up to the nearest integer.

[0022] 3. Check the order: if it is negative, go to step 6; otherwise, request memory.

[0023] The order may decrease as the system's free memory decreases, and it is -1 when no free pages are available for allocation. While the order is non-negative, allocation continues.

[0024] 4. If the memory request fails, reduce the order and go to step 3; if the applic...
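The control flow of steps 2 to 4 can be modeled in user space as the short Python sketch below. It is not kernel code and not the patented implementation. The names `PAGE_SIZE`, `pages_needed`, `allocate_blocks`, and `try_alloc` are hypothetical, and the 4096-byte page size is an assumption. The text above breaks off partway through step 4, so what happens after a successful request (record the block, subtract its pages from N, and request again at the same order) is also an assumption. The sketch does not model how contiguity across blocks is ensured.

```python
PAGE_SIZE = 4096  # assumed page size in bytes (hypothetical default)


def pages_needed(size_bytes, page_size=PAGE_SIZE):
    """Step 2: quotient of required size and page size, rounded up."""
    return -(-size_bytes // page_size)  # integer ceiling division


def allocate_blocks(size_bytes, max_order, try_alloc, page_size=PAGE_SIZE):
    """Model of steps 3 and 4.

    try_alloc(order) is a hypothetical stand-in for the kernel request of
    2**order pages; it returns True on success and False on failure.
    Returns (orders of the blocks obtained, pages still missing).
    """
    remaining = pages_needed(size_bytes, page_size)
    order = max_order
    blocks = []
    # Step 3: a negative order means nothing more can be requested.
    while remaining > 0 and order >= 0:
        if try_alloc(order):
            # Assumed success path: keep the block and count its pages.
            blocks.append(order)
            remaining -= 1 << order
        else:
            # Step 4, failure path: reduce the order and check it again.
            order -= 1
    return blocks, max(remaining, 0)
```

For example, with a stub that only succeeds for orders up to 2, a request for 11 pages starting at order 4 falls back twice and is then served by three blocks of 4 pages each. Because the sketch never requests less than 2^order pages, the last block may cover more pages than are still needed.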
