Depth estimation method based on edge pixel features

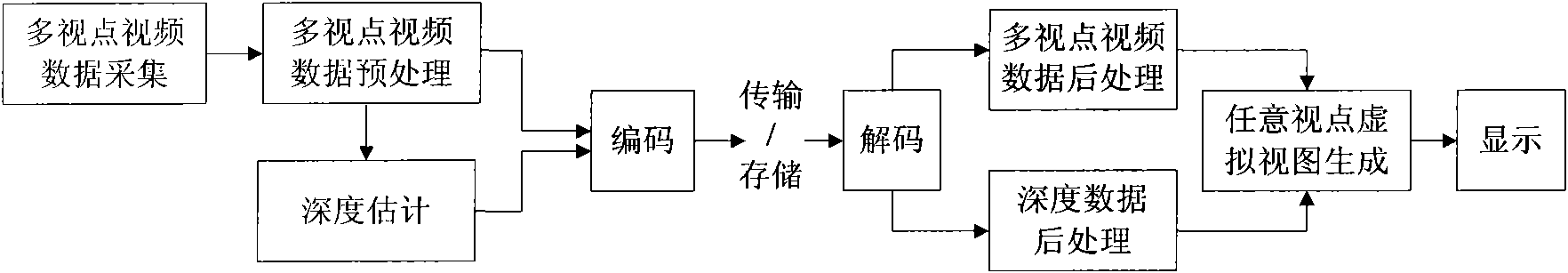

A depth estimation technology applied in the field of communications. It addresses problems such as reduced estimation accuracy, degraded virtual-view quality, and large parallax differences. It produces fewer noise points and improves both subjective and objective quality.


Embodiment Construction

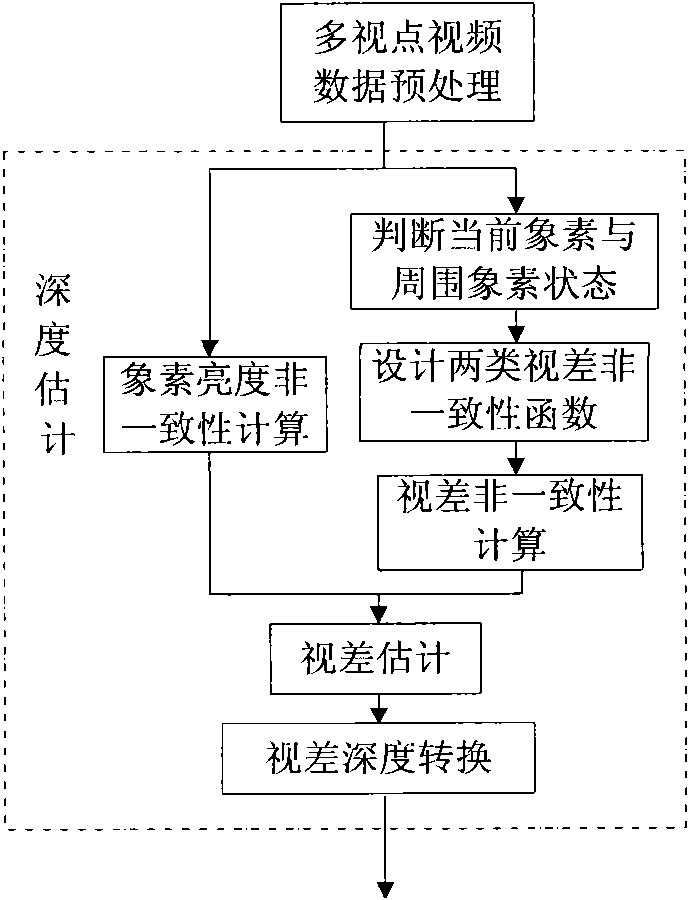

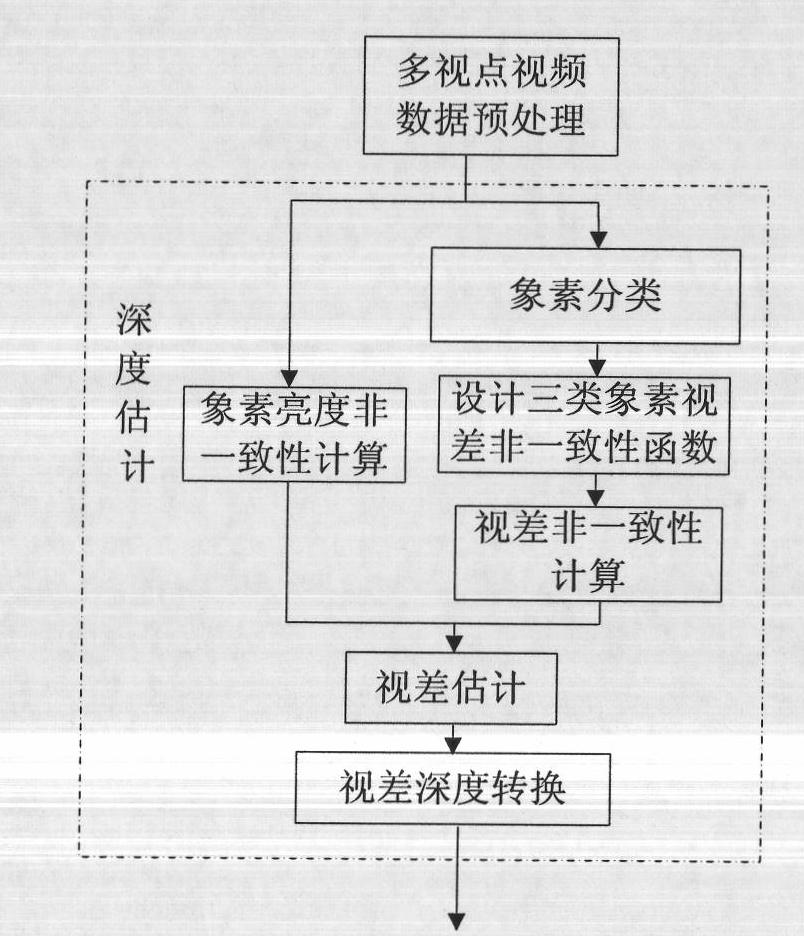

[0057] Referring to image 3, the depth estimation method of the present invention comprises the following steps:

[0058] Step 1: classify each pixel in the image according to its position relative to the edges of objects in the image.

[0059] 1A) If the current pixel lies on an object edge in the image, it is assigned to the first class, i.e., edge pixels;

[0060] 1B) If the current pixel lies next to an object edge, with at least one of its adjacent pixels on that edge, it is assigned to the second class, i.e., near-edge pixels;

[0061] 1C) If the current pixel is not on an object edge and none of its adjacent pixels lies on an object edge, it is assigned to the third class, i.e., non-edge pixels.
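The three-way classification in Step 1 can be sketched as follows. This is a minimal illustration under stated assumptions, not the patented implementation. It assumes an edge map is already available as a 2-D boolean grid, since the excerpt does not say how edges are detected. It also treats "surrounding adjacent pixels" as the 8-connected neighborhood. The names `classify_pixels`, `EDGE`, `NEAR_EDGE`, `NON_EDGE` and `NEIGHBOR_OFFSETS` are hypothetical. Labels 1, 2 and 3 correspond to the first, second and third pixel classes.

```python
from typing import List

# Hypothetical label names; the source only speaks of the first/second/third type.
EDGE = 1        # 1A: pixel lies on an object edge
NEAR_EDGE = 2   # 1B: pixel is off the edge but has an edge pixel among its neighbors
NON_EDGE = 3    # 1C: neither the pixel nor any neighbor lies on an edge

# Assumption: "surrounding adjacent pixels" means the 8-connected neighborhood.
NEIGHBOR_OFFSETS = [(-1, -1), (-1, 0), (-1, 1),
                    (0, -1),           (0, 1),
                    (1, -1),  (1, 0),  (1, 1)]


def classify_pixels(edge_map: List[List[bool]]) -> List[List[int]]:
    """Label every pixel as EDGE, NEAR_EDGE or NON_EDGE.

    edge_map[y][x] is True when pixel (x, y) lies on an object edge.
    Producing that map (e.g. with an edge detector) is outside this sketch.
    """
    height = len(edge_map)
    width = len(edge_map[0]) if height else 0
    labels = [[NON_EDGE] * width for _ in range(height)]

    for y in range(height):
        for x in range(width):
            if edge_map[y][x]:
                labels[y][x] = EDGE
                continue
            # Look for an edge pixel among in-bounds neighbors;
            # positions outside the image are simply ignored.
            for dy, dx in NEIGHBOR_OFFSETS:
                ny, nx = y + dy, x + dx
                if 0 <= ny < height and 0 <= nx < width and edge_map[ny][nx]:
                    labels[y][x] = NEAR_EDGE
                    break
    return labels


if __name__ == "__main__":
    # A 4x5 image whose only edge is the vertical line in column 1.
    edges = [[False, True, False, False, False] for _ in range(4)]
    for row in classify_pixels(edges):
        print(row)  # each row prints [2, 1, 2, 3, 3]
```

To get a 4-connected variant, replace `NEIGHBOR_OFFSETS` with the four axis-aligned offsets. This excerpt does not state which neighborhood the method actually uses.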

[0062] Step 2: according to the depth features of the object edge pixels in th...
