Method for partial reference evaluation of wireless videos based on space-time domain feature extraction

A partial-reference evaluation method for wireless video, based on space-time domain feature extraction and applied in the field of video quality evaluation. It addresses problems such as large data volume, difficulty in building models, and incomplete research on the human visual system (HVS).



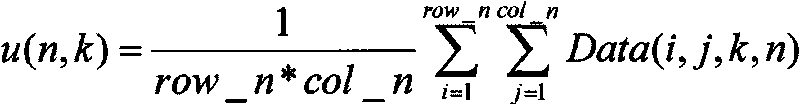


Embodiment Construction

[0100] The present invention is mainly applied to 3G wireless video services such as videophone, as well as digital broadcasting and television services. The system consists of three main parts: the sending end, the wireless channel, and the receiving end.

[0101] The sending device must be equipped with memory, a real-time processing chip, a camera, and a wireless transmitting module. In practical applications, a typical sending-end device is a mobile terminal, and the video comes from a camera file on the mobile phone. The sending end must establish two independent channels. The main channel requires wide bandwidth and a high rate for real-time transmission of the video data stream. The secondary (auxiliary) channel is mainly used to transmit the extracted video feature parameters. These parameters are small in data volume yet highly representative, so the auxiliary channel has lower requirements on bandwidth and rate. These parameters mainly ...
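The two-channel arrangement can be illustrated with a minimal sketch. The specific feature parameters are not given in this excerpt, so the code assumes two stand-in features per frame: a spatial one (spread of a crude gradient magnitude) and a temporal one (spread of the frame difference). The function names `spatial_feature`, `temporal_feature`, `pack_features`, and `unpack_features`, and the 12-byte payload layout, are hypothetical and are not taken from the patent.

```python
import struct
from statistics import pstdev

# Hypothetical auxiliary-channel payload: frame index (uint32) plus two
# float32 features, network byte order, 12 bytes in total.
PAYLOAD_FORMAT = "!Iff"


def spatial_feature(frame):
    """Stand-in spatial feature: std dev of a crude gradient magnitude.

    `frame` is a list of rows of luma values (assumed layout).
    """
    height, width = len(frame), len(frame[0])
    grads = []
    for y in range(height - 1):
        for x in range(width - 1):
            gx = frame[y][x + 1] - frame[y][x]
            gy = frame[y + 1][x] - frame[y][x]
            grads.append((gx * gx + gy * gy) ** 0.5)
    return pstdev(grads)


def temporal_feature(prev_frame, cur_frame):
    """Stand-in temporal feature: std dev of the pixel-wise frame difference."""
    diffs = [
        cur - prev
        for prev_row, cur_row in zip(prev_frame, cur_frame)
        for prev, cur in zip(prev_row, cur_row)
    ]
    return pstdev(diffs)


def pack_features(frame_index, spatial, temporal):
    """Serialize one frame's features for the auxiliary channel."""
    return struct.pack(PAYLOAD_FORMAT, frame_index, spatial, temporal)


def unpack_features(payload):
    """Recover (frame_index, spatial, temporal) at the receiving end."""
    return struct.unpack(PAYLOAD_FORMAT, payload)


if __name__ == "__main__":
    # Two tiny synthetic 4x4 luma frames; real frames would be far larger.
    frame_a = [[10, 10, 10, 10], [10, 50, 50, 10], [10, 50, 50, 10], [10, 10, 10, 10]]
    frame_b = [[10, 10, 10, 10], [10, 10, 50, 50], [10, 10, 50, 50], [10, 10, 10, 10]]

    payload = pack_features(1, spatial_feature(frame_b), temporal_feature(frame_a, frame_b))
    main_channel_bytes = len(frame_b) * len(frame_b[0])  # one byte per luma sample
    print("main channel bytes per frame:", main_channel_bytes)
    print("auxiliary channel bytes per frame:", len(payload))
    print("decoded at receiver:", unpack_features(payload))
```

The auxiliary payload has a fixed size regardless of resolution, while the main-channel data grows with the frame size. This is why, under these assumptions, the auxiliary channel can tolerate a much lower bandwidth and rate than the main channel.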
