Unmanned aircraft landing navigation system based on vision

A vision-based technology for the autonomous landing of unmanned aircraft. It is applied in navigation, surveying, and navigation computing tools, among other fields. It addresses problems such as low practicality, too many assumptions about the landing environment, and unreasonable sensor combinations.

Embodiment Construction

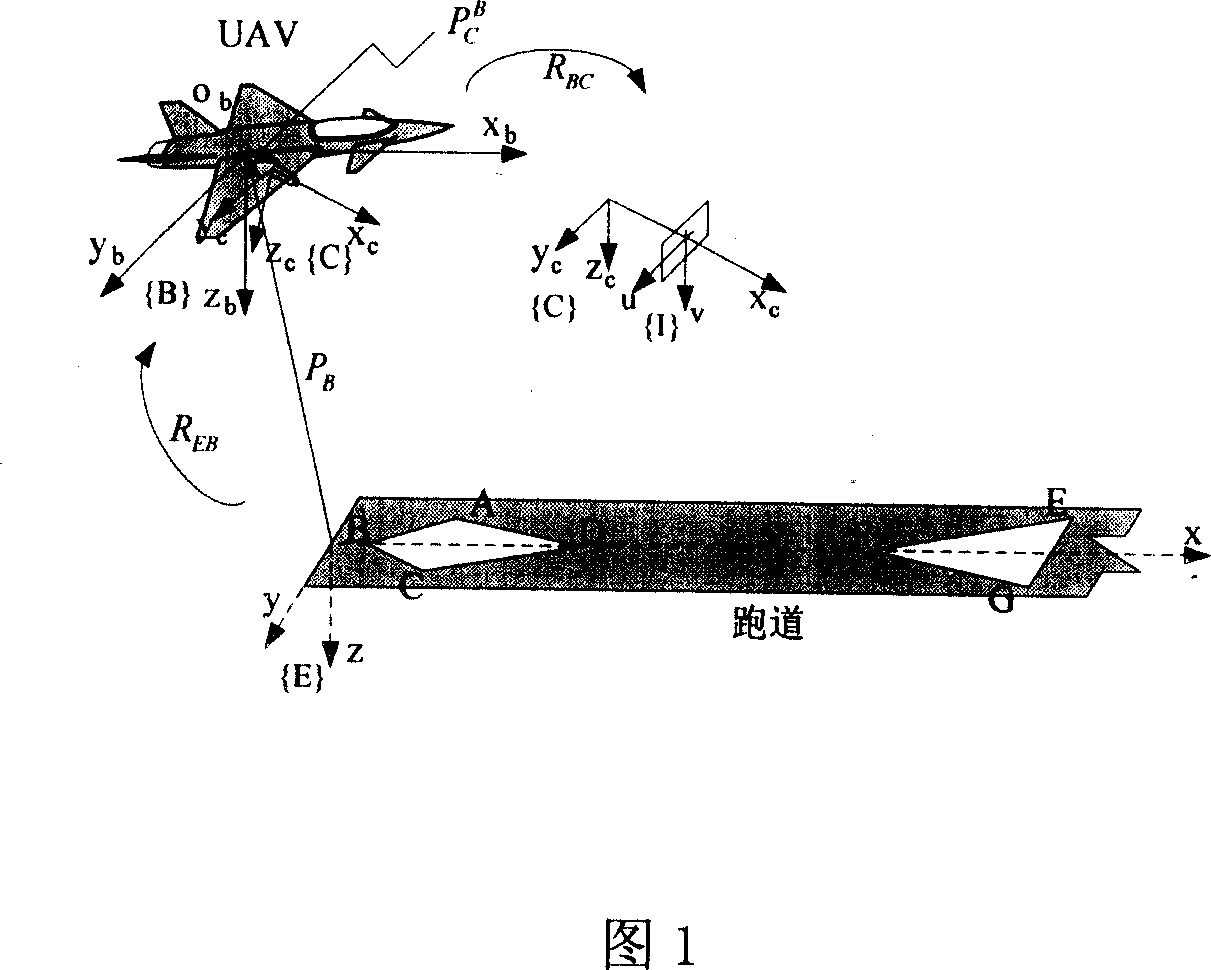

[0098] The present invention is a vision-based autonomous landing navigation system for unmanned aircraft. It consists of two parts: software algorithms and hardware devices.

[0099] The software algorithms comprise a computer vision algorithm and an information fusion and state estimation algorithm.
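As a rough illustration of what an information fusion and state estimation step can look like, the sketch below applies a scalar Kalman measurement update twice to combine two altitude readings. The source text does not specify which estimator the invention uses, so the choice of a Kalman update, the names `ScalarEstimate`, `kalman_update`, and `fuse_altitude`, and all variance values are assumptions made only for this example.

```python
from dataclasses import dataclass


@dataclass
class ScalarEstimate:
    """Gaussian belief about one scalar state (here: altitude in metres)."""
    mean: float
    variance: float


def kalman_update(prior: ScalarEstimate, measurement: float,
                  meas_variance: float) -> ScalarEstimate:
    """Standard scalar Kalman measurement update (hypothetical helper)."""
    # The gain weights the measurement by how uncertain the prior is
    # relative to the sensor.
    gain = prior.variance / (prior.variance + meas_variance)
    mean = prior.mean + gain * (measurement - prior.mean)
    variance = (1.0 - gain) * prior.variance
    return ScalarEstimate(mean, variance)


def fuse_altitude(prior: ScalarEstimate, altimeter_alt: float,
                  vision_alt: float, altimeter_var: float = 1.0,
                  vision_var: float = 4.0) -> ScalarEstimate:
    """Fuse an altimeter reading and a vision-derived altitude in sequence.

    The default variances are illustrative assumptions, not values
    taken from the patent.
    """
    after_altimeter = kalman_update(prior, altimeter_alt, altimeter_var)
    return kalman_update(after_altimeter, vision_alt, vision_var)


if __name__ == "__main__":
    prior = ScalarEstimate(mean=100.0, variance=4.0)
    print(fuse_altitude(prior, altimeter_alt=98.0, vision_alt=99.0))
```

Each update shrinks the variance of the estimate, which is the usual motivation for combining several sensors rather than relying on a single one.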

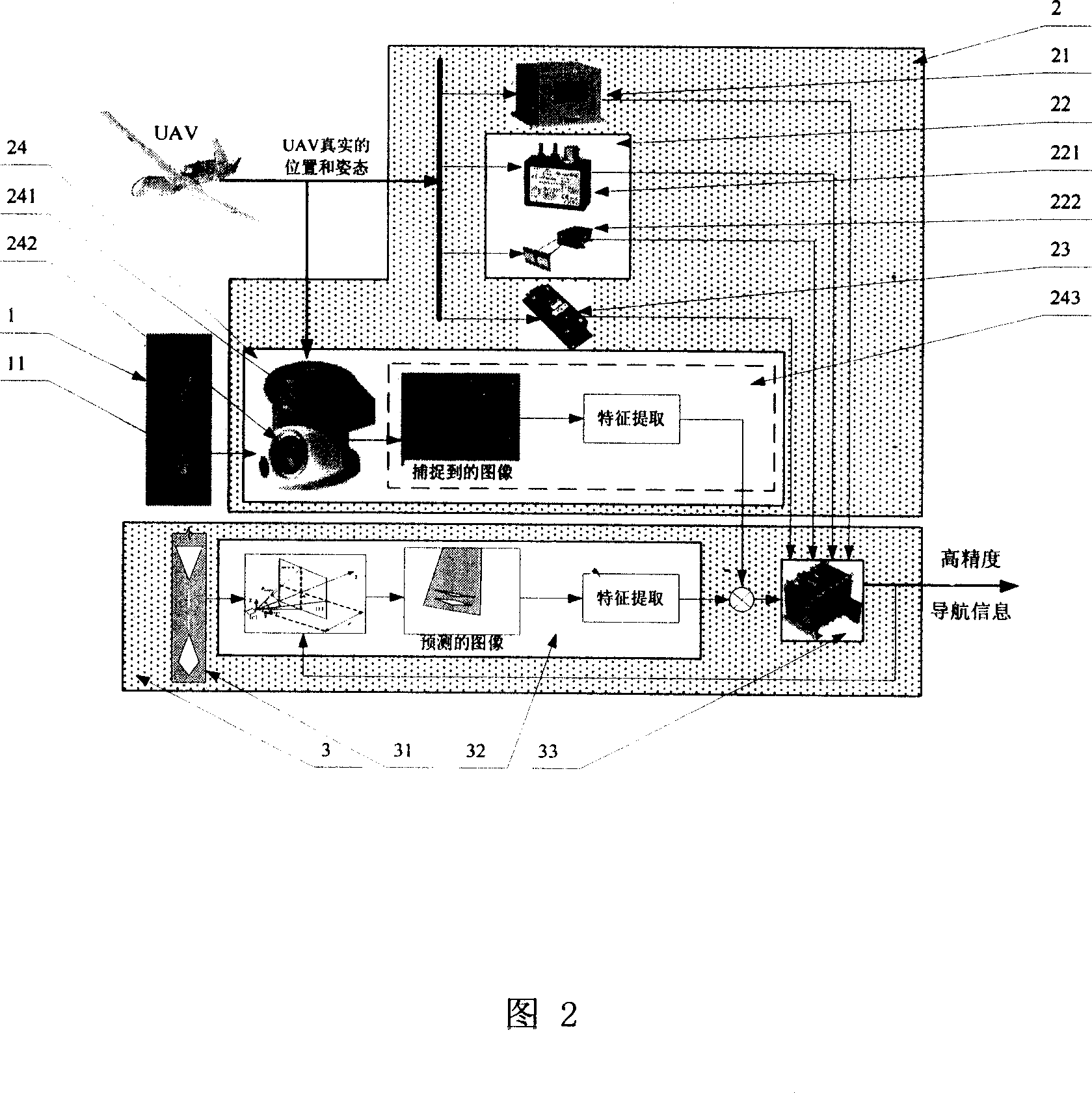

[0100] Referring to Fig. 2, the hardware device includes: a runway feature 1 arranged on the runway plane, an airborne sensor subsystem 2 for measuring the state of the UAV, and an information fusion subsystem 3 for processing the sensor measurement information.

[0101] The airborne sensor subsystem 2 includes an airborne camera system 24, an airborne inertial navigation system 21, an altimeter system 22, and a magnetic compass 23. It measures the real state of the UAV, tracks and analyzes the runway feature 1 through the airborne camera system 24, obtains the measured values of the runway feature points 11, and transmits the measurement information to the information fusion subsystem 3.
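To make the data flow from the airborne sensor subsystem 2 to the information fusion subsystem 3 more concrete, here is a minimal sketch of one measurement packet together with an ideal pinhole projection of runway feature points into image coordinates. The pinhole camera model, the names `CameraIntrinsics`, `SensorPacket`, and `project_points`, and the field layout are assumptions for illustration only; the source text does not describe the camera model or the message format.

```python
from dataclasses import dataclass
from typing import List, Tuple

Point3 = Tuple[float, float, float]
Pixel = Tuple[float, float]


@dataclass
class CameraIntrinsics:
    """Ideal pinhole intrinsics (assumed model, no lens distortion)."""
    fx: float
    fy: float
    cx: float
    cy: float


@dataclass
class SensorPacket:
    """Hypothetical bundle sent to the information fusion subsystem."""
    accel: Point3                 # inertial navigation system: acceleration
    gyro: Point3                  # inertial navigation system: angular rate
    altitude_m: float             # altimeter system
    heading_rad: float            # magnetic compass
    feature_pixels: List[Pixel]   # camera system: runway feature points


def project_points(points_cam: List[Point3],
                   k: CameraIntrinsics) -> List[Pixel]:
    """Project points given in the camera frame onto the image plane."""
    pixels = []
    for x, y, z in points_cam:
        if z <= 0.0:
            # Points at or behind the camera cannot be observed.
            continue
        pixels.append((k.fx * x / z + k.cx, k.fy * y / z + k.cy))
    return pixels


if __name__ == "__main__":
    k = CameraIntrinsics(fx=800.0, fy=800.0, cx=320.0, cy=240.0)
    corners = [(1.0, 2.0, 10.0), (0.0, 0.0, -5.0)]
    packet = SensorPacket(
        accel=(0.0, 0.0, -9.81),
        gyro=(0.0, 0.0, 0.0),
        altitude_m=50.0,
        heading_rad=0.0,
        feature_pixels=project_points(corners, k),
    )
    print(packet.feature_pixels)
```

In a real system the camera would report detected pixel positions directly; the projection function here stands in for the measurement model that an estimator would compare those detections against.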

[...
